# Interpolating Neural Networks

This repo contains the source code for observing **double descent** phenomena with neural networks in **empirical asset pricing data**.

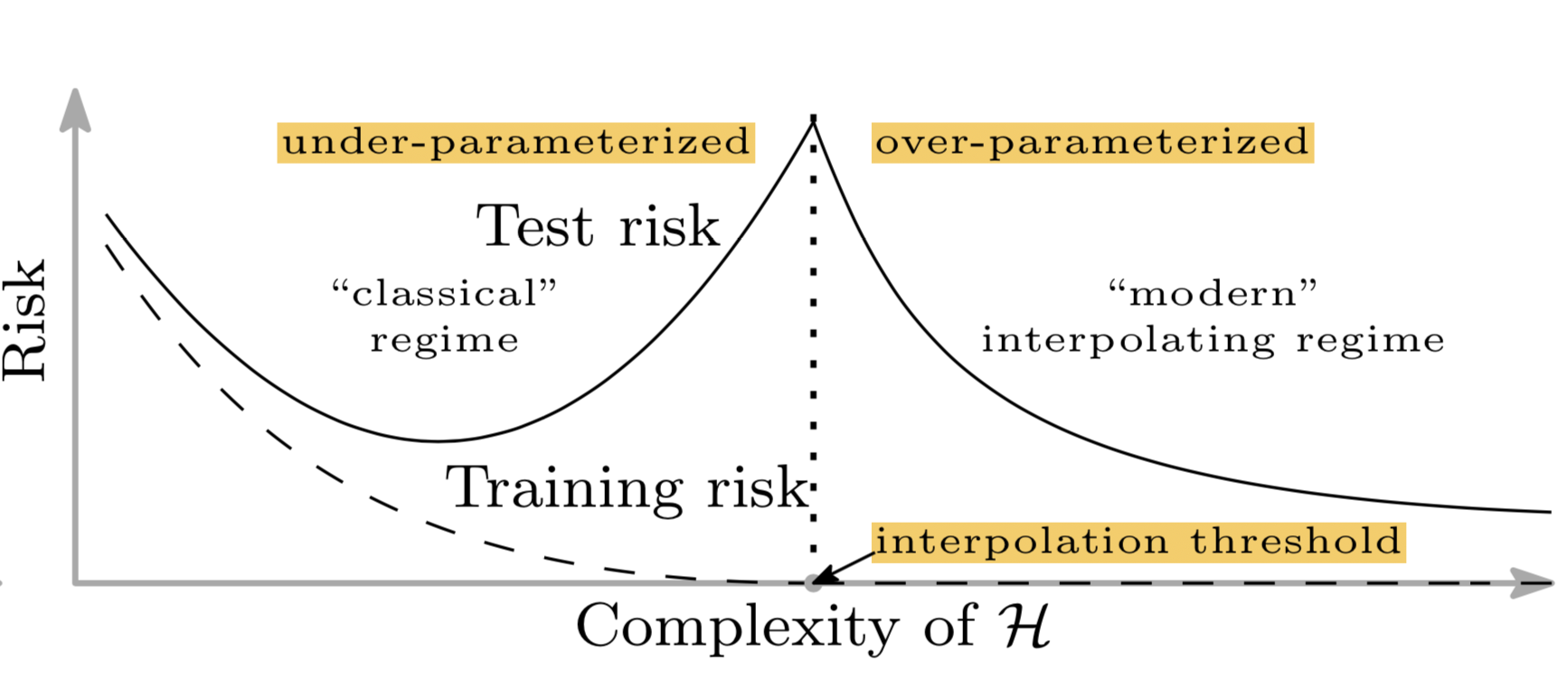

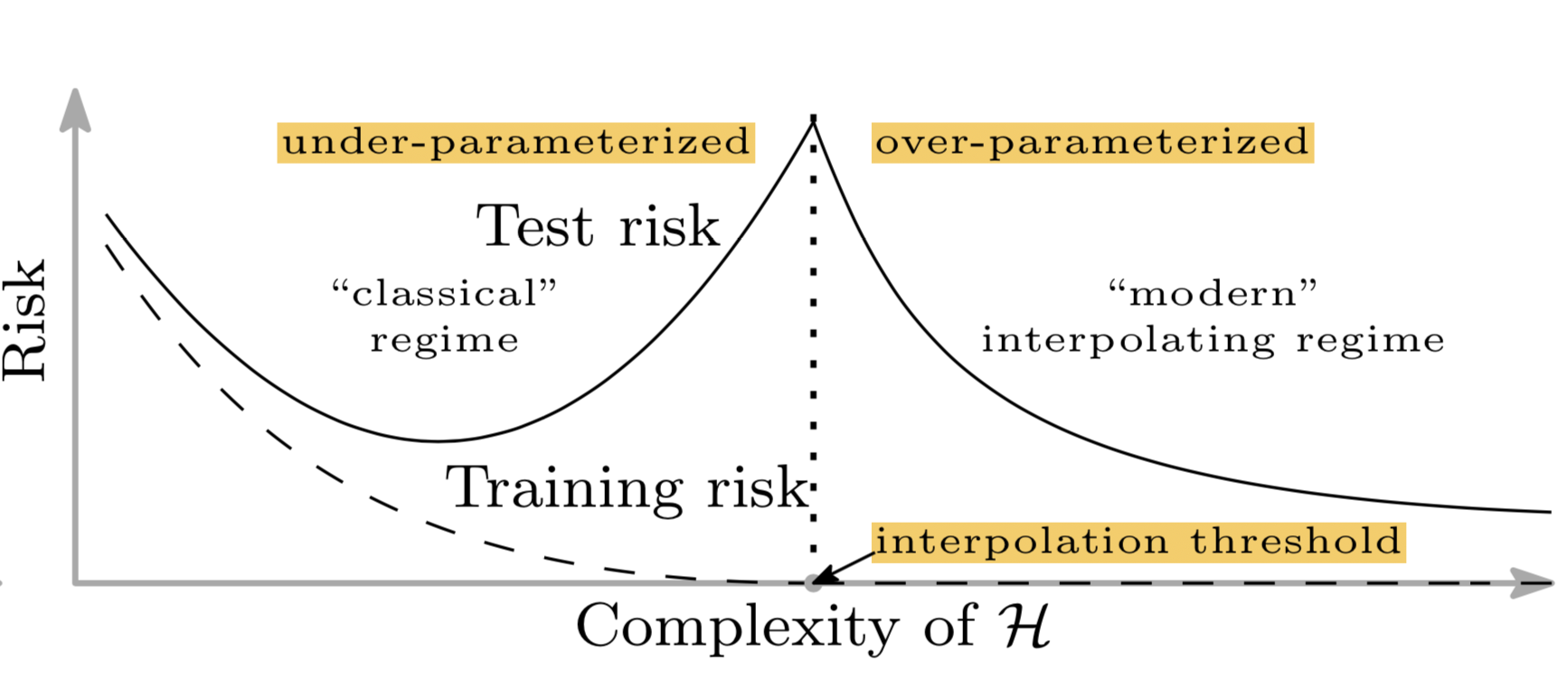

Fig. A new double-U-shaped bias-variance risk curve for deep neural networks. (Image source: [original paper](https://arxiv.org/abs/1812.11118))

Deep learning models are heavily over-parameterized and can often fit the training data perfectly. Under the classical bias-variance trade-off, this should be a disaster: a model that memorizes its training set is expected to generalize poorly to unseen test data. In practice, however, such “overfitted” (training error = 0) deep learning models often still perform well on out-of-sample test data (see the figure above).

This is likely due to two reasons:

- The number of parameters is not a good measure of inductive bias, that is, the set of assumptions a learning algorithm relies on to predict for unseen samples.

- Equipped with a larger model, we might be able to discover larger function classes and further find interpolating functions that have smaller norm and are thus “simpler”.
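The second point can be illustrated with a small linear sketch. This is not the code in this repo: it assumes random Gaussian features and random targets, and it uses NumPy's `np.linalg.lstsq`, which returns the minimum-norm solution when a system has more unknowns than equations. Because each larger feature set contains the smaller one, every smaller interpolant (padded with zeros) remains a valid candidate, so the minimum norm cannot increase as features are added.

```python
import numpy as np

# Illustrative setup (an assumption, not the repo's data): 20 samples and
# up to 200 random features, with random targets.
rng = np.random.default_rng(0)
n_samples, max_features = 20, 200
X = rng.standard_normal((n_samples, max_features))
y = rng.standard_normal(n_samples)


def min_norm_interpolant(features, targets):
    """Return the smallest-norm weights w with features @ w == targets.

    For an underdetermined system, lstsq picks the solution with the
    smallest Euclidean norm among all exact solutions.
    """
    weights, *_ = np.linalg.lstsq(features, targets, rcond=None)
    return weights


feature_counts = [20, 40, 100, 200]  # nested: each set contains the previous one
norms, residuals = [], []
for p in feature_counts:
    w = min_norm_interpolant(X[:, :p], y)
    norms.append(float(np.linalg.norm(w)))
    residuals.append(float(np.max(np.abs(X[:, :p] @ w - y))))
    print(f"features={p:4d}  max train residual={residuals[-1]:.2e}  ||w||={norms[-1]:.3f}")
```

Every model in the loop fits the training targets exactly, yet the weight norm shrinks as the function class grows, which is the sense in which the larger model finds a “simpler” interpolating function.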

There are many other explanations for this better-than-expected generalization, such as smaller intrinsic dimension, heterogeneous layer robustness, and the lottery ticket hypothesis. To read about them in detail, see Lilian Weng's [article](https://lilianweng.github.io/posts/2019-03-14-overfit/#intrinsic-dimension).

## Usage

In this work, we try to observe double descent in empirical asset pricing. Observing it there is notable because financial data are very noisy compared with image datasets, which have a comparatively high signal-to-noise ratio.

```
$ pip install interpolating-neural-networks
```

## Notes:

- No regularization, such as weight decay or dropout, is used.

- Each network is trained for a long time to achieve near-zero training risk. The learning rate is adjusted differently for models of different sizes.
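The notes above can be written down as a sweep configuration. The sketch below is only an illustration under stated assumptions: `RunConfig`, `mlp_param_count`, `learning_rate_for`, and `build_sweep` are hypothetical names that do not come from this package, the network is assumed to have a single hidden layer, and the rule that shrinks the learning rate for wider networks is a placeholder, since the exact adjustment used in this repo is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    """Settings for one model size in the sweep (hypothetical structure)."""
    width: int
    n_params: int
    learning_rate: float
    weight_decay: float = 0.0  # no weight decay, as stated in the notes
    dropout_rate: float = 0.0  # no dropout, as stated in the notes


def mlp_param_count(n_inputs, width, n_outputs=1):
    """Count weights and biases of a one-hidden-layer fully connected network."""
    hidden = n_inputs * width + width
    output = width * n_outputs + n_outputs
    return hidden + output


def learning_rate_for(width, base_lr=0.01, base_width=64):
    """Placeholder rule (an assumption): smaller steps for wider networks."""
    return base_lr * min(1.0, base_width / width)


def build_sweep(n_inputs, widths):
    """Build one unregularized configuration per hidden-layer width."""
    return [
        RunConfig(w, mlp_param_count(n_inputs, w), learning_rate_for(w))
        for w in widths
    ]


sweep = build_sweep(n_inputs=20, widths=[4, 64, 1024])
for cfg in sweep:
    print(cfg)
```

Comparing `n_params` with the number of training samples gives a rough idea of which runs are under-parameterized and which are large enough to interpolate the training set.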

## Citation

If you find this method and/or code useful, please consider citing:

```
@misc{interpolatingneuralnetworks,
  author = {Akash Sonowal and Shankar Prawesh},
  title = {Interpolating Neural Networks in Asset Pricing},
  url = {https://github.com/akashsonowal/interpolating-neural-networks},
  year = {2022},
  note = "Version 0.0.1"
}
```

Raw data

{

"_id": null,

"home_page": "https://github.com/akashsonowal/interpolating-neural-networks/",

"name": "interpolating-neural-networks",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": "",

"keywords": "double descent,deep learning,generalization,asset pricing",

"author": "Akash Sonowal",

"author_email": "work.akashsonowal@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/cf/cd/07370c3f489a977f8fc4077a777f0a9e69afa1af27a60e4e41c4793f8ecd/interpolating-neural-networks-0.0.2.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "MIT",

"summary": "Interpolating Neural Networks in Asset Pricing Data. Supports Distributed Training in TensorFlow.",

"version": "0.0.2",

"split_keywords": [

"double descent",

"deep learning",

"generalization",

"asset pricing"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "1411fec7deb543ac77f2d7c262cd16b116a0d53d99afad19fce851592d94dfa6",

"md5": "cc28c4c5dbdc2922e5ab04555d1e5422",

"sha256": "c70a94f3726522a81fdbf1afef54b50ed3c3036f4f8de0e07b9d4249b36dab79"

},

"downloads": -1,

"filename": "interpolating_neural_networks-0.0.2-py3-none-any.whl",

"has_sig": false,

"md5_digest": "cc28c4c5dbdc2922e5ab04555d1e5422",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.8",

"size": 13008,

"upload_time": "2023-04-15T16:08:46",

"upload_time_iso_8601": "2023-04-15T16:08:46.157482Z",

"url": "https://files.pythonhosted.org/packages/14/11/fec7deb543ac77f2d7c262cd16b116a0d53d99afad19fce851592d94dfa6/interpolating_neural_networks-0.0.2-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "cfcd07370c3f489a977f8fc4077a777f0a9e69afa1af27a60e4e41c4793f8ecd",

"md5": "b1ed90d15399c60e2f5efcb243e0b01b",

"sha256": "4f5a16333a5eeb122d26699e6af2788b4e69d4c1884f222f6e90898fcad0da52"

},

"downloads": -1,

"filename": "interpolating-neural-networks-0.0.2.tar.gz",

"has_sig": false,

"md5_digest": "b1ed90d15399c60e2f5efcb243e0b01b",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.8",

"size": 12238,

"upload_time": "2023-04-15T16:08:47",

"upload_time_iso_8601": "2023-04-15T16:08:47.751553Z",

"url": "https://files.pythonhosted.org/packages/cf/cd/07370c3f489a977f8fc4077a777f0a9e69afa1af27a60e4e41c4793f8ecd/interpolating-neural-networks-0.0.2.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-04-15 16:08:47",

"github": true,

"gitlab": false,

"bitbucket": false,

"github_user": "akashsonowal",

"github_project": "interpolating-neural-networks",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [

{

"name": "tensorflow",

"specs": []

},

{

"name": "scikit-learn",

"specs": []

},

{

"name": "numpy",

"specs": []

},

{

"name": "pandas",

"specs": []

},

{

"name": "wandb",

"specs": []

}

],

"lcname": "interpolating-neural-networks"

}