| Name | lux-explainer |

| Version | 1.3.2 |

| home_page | None |

| Summary | Universal Local Rule-based Explainer |

| upload_time | 2024-09-19 10:34:25 |

| maintainer | None |

| docs_url | None |

| author | None |

| requires_python | >=3.8 |

| license | MIT License Copyright (c) 2021 sbobek Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. |

| keywords | xai, explainability, model-agnostic, rule-based |

| VCS | |

| bugtrack_url | |

| requirements | No requirements were recorded. |

| Travis-CI | No Travis. |

| coveralls test coverage | No coveralls. |


# LUX (Local Universal Rule-based Explainer)

## Main features

<img align="right" src="https://raw.githubusercontent.com/sbobek/lux/main/pix/lux-logo.png" width="200">

* Model-agnostic, rule-based and visual local explanations of black-box ML models

* Integrated counterfactual explanations

* Rule-based explanations (that are executable at the same time)

* Oblique-tree backbone, which makes explanations of linear decision boundaries more reliable

* Integration with [Shapley values](https://shap.readthedocs.io/en/latest/) or [Lime](https://github.com/marcotcr/lime) importances (or any other explainer that produces importances), which helps in generating high-quality rules

## About

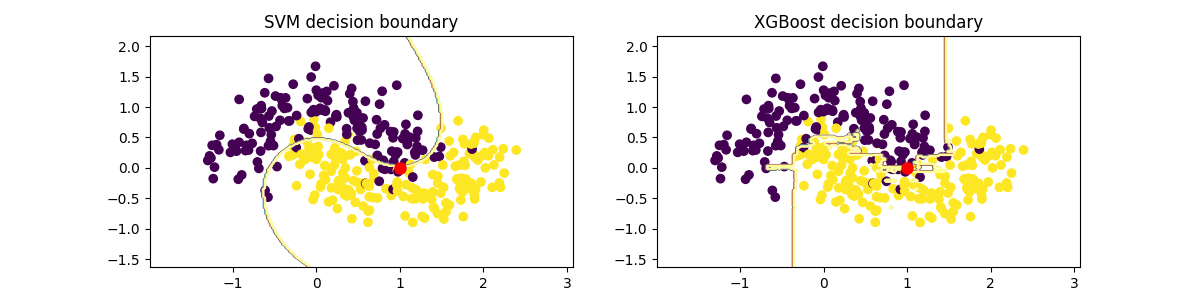

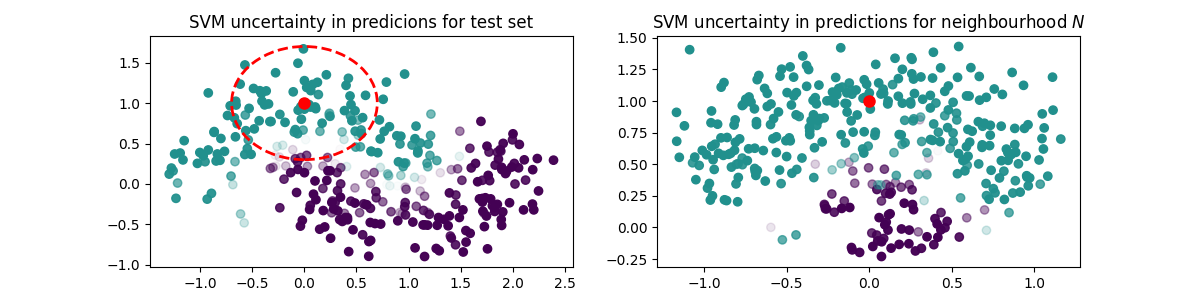

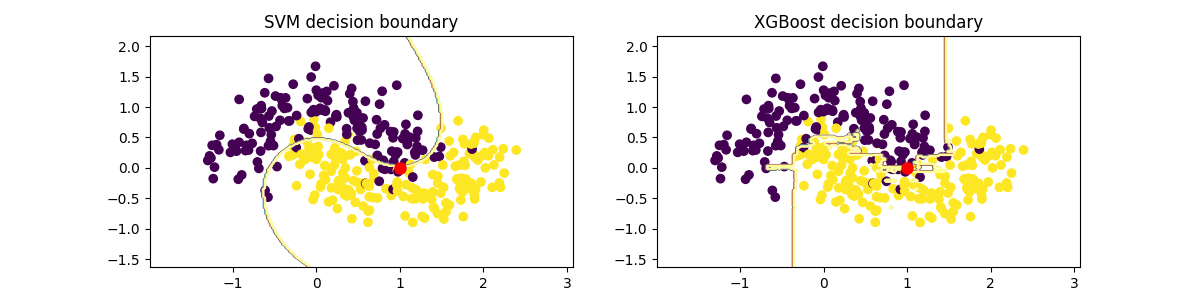

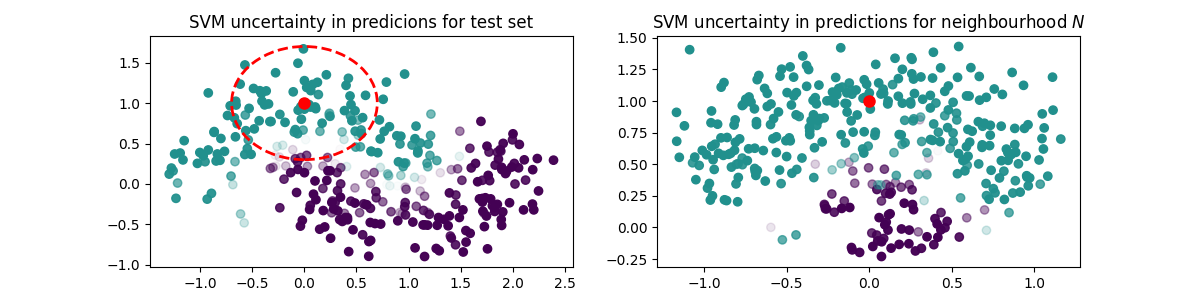

The workflow for LUX looks as follows:

- You train an arbitrary selected machine learning model on your train dataset. The only requirements is that the model is able to output probabilities.

- Next, you generate neighbourhood of an instance you wish to explain and you feed this neighbourhood to your model.

- You obtain a decision stump, which locally explains the model and is executable by [HeaRTDroid](https://heartdroid.re) inference engine

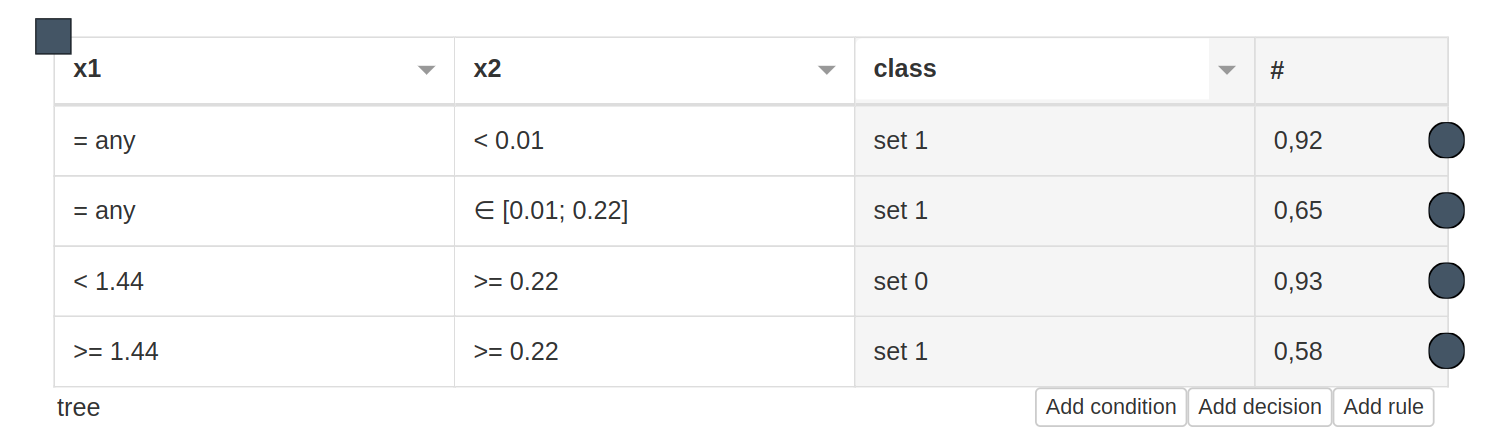

- You can obtain explanation for a selected instance (the number after # represents confidence of an explanation):

```

['IF x2 < 0.01 AND THEN class = 1 # 0.9229009792453621']

```
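The neighbourhood step in the workflow above can be illustrated with a small, dependency-free sketch. It is not LUX's own sampling procedure: it simply picks the `k` training points closest (by Euclidean distance) to the instance and queries a stand-in probability function for them. The names `nearest_neighbourhood` and `toy_predict_proba` are hypothetical and exist only for this illustration.

```python
import math

def nearest_neighbourhood(instance, data, k):
    """Return the k rows of `data` closest to `instance` (Euclidean distance)."""
    return sorted(data, key=lambda row: math.dist(row, instance))[:k]

def toy_predict_proba(rows):
    """Stand-in for a black-box model: P(class 1) grows with the first feature."""
    probs = []
    for row in rows:
        p1 = 1.0 / (1.0 + math.exp(-(row[0] - 3.0)))
        probs.append([1.0 - p1, p1])
    return probs

# Toy training data (assumption: two numeric features per row)
train = [[1.0, 0.5], [2.0, 1.5], [2.8, 1.0], [3.1, 1.2], [4.0, 0.8], [5.0, 2.0]]
instance = [3.0, 1.1]

# Select the local neighbourhood and ask the "model" for class probabilities
neighbourhood = nearest_neighbourhood(instance, train, k=4)
probabilities = toy_predict_proba(neighbourhood)
for row, proba in zip(neighbourhood, probabilities):
    print(row, [round(p, 3) for p in proba])
```

In the real library, the `neighborhood_size` argument and the `predict_proba` callable passed to `LUX` play the roles of `k` and `toy_predict_proba`.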

## Installation

```

pip install lux-explainer

```

If you want to use LUX with [JupyterLab](https://jupyter.org/), install it and run:

```

pip install jupyterlab

jupyter lab

```

**Caution**: If you want to use LUX with categorical data, it is advised to use the [multiprocessing gower distance](https://github.com/sbobek/gower/tree/add-multiprocessing) package (due to the high computational complexity of the problem).
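For readers unfamiliar with the Gower distance, the following is a minimal sketch of the standard formula for mixed numeric and categorical records. It is not the API of the `gower` package; the function name `gower_distance` and the `numeric_ranges` argument are assumptions made for this example. Computing this distance for every pair of rows scales quadratically with the number of rows, which is one reason a multiprocessing implementation helps.

```python
def gower_distance(a, b, numeric_ranges):
    """Gower distance between two records given as dicts.

    `numeric_ranges` maps each numeric feature to its (max - min) range in
    the data; every feature not listed there is treated as categorical.
    """
    total = 0.0
    for feature in a:
        if feature in numeric_ranges:
            span = numeric_ranges[feature]
            # Range-normalised absolute difference (0 if the feature is constant)
            total += abs(a[feature] - b[feature]) / span if span else 0.0
        else:
            # Simple matching: 0 if the categories agree, 1 otherwise
            total += 0.0 if a[feature] == b[feature] else 1.0
    return total / len(a)

x = {"age": 30, "income": 40000, "colour": "red"}
y = {"age": 40, "income": 50000, "colour": "blue"}
ranges = {"age": 50, "income": 100000}
print(round(gower_distance(x, y, ranges), 4))
```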

## Usage

* For complete usage, see [lux_usage_example.ipynb](https://raw.githubusercontent.com/sbobek/lux/main/examples/lux_usage_example.ipynb)

* For a usage example with SHAP integration, see [lux_usage_example_shap.ipynb](https://raw.githubusercontent.com/sbobek/lux/main/examples/lux_usage_example_shap.ipynb)

### Simple example on Iris dataset

``` python

from lux.lux import LUX

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn import svm

import numpy as np

import pandas as pd

# import some data to play with

iris = datasets.load_iris()

features = ['sepal_length','sepal_width','petal_length','petal_width']

target = 'class'

#create dataframe with column names as strings (LUX accepts only DataFrames with string column names)

df_iris = pd.DataFrame(iris.data,columns=features)

df_iris[target] = iris.target

#train classifier

train, test = train_test_split(df_iris)

clf = svm.SVC(probability=True)

clf.fit(train[features],train[target])

clf.score(test[features],test[target])

#pick some instance from dataset

iris_instance = train[features].sample(1).values

iris_instance

#train lux on a neighbourhood of 20 instances

lux = LUX(predict_proba = clf.predict_proba, neighborhood_size=20,max_depth=2, node_size_limit = 1, grow_confidence_threshold = 0 )

lux.fit(train[features], train[target], instance_to_explain=iris_instance,class_names=[0,1,2])

#see the justification of the instance being classified for a given class

lux.justify(np.array(iris_instance))

```

The above code should give you the answer as follows:

```

['IF petal_length >= 5.15 THEN class = 2 # 0.9833409059468439\n']

```
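If the rule needs to be processed programmatically, the string can be split into its parts. The sketch below assumes the textual format seen in the outputs in this README (`IF ... THEN target = label # confidence`); the helper `parse_rule` is hypothetical and not part of LUX.

```python
import re

# Pattern inferred from the example outputs in this README (an assumption)
RULE_PATTERN = re.compile(
    r"IF\s+(?P<conditions>.*?)\s+THEN\s+(?P<target>\w+)\s*=\s*(?P<label>\S+)"
    r"\s*#\s*(?P<confidence>[\d.]+)"
)

def parse_rule(rule):
    """Split a rule string into conditions, target, label and confidence."""
    match = RULE_PATTERN.search(rule)
    if match is None:
        raise ValueError(f"Unrecognised rule format: {rule!r}")
    # Conditions are joined with AND; drop empty fragments from a trailing AND
    parts = re.split(r"\s+AND\b\s*", match["conditions"])
    return {
        "conditions": [p.strip() for p in parts if p.strip()],
        "target": match["target"],
        "label": match["label"],
        "confidence": float(match["confidence"]),
    }

parsed = parse_rule("IF petal_length >= 5.15 THEN class = 2 # 0.9833409059468439\n")
print(parsed["conditions"], parsed["label"], parsed["confidence"])
```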

Alternatively, one can get a counterfactual explanation for a given instance by calling:

``` python

cf = lux.counterfactual(np.array(iris_instance), train[features], counterfactual_representative='nearest', topn=1)[0]

print(f"Counterfactual for {iris_instance} to change from class {lux.predict(np.array(iris_instance))[0]} to class {cf['prediction']}: \n{cf['counterfactual']}")

```

The result from the above query should look as follows:

```

Counterfactual for [[7.7 2.6 6.9 2.3]] to change from class 2 to class 1:

sepal_length 6.9

sepal_width 3.1

petal_length 5.1

petal_width 2.3

```
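To see which features have to change, the instance and the counterfactual can be compared feature by feature. The following sketch hard-codes the values from the example output above; `feature_changes` is a hypothetical helper, not a LUX function.

```python
features = ["sepal_length", "sepal_width", "petal_length", "petal_width"]
instance = [7.7, 2.6, 6.9, 2.3]
counterfactual = {"sepal_length": 6.9, "sepal_width": 3.1,
                  "petal_length": 5.1, "petal_width": 2.3}

def feature_changes(instance, counterfactual, features, tol=1e-9):
    """Return {feature: delta} for every feature that differs."""
    changes = {}
    for name, old in zip(features, instance):
        delta = counterfactual[name] - old
        if abs(delta) > tol:
            changes[name] = round(delta, 2)
    return changes

changes = feature_changes(instance, counterfactual, features)
print(changes)
```

Note that the counterfactual's `petal_length` of 5.1 lies below the 5.15 threshold in the rule shown earlier, which is consistent with the prediction moving from class 2 to class 1.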

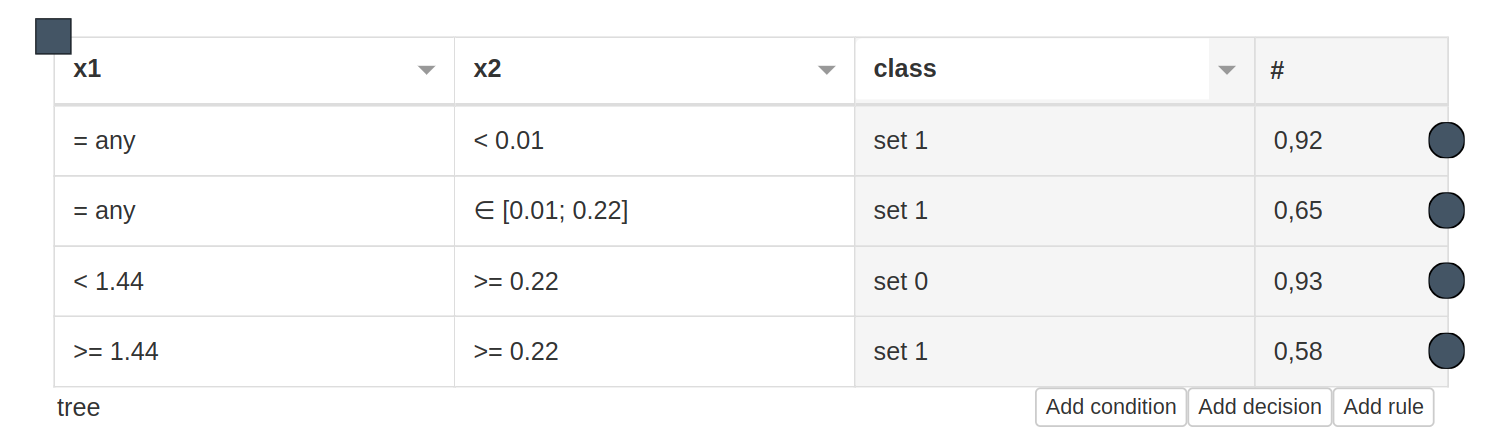

### Rule-based model for local uncertain explanations

You can obtain the whole rule-based model for the local uncertain explanation that LUX generated for a given instance by running the following code:

``` python

#have a look at the entire rule-based model that can be executed with https://heartdroid.re

print(lux.to_HMR())

```

This will generate a model that can later be executed by [HeaRTDroid](https://heartdroid.re), a rule-based inference engine for Android mobile devices.

Additionally, the HMR format below, which is used by [HeaRTDroid](https://heartdroid.re), allows explanations to be visualized as decision tables in the [HWEd](https://heartdroid.re/hwed/#/) online editor.

```

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% TYPES DEFINITIONS %%%%%%%%%%%%%%%%%%%%%%%%%%

xtype [

name: petal_length,

base:numeric,

domain : [-100000 to 100000]].

xtype [

name: class,

base:symbolic,

domain : [1,0,2]].

%%%%%%%%%%%%%%%%%%%%%%%%% ATTRIBUTES DEFINITIONS %%%%%%%%%%%%%%%%%%%%%%%%%%

xattr [ name: petal_length,

type:petal_length,

class:simple,

comm:out ].

xattr [ name: class,

type:class,

class:simple,

comm:out ].

%%%%%%%%%%%%%%%%%%%%%%%% TABLE SCHEMAS DEFINITIONS %%%%%%%%%%%%%%%%%%%%%%%%

xschm tree : [petal_length]==> [class].

xrule tree/0:

[petal_length lt 3.05] ==> [class set 0]. # 0.9579256691362875

xrule tree/1:

[petal_length gte 3.05, petal_length lt 5.15] ==> [class set 1]. # 0.8398308552545226

xrule tree/2:

[petal_length gte 3.05, petal_length gte 5.15] ==> [class set 2]. # 0.9833409059468439

```
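The `xrule` entries above can also be read as plain threshold checks. The sketch below is not HeaRTDroid and not a full HMR parser: it only understands the `lt` and `gte` operators and the `[conditions] ==> [attribute set value]. # confidence` layout that appear in this example. The names `parse_rules` and `classify` are hypothetical.

```python
import operator
import re

# Only the two operators that occur in the example above are supported
OPERATORS = {"lt": operator.lt, "gte": operator.ge}

RULE_LINE = re.compile(
    r"\[(?P<conds>[^\]]*)\]\s*==>\s*\[(?P<attr>\w+) set (?P<value>\w+)\]\.\s*#\s*(?P<conf>[\d.]+)"
)

def parse_rules(hmr_text):
    """Extract (conditions, label, confidence) triples from xrule lines."""
    rules = []
    for match in RULE_LINE.finditer(hmr_text):
        conditions = []
        for cond in match["conds"].split(","):
            attribute, op, threshold = cond.split()
            conditions.append((attribute, OPERATORS[op], float(threshold)))
        rules.append((conditions, match["value"], float(match["conf"])))
    return rules

def classify(rules, record):
    """Return (label, confidence) of the first rule whose conditions all hold."""
    for conditions, label, confidence in rules:
        if all(op(record[attr], threshold) for attr, op, threshold in conditions):
            return label, confidence
    return None

hmr_rules = """
xrule tree/0:
[petal_length lt 3.05] ==> [class set 0]. # 0.9579256691362875
xrule tree/1:
[petal_length gte 3.05, petal_length lt 5.15] ==> [class set 1]. # 0.8398308552545226
xrule tree/2:
[petal_length gte 3.05, petal_length gte 5.15] ==> [class set 2]. # 0.9833409059468439
"""

rules = parse_rules(hmr_rules)
print(classify(rules, {"petal_length": 6.9}))
```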

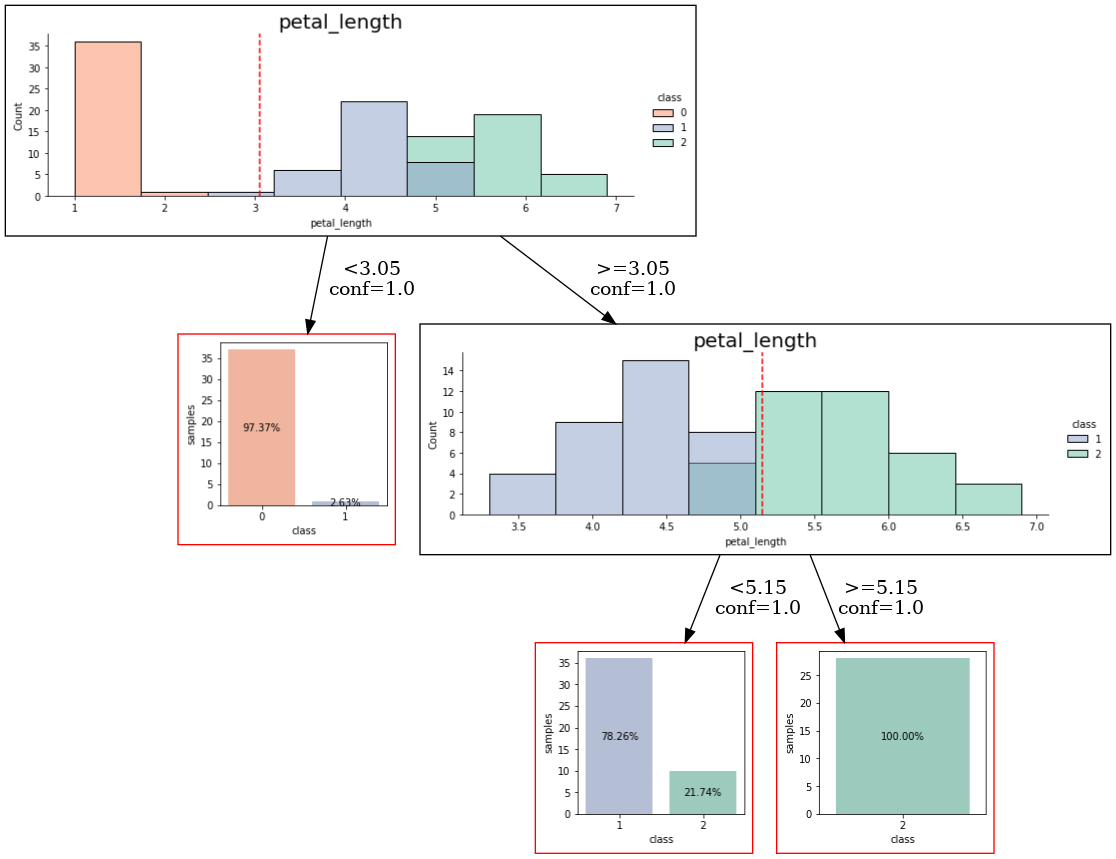

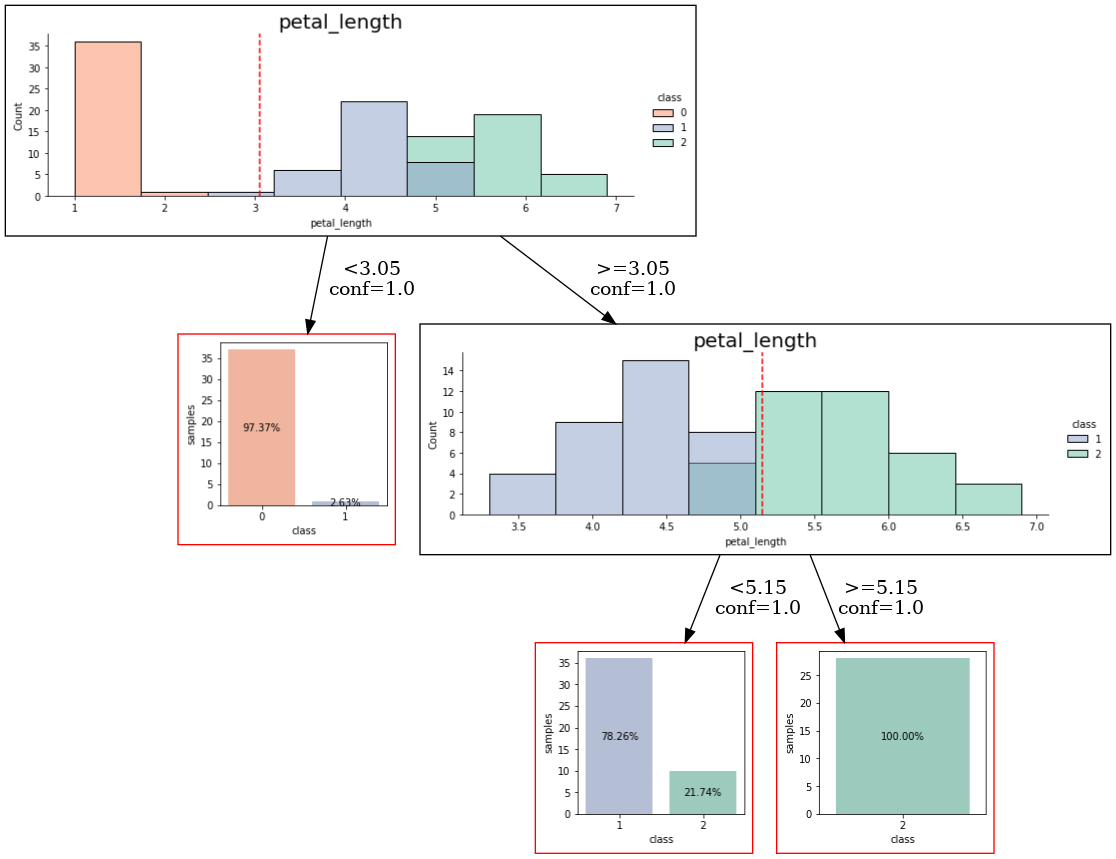

### Visualization of the local uncertain explanation

Similarly, you can obtain a visualization of the rule-based model in the form of a decision tree by executing the following code.

``` python

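import graphviz
from IPython.display import Image

# export the fitted local tree to a Graphviz DOT file
lux.uid3.tree.save_dot('tree.dot',fmt='.2f',visual=True, background_data=train)

# load the DOT file as a graphviz Source object
gvz=graphviz.Source.from_file('tree.dot')

# render to PNG with the Graphviz `dot` command ("!" is Jupyter shell syntax) and display it
!dot -Tpng tree.dot > tree.png
Image('tree.png')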

```

The code should yield a decision-tree image; the exact tree depends on the instance that was selected.
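The `!dot` line in the snippet above only works inside a Jupyter or IPython session. Outside a notebook, the same rendering step can be done with the standard library, as in this sketch. It assumes the Graphviz `dot` executable is installed and on the `PATH`; the helper names `dot_to_png_command` and `render_dot` are hypothetical.

```python
import shutil
import subprocess

def dot_to_png_command(dot_path, png_path):
    """Build the Graphviz command equivalent to `dot -Tpng dot_path > png_path`."""
    return ["dot", "-Tpng", dot_path, "-o", png_path]

def render_dot(dot_path, png_path):
    """Render a DOT file to PNG; return False if the `dot` binary is missing."""
    if shutil.which("dot") is None:
        return False
    subprocess.run(dot_to_png_command(dot_path, png_path), check=True)
    return True

# Show the command that would be executed for the file written by save_dot
command = dot_to_png_command("tree.dot", "tree.png")
print(" ".join(command))
```

After `save_dot` has written `tree.dot`, calling `render_dot('tree.dot', 'tree.png')` produces the PNG without relying on notebook shell syntax.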

# Cite this work

The software is a direct implementation of the method described in the following paper:

```

@misc{bobek2023local,

title={Local Universal Explainer ({LUX}) -- a rule-based explainer with factual, counterfactual and visual explanations},

author={Szymon Bobek and Grzegorz J. Nalepa},

year={2023},

eprint={2310.14894},

archivePrefix={arXiv},

primaryClass={cs.AI}

}

```

Raw data

{

"_id": null,

"home_page": null,

"name": "lux-explainer",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": null,

"keywords": "xai, explainability, model-agnostic, rule-based",

"author": null,

"author_email": "Szymon Bobek <szymon.bobek@gmail.com>",

"download_url": "https://files.pythonhosted.org/packages/37/be/966bd41ba36bd16535d416d7b5e540d2843ff0c4fafb5316dcab69b2198b/lux_explainer-1.3.2.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT License Copyright (c) 2021 sbobek Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. ",

"summary": "Universal Local Rule-based Explainer",

"version": "1.3.2",

"project_urls": {

"Documentation": "https://lux-explainer.readthedocs.org",

"Homepage": "https://github.com/sbobek/lux",

"Issues": "https://github.com/sbobek/lux/issues"

},

"split_keywords": [

"xai",

" explainability",

" model-agnostic",

" rule-based"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "03129936e2791e07ce90c4f93e09b4356faeb383cb88bb3d506c3a79721e5ae1",

"md5": "bededea173b72a8a88bd52824c9b52d8",

"sha256": "885befb89d142fb35c1260dc05e5cb6f3732586b78891c33c7798e46e08c2614"

},

"downloads": -1,

"filename": "lux_explainer-1.3.2-py3-none-any.whl",

"has_sig": false,

"md5_digest": "bededea173b72a8a88bd52824c9b52d8",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.8",

"size": 50976,

"upload_time": "2024-09-19T10:34:23",

"upload_time_iso_8601": "2024-09-19T10:34:23.624567Z",

"url": "https://files.pythonhosted.org/packages/03/12/9936e2791e07ce90c4f93e09b4356faeb383cb88bb3d506c3a79721e5ae1/lux_explainer-1.3.2-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "37be966bd41ba36bd16535d416d7b5e540d2843ff0c4fafb5316dcab69b2198b",

"md5": "347b08741037ef7f0cc4340ead8e5be0",

"sha256": "543cd215b35cb789b709718d0ead6ffb276d08f174ff8b3e06072e8952aeeac2"

},

"downloads": -1,

"filename": "lux_explainer-1.3.2.tar.gz",

"has_sig": false,

"md5_digest": "347b08741037ef7f0cc4340ead8e5be0",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.8",

"size": 47757,

"upload_time": "2024-09-19T10:34:25",

"upload_time_iso_8601": "2024-09-19T10:34:25.564258Z",

"url": "https://files.pythonhosted.org/packages/37/be/966bd41ba36bd16535d416d7b5e540d2843ff0c4fafb5316dcab69b2198b/lux_explainer-1.3.2.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-09-19 10:34:25",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "sbobek",

"github_project": "lux",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"requirements": [],

"lcname": "lux-explainer"

}
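The `digests` entries in the raw data can be used to check a downloaded file. The following is a minimal sketch using only the standard library; the helper names `sha256_of` and `matches_digest` are hypothetical, and the path passed to them is whatever location the file was saved to.

```python
import hashlib

def sha256_of(path, chunk_size=65536):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def matches_digest(path, expected_sha256):
    """Return True if the file's SHA-256 digest equals the expected value."""
    return sha256_of(path) == expected_sha256.lower()
```

For example, `matches_digest('lux_explainer-1.3.2.tar.gz', '543cd215b35cb789b709718d0ead6ffb276d08f174ff8b3e06072e8952aeeac2')` compares a locally saved sdist against the `sha256` value listed above.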