# MultiCOMBO

Multilingual POS-Tagger and Dependency-Parser with [COMBO-pytorch](https://gitlab.clarin-pl.eu/syntactic-tools/combo) and [spaCy](https://spacy.io)

## Basic usage

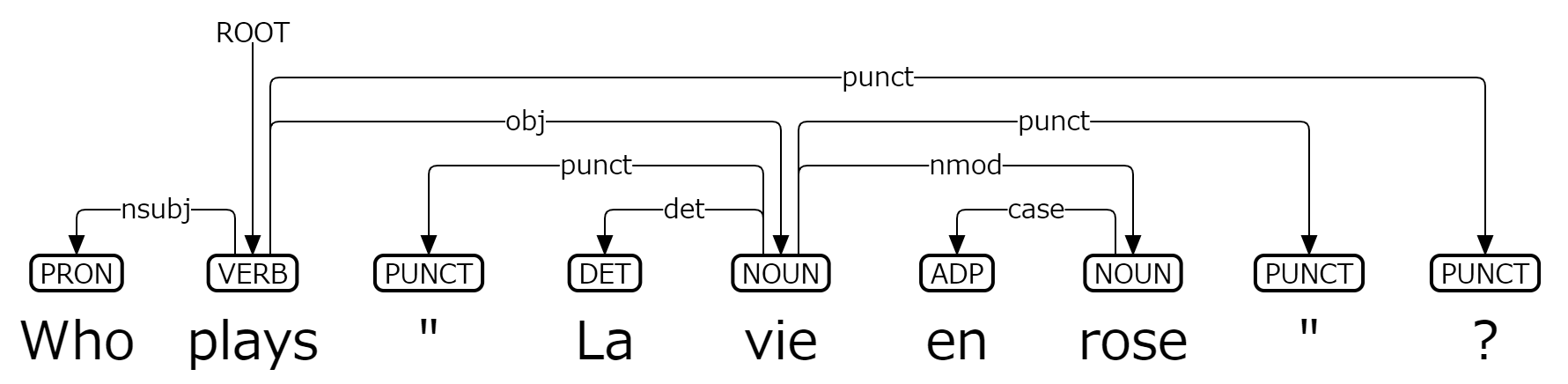

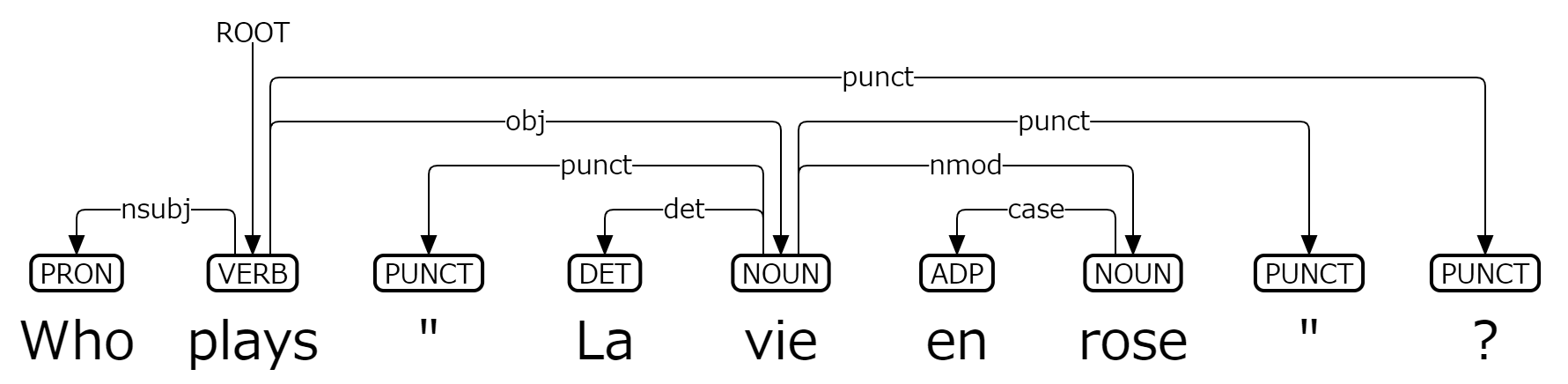

```py
>>> import multicombo
>>> nlp=multicombo.load()
>>> doc=nlp('Who plays "La vie en rose"?')
>>> print(multicombo.to_conllu(doc))
# text = Who plays "La vie en rose"?
1	Who	_	PRON	_	PronType=Int	2	nsubj	_	Translit=who
2	plays	_	VERB	_	Mood=Ind|Number=Sing|Person=3|Tense=Pres|VerbForm=Fin	0	root	_	_
3	"	_	PUNCT	_	_	5	punct	_	SpaceAfter=No
4	La	_	DET	_	Definite=Def|Gender=Fem|Number=Sing|PronType=Art	5	det	_	Translit=la
5	vie	_	NOUN	_	Gender=Fem|Number=Sing	2	obj	_	_
6	en	_	ADP	_	_	7	case	_	_
7	rose	_	NOUN	_	Number=Sing	5	nmod	_	SpaceAfter=No
8	"	_	PUNCT	_	_	5	punct	_	SpaceAfter=No
9	?	_	PUNCT	_	_	2	punct	_	SpaceAfter=No

>>> import deplacy
>>> deplacy.render(doc)
Who   PRON  <════════════╗   nsubj
plays VERB  ═══════════╗═╝═╗ ROOT
"     PUNCT <══════╗   ║   ║ punct
La    DET   <════╗ ║   ║   ║ det
vie   NOUN  ═══╗═╝═╝═╗<╝   ║ obj
en    ADP   <╗ ║     ║     ║ case
rose  NOUN  ═╝<╝     ║     ║ nmod
"     PUNCT <════════╝     ║ punct
?     PUNCT <══════════════╝ punct

>>> deplacy.serve(doc)
http://127.0.0.1:5000
```
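Because `print(multicombo.to_conllu(doc))` shows plain CoNLL-U text (ten tab-separated columns per word, plus `#` comment lines), the result can be post-processed without spaCy. The following is a minimal sketch that assumes only the standard CoNLL-U column order. `Token`, `parse_pairs`, `parse_conllu` and `SAMPLE` are hypothetical names and are not part of multicombo.

```py
from typing import Dict, List, NamedTuple


class Token(NamedTuple):
    """One word line of a CoNLL-U sentence (only the columns used here)."""
    id: int
    form: str
    upos: str
    feats: Dict[str, str]
    head: int
    deprel: str
    misc: Dict[str, str]


def parse_pairs(field: str) -> Dict[str, str]:
    """Parse a FEATS/MISC column such as 'Number=Sing|Person=3'; '_' means empty."""
    if field == "_":
        return {}
    return dict(item.split("=", 1) for item in field.split("|") if "=" in item)


def parse_conllu(text: str) -> List[Token]:
    tokens = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip comment lines and blank sentence separators
        cols = line.split("\t")
        if len(cols) != 10 or not cols[0].isdigit():
            continue  # skip multiword ranges ("1-2") and empty nodes ("1.1")
        tokens.append(Token(int(cols[0]), cols[1], cols[3], parse_pairs(cols[5]),
                            int(cols[6]), cols[7], parse_pairs(cols[9])))
    return tokens


# The CoNLL-U output of the example above, with tab-separated columns.
SAMPLE = "\n".join([
    '# text = Who plays "La vie en rose"?',
    "1\tWho\t_\tPRON\t_\tPronType=Int\t2\tnsubj\t_\tTranslit=who",
    "2\tplays\t_\tVERB\t_\tMood=Ind|Number=Sing|Person=3|Tense=Pres|VerbForm=Fin\t0\troot\t_\t_",
    '3\t"\t_\tPUNCT\t_\t_\t5\tpunct\t_\tSpaceAfter=No',
    "4\tLa\t_\tDET\t_\tDefinite=Def|Gender=Fem|Number=Sing|PronType=Art\t5\tdet\t_\tTranslit=la",
    "5\tvie\t_\tNOUN\t_\tGender=Fem|Number=Sing\t2\tobj\t_\t_",
    "6\ten\t_\tADP\t_\t_\t7\tcase\t_\t_",
    "7\trose\t_\tNOUN\t_\tNumber=Sing\t5\tnmod\t_\tSpaceAfter=No",
    '8\t"\t_\tPUNCT\t_\t_\t5\tpunct\t_\tSpaceAfter=No',
    "9\t?\t_\tPUNCT\t_\t_\t2\tpunct\t_\tSpaceAfter=No",
])

tokens = parse_conllu(SAMPLE)
by_id = {t.id: t for t in tokens}
for t in tokens:
    head = "ROOT" if t.head == 0 else by_id[t.head].form
    print(f"{t.form}\t{t.upos}\t{t.deprel} <- {head}")
```

Each word's HEAD column holds the ID of its governor, with 0 marking the root. `deplacy.render(doc)` draws this same structure as arcs.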

`multicombo.load(lang="xx")` loads a spaCy Language pipeline that combines [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) with the `spacy.lang.xx.MultiLanguage` tokenizer. Other language-specific tokenizers can be selected with the `lang` option, but several languages require additional packages:

* `lang="ja"` Japanese requires [SudachiPy](https://pypi.org/project/SudachiPy/) and [SudachiDict-core](https://pypi.org/project/SudachiDict-core/).

* `lang="th"` Thai requires [PyThaiNLP](https://pypi.org/project/pythainlp/).

* `lang="vi"` Vietnamese requires [pyvi](https://pypi.org/project/pyvi/).
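Before calling `multicombo.load(lang=...)` for one of these languages, you can check whether the extra tokenizer packages are importable. The following is a minimal sketch. The import names `sudachipy`, `sudachidict_core`, `pythainlp` and `pyvi` are assumed module names for the PyPI packages listed above, and `EXTRA_MODULES` and `missing_modules` are hypothetical helpers that are not part of multicombo.

```py
import importlib.util
from typing import Dict, List

# Hypothetical table: assumed import names of the extra packages per `lang`.
EXTRA_MODULES: Dict[str, List[str]] = {
    "ja": ["sudachipy", "sudachidict_core"],  # SudachiPy, SudachiDict-core
    "th": ["pythainlp"],                      # PyThaiNLP
    "vi": ["pyvi"],                           # pyvi
}


def missing_modules(lang: str) -> List[str]:
    """Return the extra modules needed for `lang` that cannot be found."""
    return [name for name in EXTRA_MODULES.get(lang, [])
            if importlib.util.find_spec(name) is None]


for lang in ("xx", "ja", "th", "vi"):
    missing = missing_modules(lang)
    # An empty list means the tokenizer dependencies for multicombo.load(lang=lang) are present.
    print(lang, "ok" if not missing else "missing: " + ", ".join(missing))
```

`find_spec` only locates a module and does not import it, so the check stays cheap even when a package is installed.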

## Installation for Linux

```sh
pip3 install allennlp@git+https://github.com/allenai/allennlp
pip3 install 'transformers<4.31'
pip3 install multicombo
```
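The Linux instructions pin `transformers<4.31`. To confirm that an existing environment respects this pin, compare the installed version numerically. A plain string comparison would wrongly rank `4.9` above `4.31`. The following is a simplified sketch that uses only the standard library (`importlib.metadata`, Python 3.8+). `release_tuple`, `below` and `installed_version` are hypothetical helpers. Pre-release and local-version suffixes are dropped rather than ordered as PEP 440 specifies, and the `packaging` library handles those cases fully.

```py
import re
from importlib import metadata  # standard library since Python 3.8
from typing import Optional, Tuple


def release_tuple(version: str) -> Tuple[int, ...]:
    """'4.30.2' -> (4, 30, 2); stops at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # e.g. the 'dev0' in '4.31.0.dev0'
        parts.append(int(match.group()))
        if match.end() != len(piece):
            break  # e.g. '0rc1' keeps 0 and drops the 'rc1' suffix
    return tuple(parts)


def below(version: str, limit: str) -> bool:
    """True if `version` is strictly lower than `limit` (numeric release parts only)."""
    a, b = release_tuple(version), release_tuple(limit)
    width = max(len(a), len(b))
    # Pad with zeros so that '4.31' and '4.31.0' compare as equal.
    return a + (0,) * (width - len(a)) < b + (0,) * (width - len(b))


def installed_version(dist: str) -> Optional[str]:
    try:
        return metadata.version(dist)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("transformers")
if version is None:
    print("transformers is not installed")
elif below(version, "4.31"):
    print(f"transformers {version} satisfies <4.31")
else:
    print(f"transformers {version} is too new for the <4.31 pin")
```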

## Installation for Cygwin64

Make sure the following Cygwin packages are installed: `python37-devel` `python37-pip` `python37-cython` `python37-numpy` `python37-cffi` `gcc-g++` `mingw64-x86_64-gcc-g++` `gcc-fortran` `git` `curl` `make` `cmake` `libopenblas` `liblapack-devel` `libhdf5-devel` `libfreetype-devel` `libuv-devel`. Then run:

```sh
curl -L https://raw.githubusercontent.com/KoichiYasuoka/UniDic-COMBO/master/cygwin64.sh | sh
pip3.7 install multicombo
```

## Installation for Jupyter Notebook (Google Colaboratory)

Try the [notebook](https://colab.research.google.com/github/KoichiYasuoka/MultiCOMBO/blob/main/multicombo.ipynb).

## Raw data

```json
{
  "_id": null,
  "home_page": "https://github.com/KoichiYasuoka/MultiCOMBO",
  "name": "multicombo",
  "maintainer": null,
  "docs_url": null,
  "requires_python": ">=3.6",
  "maintainer_email": null,
  "keywords": "NLP Multilingual",
  "author": "Koichi Yasuoka",
  "author_email": "yasuoka@kanji.zinbun.kyoto-u.ac.jp",
  "download_url": null,
  "platform": null,
  "bugtrack_url": null,
  "license": "GPL",
  "summary": "Multilingual POS-tagger and Dependency-parser",
  "version": "0.8.6",
  "project_urls": {
    "COMBO-pytorch": "https://gitlab.clarin-pl.eu/syntactic-tools/combo",
    "Homepage": "https://github.com/KoichiYasuoka/MultiCOMBO",
    "Source": "https://github.com/KoichiYasuoka/MultiCOMBO",
    "Tracker": "https://github.com/KoichiYasuoka/MultiCOMBO/issues"
  },
  "split_keywords": [
    "nlp",
    "multilingual"
  ],
  "urls": [
    {
      "comment_text": "",
      "digests": {
        "blake2b_256": "1e8aaf1e954c43c3ccdc17a8ed5d936e691675941594e61c115ceee1e0e35b9b",
        "md5": "b742619e8935ef42114a051fc09da393",
        "sha256": "ed024eac37c93d61ddc4bb777ee565815b4ad6defe1c7fdc4146d1234fda5936"
      },
      "downloads": -1,
      "filename": "multicombo-0.8.6-py3-none-any.whl",
      "has_sig": false,
      "md5_digest": "b742619e8935ef42114a051fc09da393",
      "packagetype": "bdist_wheel",
      "python_version": "py3",
      "requires_python": ">=3.6",
      "size": 16779,
      "upload_time": "2025-07-13T08:22:12",
      "upload_time_iso_8601": "2025-07-13T08:22:12.035114Z",
      "url": "https://files.pythonhosted.org/packages/1e/8a/af1e954c43c3ccdc17a8ed5d936e691675941594e61c115ceee1e0e35b9b/multicombo-0.8.6-py3-none-any.whl",
      "yanked": false,
      "yanked_reason": null
    }
  ],
  "upload_time": "2025-07-13 08:22:12",
  "github": true,
  "gitlab": false,
  "bitbucket": false,
  "codeberg": false,
  "github_user": "KoichiYasuoka",
  "github_project": "MultiCOMBO",
  "travis_ci": false,
  "coveralls": false,
  "github_actions": false,
  "lcname": "multicombo"
}
```