| Name | openai-python-api |

| Version | 0.0.8 |

| home_page | |

| Summary | OpenAI Python API |

| upload_time | 2024-02-09 13:36:03 |

| maintainer | |

| docs_url | None |

| author | |

| requires_python | >=3.9 |

| license | MIT License Copyright (c) 2023 Ilya Vereshchagin Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. |

| keywords | ai, api, artificial intelligence, chatgpt, dalle, dalle2, gpt, openai |

| VCS | |

| bugtrack_url | |

| requirements | No requirements were recorded. |

| Travis-CI | No Travis. |

| coveralls test coverage | No coveralls. |

# OpenAI Python API

This package provides a Python API for [OpenAI](https://openai.com/), based on the official [API documentation](https://openai.com/blog/openai-api). It wraps the original [OpenAI API](https://pypi.org/project/openai/) package.


## Installation

To install the package from [PyPI](https://pypi.org/project/openai-python-api/), run:

```bash
pip install openai_python_api
```

This package contains APIs for ChatGPT and DALL-E 2, but they are not fully covered yet. More functionality will be added in the future.

You need to be registered on [OpenAI](https://openai.com/) and have an API key to use this package. You can find your API key on the [API tokens](https://platform.openai.com/account/api-keys) page.

## ChatGPT

The `ChatGPT` class is for managing an instance of the ChatGPT model.

### Fast start

Here's a basic example of how to use the API:

```python
from openai_python_api import ChatGPT

# Use your API key and any organization you wish
chatgpt = ChatGPT(auth_token='your-auth-token', organization='your-organization', prompt_method=True)
response = chatgpt.str_chat("Hello, my name is John Connor!")
print(response)
```

This will produce the following output:

### Creating a personalized ChatGPT instance

You may need to create a custom ChatGPT instance to use the API. You can do this by passing the following parameters to the `ChatGPT` constructor:

- `model` (str): The name of the model. Default is 'gpt-4'. You can find the list of models in models.py or [here](https://platform.openai.com/docs/models/overview).

- `temperature` (float, optional): The temperature of the model's output. Default is 1.

- `top_p` (float, optional): The top-p value for nucleus sampling. Default is 1.

- `stream` (bool, optional): If True, the model will return intermediate results. Default is False.

- `stop` (str, optional): The stop sequence at which the model should stop generating further tokens. Default is None.

- `max_tokens` (int, optional): The maximum number of tokens in the output. Default is 1024.

- `presence_penalty` (float, optional): The penalty for new token presence. Default is 0.

- `frequency_penalty` (float, optional): The penalty for token frequency. Default is 0.

- `logit_bias` (map, optional): The bias for the logits before sampling. Default is None.

- `history_length` (int, optional): Length of history. Default is 5.

- `prompt_method` (bool, optional): The prompt method. If False, messages are used; otherwise, a prompt is used. Default is False.

- `system_settings` (str, optional): general instructions for chat. Default is None.

Most of these parameters mirror OpenAI model parameters. You can find more information about them in the [OpenAI API documentation](https://platform.openai.com/docs/api-reference/chat/create). To read or change them, access them directly, e.g. `chatgpt.model.temperature = 0.5` or `current_temperature = chatgpt.model.temperature`.

However, several parameters are specific to this API:

- `prompt_method` is a stub for sending direct input to the model without using "messages" and without managing or storing them. It may be useful if you need to trigger the chat only once, or if you don't need to pass extra messages and instructions to the chat.

- `system_settings` stores global instructions for the bot, such as how to behave, how to act, and how to format output. Refer to [Best practices](https://platform.openai.com/docs/guides/gpt-best-practices/tactic-ask-the-model-to-adopt-a-persona).

- `history_length` sets how many messages of history are kept and passed to the model in a single request. The default is 5, but you can change it if you need to keep more messages. The more messages you pass, the more expensive the request will be.

```python
chatgpt = ChatGPT(auth_token='your-auth-token', organization='your-organization',
                  model='chatgpt3.5', history_length=10)
chatgpt.model.temperature = 0.5
chatgpt.model.top_p = 0.9
```
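To make the cost trade-off of `history_length` more concrete, here is a minimal sketch of how a history window could be applied before a request. It is an illustration only, not the library's actual implementation: `trim_history` is a hypothetical helper, and it assumes that the system instruction is always kept while only the most recent messages are sent.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def trim_history(messages: List[Message], history_length: int,
                 system_settings: Optional[str] = None) -> List[Message]:
    """Return the messages to send: optional system message + last N messages.

    Hypothetical helper for illustration; not part of openai_python_api.
    """
    # Guard against history_length == 0, because messages[-0:] would keep everything.
    recent = messages[-history_length:] if history_length > 0 else []
    if system_settings:
        return [{"role": "system", "content": system_settings}] + recent
    return list(recent)


history = [{"role": "user", "content": f"message {i}"} for i in range(8)]
payload = trim_history(history, history_length=5, system_settings="Be brief.")
print(len(payload))  # 6: one system message plus the five most recent messages
```

A larger window gives the model more context, but every extra message is sent again with each request.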

Here is the difference between `prompt_method=True` and `prompt_method=False` with message history:

### Managing chats

If you plan to serve several users or chats, you can pass the following parameters when creating a `ChatGPT` instance, or set them later:

- `user` (str, optional): The user ID. Default is ''. This field identifies the global user of the model. Usually, it's the owner of the ChatGPT instance.

- `current_chat` (str, optional): The chat to use by default. Default is None. This field identifies the current chat. If the user uses a chat, or the chat has not been created yet, it is created and stored in this value.

- `chats` (dict, optional): A dictionary containing all chats. Default is None. It's a placeholder for all chats. You can set this value to any dict to restore, replace, or flush the chat history of the ChatGPT instance.

So, in general, you can use a `ChatGPT` instance as a chatbot for one user or for several users. To serve several users, create a `ChatGPT` instance for each user and store it somewhere. You can use the `chats` parameter to keep all chats in one place, or you can store them in a database or in a file. It's up to you.
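As a rough illustration of keeping chats for several users in one place, the sketch below uses a nested in-memory dictionary. The layout (user ID, then chat name, then a list of messages) and the `remember` helper are assumptions made for this example; the structure the library uses for `chats` may differ.

```python
from collections import defaultdict
from typing import Dict, List

# user id -> chat name -> list of messages (hypothetical layout for illustration)
ChatStore = Dict[str, Dict[str, List[dict]]]

store: ChatStore = defaultdict(lambda: defaultdict(list))


def remember(user: str, chat: str, role: str, content: str) -> None:
    """Append one message to the given user's chat, creating it on first use."""
    store[user][chat].append({"role": role, "content": content})


remember("alice", "support", "user", "My order is late.")
remember("alice", "support", "assistant", "Let me check that for you.")
remember("bob", "default", "user", "Hello!")

print(len(store["alice"]["support"]))  # 2
print(sorted(store))                   # ['alice', 'bob']
```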

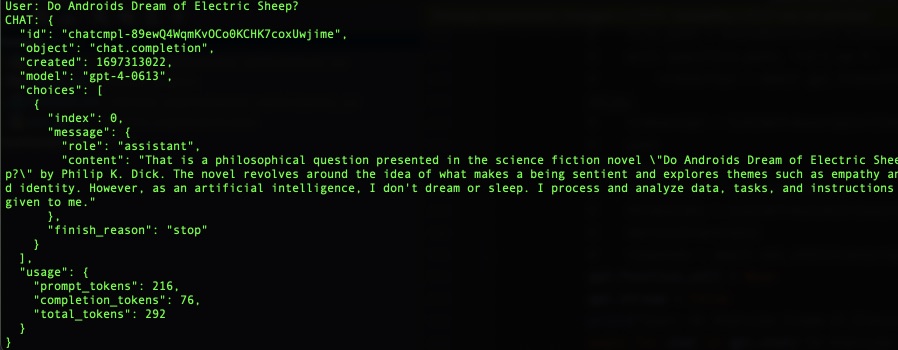

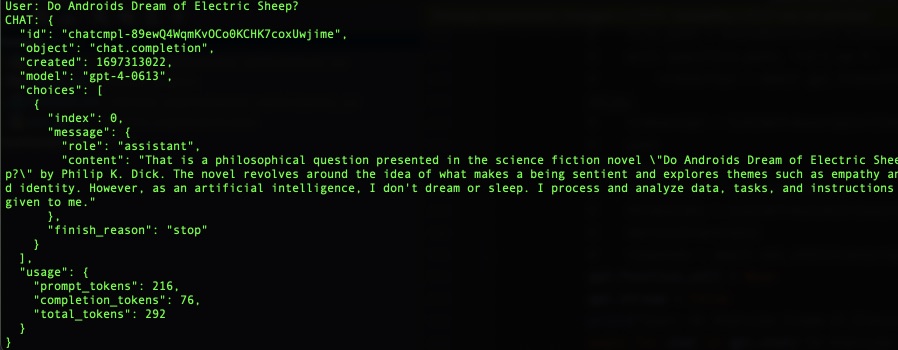

### Obtaining enhanced responses

In most cases you need the model's response as a string (which you can use directly or format in your frontend). In some rare cases, however, you may need the raw answer from the model. For this, use the `process_chat` method. It returns a `ChatCompletion` object, which contains all information about the model's response, so you can work with the raw response or format it yourself. Moreover, it's the only way to obtain several choices at once (e.g. when you need 4 different answers from the model).

```python
chatgpt = ChatGPT(auth_token='your-auth-token', organization='your-organization', choices=4)
chatgpt.chat({"role": "user", "content": "Do Androids Dream of Electric Sheep?"})
chatgpt.process_chat({"role": "user", "content": "What use was time to those who'd soon achieve Digital Immortality?"})
```
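When several choices are requested, each one arrives as a separate entry in the response. The sketch below works on a plain dictionary shaped like the Chat Completions JSON response described in the OpenAI API reference (a `choices` list whose items carry an `index` and a `message`). Whether `process_chat` exposes this data as attributes or as keys is not stated here, so treat the access pattern as an assumption; `extract_answers` is a hypothetical helper.

```python
from typing import Any, Dict, List


def extract_answers(response: Dict[str, Any]) -> List[str]:
    """Collect the text of every choice, ordered by the choice index."""
    choices = sorted(response.get("choices", []), key=lambda c: c["index"])
    return [choice["message"]["content"] for choice in choices]


# Hand-written stand-in for a response with two choices (not real model output).
sample_response = {
    "choices": [
        {"index": 1, "message": {"role": "assistant", "content": "Second answer"}},
        {"index": 0, "message": {"role": "assistant", "content": "First answer"}},
    ]
}

print(extract_answers(sample_response))  # ['First answer', 'Second answer']
```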

### Service methods

You can also use a ChatGPT instance to transcribe an audio file into text or to translate text to English.

```python
# you may use response_format with the following values: json, text, srt, verbose_json, or vtt. Default is text.
transcripted_string = chatgpt.transcript('audiofile.mp3', language='russian', response_format='text')
translated_string = chatgpt.translate(transcripted_string, response_format='json')
```

For details refer to [OpenAI API documentation](https://platform.openai.com/docs/api-reference/audio) for Audio topic.

### Store data

You may also need to store your settings and chats. You can use the following methods:

```python
settings = chatgpt.dump_settings()  # dumps ChatGPT settings to JSON
chats = chatgpt.dump_chats()  # dumps all chats to JSON
chat = chatgpt.dump_chat('my_secure_chat')  # dumps chat to JSON
```
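Once dumped, the data still has to be persisted somewhere. The following sketch writes a stand-in value to a JSON file and reads it back using only the standard library. It assumes the dump is a JSON-serializable Python object; if the dump methods already return a JSON string, write that string to the file directly instead of calling `json.dumps` again. `save_json` and `load_json` are hypothetical helpers.

```python
import json
import tempfile
from pathlib import Path

# Stand-in for the value returned by chatgpt.dump_chats(); the real structure may differ.
chats = {"my_secure_chat": [{"role": "user", "content": "Hello"}]}


def save_json(data, path: Path) -> None:
    """Write JSON-serializable data to disk as UTF-8."""
    path.write_text(json.dumps(data, ensure_ascii=False, indent=2), encoding="utf-8")


def load_json(path: Path):
    """Read data previously written by save_json."""
    return json.loads(path.read_text(encoding="utf-8"))


# A temporary directory keeps the example from leaving files behind.
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "chats.json"
    save_json(chats, target)
    restored = load_json(target)

print(restored == chats)  # True
```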

### Using functions

To empower your ChatGPT, you may want to use _functions_. Functions are a way to extend the functionality of the model. You can use functions to get some data from the model, or to change the model's behavior. To use them, pass several parameters to the `ChatGPT` constructor or set its attributes:

```python
gpt_functions = [{
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "The city and state, e.g. San Francisco, CA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}]
chatgpt.functions = gpt_functions
chatgpt.function_dict = {"get_current_weather": obtain_weather}  # this is your function somewhere in your code
chatgpt.function_call = "auto"  # "none" to disable, or use a dict like {"name": "my_function"} to call one manually
```

Now, when you ask ChatGPT about something, it will return related info using your function.

For details, refer to the [OpenAI API documentation](https://platform.openai.com/docs/guides/gpt/function-calling) on functions or to [my article](https://wwakabobik.github.io/2023/10/qa_ai_practices_used_for_qa/) (as an example of usage).
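The role of a name-to-callable mapping such as `function_dict` can be sketched as follows. This is not the library's internal code: `dispatch` is a hypothetical helper, `obtain_weather` is a placeholder that returns canned data, and the `call` dictionary is a hand-written stand-in for the `function_call` part of a model reply, where the arguments arrive as a JSON string.

```python
import json
from typing import Any, Callable, Dict


def obtain_weather(location: str, unit: str = "celsius") -> Dict[str, Any]:
    """Placeholder that returns canned data instead of calling a weather service."""
    return {"location": location, "temperature": 21, "unit": unit}


function_dict: Dict[str, Callable[..., Any]] = {"get_current_weather": obtain_weather}


def dispatch(function_call: Dict[str, str]) -> Any:
    """Look up the requested function by name and call it with the decoded arguments."""
    name = function_call["name"]
    if name not in function_dict:
        raise KeyError(f"Model requested an unknown function: {name}")
    arguments = json.loads(function_call["arguments"])  # arguments arrive as a JSON string
    return function_dict[name](**arguments)


# Hand-written stand-in for the function_call part of a model reply.
call = {"name": "get_current_weather", "arguments": '{"location": "Boston, MA"}'}
print(dispatch(call))
# {'location': 'Boston, MA', 'temperature': 21, 'unit': 'celsius'}
```

Rejecting unknown names explicitly keeps a mistyped or unexpected function name from failing silently.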

## DALL-E

The `DALLE` class is for managing an instance of the DALL-E models. You can generate or edit images using this class.

### Fast start

Here's a basic example of how to use the API:

```python
from openai_python_api import DALLE

# Use your API key and any organization you wish
dalle = DALLE(auth_token='your-auth-token', organization='your-organization')
images = dalle.create_image_url("cybernetic cat")  # will return list of urls to images
```

### Creating a personalized DALL-E instance

You may need to create a custom DALL-E instance to use the API. You can do this by passing the following parameters to the `DALLE` constructor or just set them later:

- `default_count` (int): Default count of images to produce. Default is 1.

- `default_size` (str): Default dimensions for output images. Default is "512x512". "256x256" and "1024x1024" are also available.

- `default_file_format` (str): Default file format. Optional. Default is 'PNG'.

- `user` (str, optional): The user ID. Default is ''.

```python
dalle = DALLE(auth_token='your-auth-token', organization='your-organization',
              default_count=3, default_size="256x256")
dalle.default_file_format = 'JPG'
```

### Methods

You can use the following methods to generate images:

```python
image_bytes = dalle.create_image("robocop")  # will return list of images (dict format).
image_dict = dalle.create_image_data("night city")  # will return list of images (bytes format).
```

You can save a bytes image to a file:

```python
# if file format is None, it will be taken from class attribute
image_file = dalle.save_image(image=image_bytes, filename="night_city", file_format=None)  # will return filename
```
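For readers who want to see what saving with a fallback file format amounts to, here is a minimal standard-library sketch. It is not the library's `save_image`: `save_bytes` and `DEFAULT_FILE_FORMAT` are hypothetical names, and the function only writes the bytes under the chosen extension without converting between image formats.

```python
import tempfile
from pathlib import Path
from typing import Optional

DEFAULT_FILE_FORMAT = "PNG"  # mirrors the documented default, used when file_format is None


def save_bytes(image: bytes, filename: str, file_format: Optional[str] = None,
               directory: Path = Path(".")) -> Path:
    """Write raw image bytes to '<filename>.<format>' and return the resulting path."""
    extension = (file_format or DEFAULT_FILE_FORMAT).lower()
    target = directory / f"{filename}.{extension}"
    target.write_bytes(image)
    return target


fake_image = b"\x89PNG\r\n\x1a\n"  # only the PNG signature, enough to demonstrate writing
with tempfile.TemporaryDirectory() as tmp:
    saved = save_bytes(fake_image, "night_city", directory=Path(tmp))
    print(saved.name)  # night_city.png
    size = saved.stat().st_size
```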

You can use the following methods to edit images:

```python
with open("robocop.jpg", "rb") as image_file:
    with open("mask.png", "rb") as mask_file:
        edited_image1 = dalle.edit_image_from_file(file=image_file,
                                                   prompt="change color to pink",
                                                   mask=mask_file)  # returns bytes
# or use url
edited_image2 = dalle.edit_image_from_url(url=night_city_url,
                                          mask_url=mask_image_url,
                                          prompt="make it daylight")  # returns bytes
```

You can use the following methods to create variations of images:

```python
with open("robocop.jpg", "rb") as image_file:
    variated_image1 = dalle.create_variation_from_file(file=image_file)  # returns bytes
# or use url
variated_image2 = dalle.create_variation_from_url(url=night_city_url)  # returns bytes
```

## Additional notes

Currently, the library supports only asynchronous requests, so you must use async/await syntax to call the API and wait for the model's response, which may take some time. Support for synchronous requests will be added in the future.

For example:

```python
import asyncio
from openai_python_api import ChatGPT


async def main():
    chatgpt = ChatGPT(auth_token='your-auth-token', organization='your-organization')
    response = await chatgpt.str_chat("What are the 3 rules of AI?")
    print(response)

asyncio.run(main())
```
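One practical benefit of an async-only API is that several requests can wait for their responses at the same time. The sketch below shows the pattern with `asyncio.gather`; `fake_str_chat` is a stand-in coroutine that sleeps briefly instead of contacting a model, so the example runs without an API key. Replacing it with a real awaitable call is left as an assumption.

```python
import asyncio
from typing import List


async def fake_str_chat(prompt: str) -> str:
    """Stand-in for an awaitable chat call; sleeps briefly instead of contacting a model."""
    await asyncio.sleep(0.01)
    return f"echo: {prompt}"


async def ask_all(prompts: List[str]) -> List[str]:
    """Run all requests concurrently; results come back in the order of the prompts."""
    return await asyncio.gather(*(fake_str_chat(p) for p in prompts))


answers = asyncio.run(ask_all(["first", "second", "third"]))
print(answers)  # ['echo: first', 'echo: second', 'echo: third']
```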

Please refer to the [asyncio documentation](https://docs.python.org/3/library/asyncio.html) for more information, and to [my article](https://wwakabobik.github.io/2023/09/ai_learning_to_hear_and_speak/) about TTS and transcription for a look at possible pitfalls.

## Contributing

We welcome contributions! Please see our [Contributing Guidelines](CONTRIBUTING.md) for more details.

## License

This project is licensed under the MIT License - see the [LICENSE](LICENSE) file for details.

## Donations

If you like this project, you can support it by donating via [DonationAlerts](https://www.donationalerts.com/r/rocketsciencegeek).

Raw data

{

"_id": null,

"home_page": "",

"name": "openai-python-api",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.9",

"maintainer_email": "Iliya Vereshchagin <i.vereshchagin@gmail.com>",

"keywords": "ai,api,artificial intelligence,chatgpt,dalle,dalle2,gpt,openai",

"author": "",

"author_email": "Iliya Vereshchagin <i.vereshchagin@gmail.com>",

"download_url": "https://files.pythonhosted.org/packages/b7/a7/46a6564a81a3adbaa3784783b750e5c790e864f590a9eb7330a3a570a29d/openai_python_api-0.0.8.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "MIT License Copyright (c) 2023 Ilya Vereshchagin Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.",

"summary": "OpenAI Python API",

"version": "0.0.8",

"project_urls": {

"Bug Tracker": "https://github.com/wwakabobik/openai_api/issues",

"Homepage": "https://github.com/wwakabobik/openai_api"

},

"split_keywords": [

"ai",

"api",

"artificial intelligence",

"chatgpt",

"dalle",

"dalle2",

"gpt",

"openai"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "3ee98389c8c4ebe1f5cf85a58baa211a648ba080ec53ef09ddb12854ab5de9fa",

"md5": "8fc896257173abb8b89c2ee6708731b3",

"sha256": "337ffa9e1109589af9a187d238163ed5f5960745ef5aedd17c28852c9ee2973e"

},

"downloads": -1,

"filename": "openai_python_api-0.0.8-py3-none-any.whl",

"has_sig": false,

"md5_digest": "8fc896257173abb8b89c2ee6708731b3",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.9",

"size": 19983,

"upload_time": "2024-02-09T13:35:56",

"upload_time_iso_8601": "2024-02-09T13:35:56.603038Z",

"url": "https://files.pythonhosted.org/packages/3e/e9/8389c8c4ebe1f5cf85a58baa211a648ba080ec53ef09ddb12854ab5de9fa/openai_python_api-0.0.8-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "b7a746a6564a81a3adbaa3784783b750e5c790e864f590a9eb7330a3a570a29d",

"md5": "5e29e67cc7c337d6b052436723c9926d",

"sha256": "9df3f6865808ea7d3bff68fef0807dcbf05a35ba91b9a291477949d4bdbe2004"

},

"downloads": -1,

"filename": "openai_python_api-0.0.8.tar.gz",

"has_sig": false,

"md5_digest": "5e29e67cc7c337d6b052436723c9926d",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.9",

"size": 1631621,

"upload_time": "2024-02-09T13:36:03",

"upload_time_iso_8601": "2024-02-09T13:36:03.719264Z",

"url": "https://files.pythonhosted.org/packages/b7/a7/46a6564a81a3adbaa3784783b750e5c790e864f590a9eb7330a3a570a29d/openai_python_api-0.0.8.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-02-09 13:36:03",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "wwakabobik",

"github_project": "openai_api",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [],

"lcname": "openai-python-api"

}