# PyListener

PyListener is a tool for near real-time voice processing and speech-to-text conversion. It can be anywhere from fairly fast to slightly sluggish, depending on the compute and memory available in the environment. I suggest using it where a delay of about 1 second is acceptable, e.g. AI assistants, voice command processing, etc.

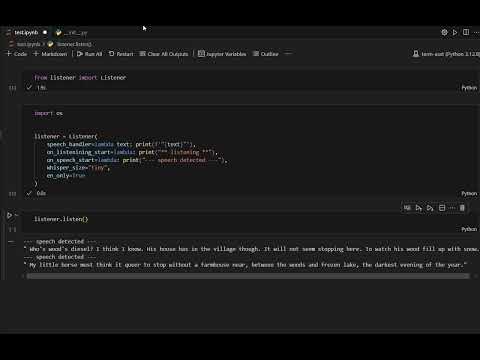

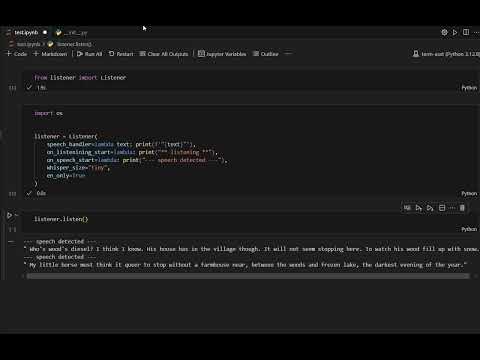

[Watch the video on YouTube](https://www.youtube.com/watch?v=SEFm8rJRg_A)

## Installation

Use the package manager [pip](https://pip.pypa.io/en/stable/) to install PyListener.

```bash
pip install py-listener
```

## Basic Usage

```python
from listener import Listener

# Print what the speaker is saying; see the constructor
# parameters below for more features.
listener = Listener(speech_handler=print)

try:
    # start listening
    listener.listen(block=True)
finally:
    # stop listening and release held resources, even on errors
    listener.close()

# starts listening again
# listener.listen()
```

## Documentation

There is only one class in the package, the `Listener`.

After instantiation, it starts collecting audio in `n`-second chunks, where `n` is set by the `time_window` argument. It checks whether each chunk contains human voice: if it does, the chunk is kept for later processing (conversion to text or any other user-defined processing); otherwise the chunk is discarded.
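The collect-or-discard behaviour described above can be pictured with a small simulation. This is only a sketch of the idea, not PyListener's actual implementation: `simulate`, `is_loud`, and the list-of-floats chunks are hypothetical stand-ins (the library works on `numpy.ndarray` chunks and uses Silero for voice detection by default), and ending an utterance on the first silent chunk is an assumption.

```python
from typing import Callable, Iterable, List

Chunk = List[float]  # hypothetical stand-in for a numpy.ndarray of samples


def is_loud(chunk: Chunk, threshold: float = 0.1) -> bool:
    """Toy voice detector: mean absolute amplitude above a threshold."""
    return sum(abs(s) for s in chunk) / len(chunk) > threshold


def simulate(
    chunks: Iterable[Chunk],
    has_voice: Callable[[Chunk], bool],
    handler: Callable[[List[Chunk]], None],
) -> None:
    """Collect voiced chunks and hand them over when a silent chunk arrives."""
    voiced: List[Chunk] = []
    for chunk in chunks:
        if has_voice(chunk):
            voiced.append(chunk)  # keep for later processing
        elif voiced:
            handler(voiced)  # assumed: silence ends the utterance
            voiced = []
        # a silent chunk with nothing collected is simply discarded
    if voiced:
        handler(voiced)  # flush whatever is left at the end of the stream


utterances: List[List[Chunk]] = []
stream = [[0.0, 0.0], [0.5, -0.4], [0.3, 0.6], [0.0, 0.01], [0.9, 0.8]]
simulate(stream, is_loud, utterances.append)
print(len(utterances))  # number of utterances found in the stream
```

In this toy stream the leading silent chunk is dropped, the two loud chunks form one utterance, and the final loud chunk forms a second one.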

### Constructor parameters

- `speech_handler`: a function that is called with the text segments extracted from the human voice in the recorded audio as its only argument: `speech_handler(speech: List[str])`.

- `on_listening_start`: a parameterless function that is called right after the Listener object starts collecting audio.

- `time_window`: an integer that specifies the chunk size of the collected audio in seconds, `2` is the default.

- `no_channels`: the number of audio channels to be used for recording, `1` is the default.

- `has_voice`: a function that is called on each recorded audio chunk to determine whether it contains human voice. It receives the chunk as a `numpy.ndarray`, its only argument: `has_voice(chunk: numpy.ndarray)`. [Silero](https://github.com/snakers4/silero-vad) is used by default.

- `voice_handler`: a function that is used to process [an utterance](https://en.wikipedia.org/wiki/Utterance), i.e. a continuous segment of speech. It receives a list of audio chunks as its only argument: `voice_handler(List[numpy.ndarray])`.

- `min_speech_ms`: the minimum number of milliseconds of voice activity that counts as speech. The default is `0`, which means all human voice activity is considered valid speech, no matter how brief.

- `speech_prob_thresh`: the minimum voice-activity probability at which a sound is considered human voice. It must be between `0` and `1`; the default is `0.5`.

- `whisper_size`: a size for the underlying whisper model, options: `tiny`, `tiny.en`, `base`, `base.en`, `small`, `small.en`, `medium`, `medium.en`, `large`, `turbo`.

- `whisper_kwargs`: keyword arguments for the underlying `faster_whisper.WhisperModel` instance; see [faster_whisper](https://github.com/SYSTRAN/faster-whisper) for all available arguments.

- `compute_type`: compute type for the whisper model, options: `int8`, `int8_float32`, `int8_float16`, `int8_bfloat16`, `int16`, `float16`, `bfloat16`, `float32`. Check the [OpenNMT docs](https://opennmt.net/CTranslate2/quantization.html) for an up-to-date list of options.

- `en_only`: a boolean flag indicating whether the voice detection and speech-to-text models should use [half precision](https://en.wikipedia.org/wiki/Half-precision_floating-point_format) arithmetic to save memory and reduce latency. The default is `True` if CUDA is available. It has no effect on CPUs at the time of this writing, so it is set to `False` by default in CPU environments.

- `device`: the device on which the speech detection and speech-to-text models run. The default is `cuda` if available, otherwise `cpu`.

- `show_download`: controls whether progress bars are shown while downloading the whisper models, default is `True`.
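To illustrate the callback signatures listed above, here is a minimal sketch of two handlers. The names `on_speech`, `on_start`, and `transcript` are hypothetical and not part of PyListener; only the parameter names in the commented-out constructor call come from the list above. The handlers are plain functions, so they can be tried without a microphone.

```python
from typing import List

transcript: List[str] = []  # hypothetical in-memory log of everything heard


def on_speech(speech: List[str]) -> None:
    """speech_handler: receives the text segments of one utterance."""
    text = " ".join(segment.strip() for segment in speech if segment.strip())
    if text:
        transcript.append(text)


def on_start() -> None:
    """on_listening_start: takes no arguments."""
    print("listening...")


# Wiring the callbacks into the listener would look roughly like this
# (parameter names are taken from the list above):
#
#     from listener import Listener
#     listener = Listener(
#         speech_handler=on_speech,
#         on_listening_start=on_start,
#         time_window=2,
#         whisper_size="base.en",
#     )

# The handler can be exercised directly with a list of text segments:
on_speech([" turn on", " the lights "])
print(transcript)
```

Stripping each segment before joining is a defensive choice in this sketch; it makes no claim about how the transcription segments are actually formatted.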

### Methods

- **`listen`**: starts recording audio and

  - if a `voice_handler` function is passed, calls it with the accumulated human voice, `voice_handler(List[numpy.ndarray])`.

  - otherwise, keeps recording human voice in `time_window`-second chunks until a `time_window`-second silence is detected, at which point it converts the accumulated voice to text and calls the given `speech_handler` with the transcription as the only argument. The transcription is a list of text segments, `speech_handler(List[str])`.

- **`pause`**: pauses listening without clearing up held resources.

- **`resume`**: resumes listening if it was paused.

- **`close`**: stops recording audio and frees the resources held by the listener.

  - **NOTE: it is imperative to call `close` when the listener is no longer required. On CPU-only systems, audio is sent to the model running in a child process through shared memory objects; if `close` is not called, those objects may not be cleared, resulting in memory leaks. `close` also terminates the child process.**
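One way to make sure `close` always runs is `contextlib.closing` from the standard library, which calls `close()` when the `with` block exits, even if an exception is raised. The sketch below uses a hypothetical `FakeListener` stand-in so that it runs without audio hardware; it only mirrors the `listen` and `close` method names and says nothing about how the real class behaves internally.

```python
from contextlib import closing


class FakeListener:
    """Hypothetical stand-in exposing the same listen/close method names."""

    def __init__(self) -> None:
        self.closed = False

    def listen(self, block: bool = False) -> None:
        raise RuntimeError("simulated failure while listening")

    def close(self) -> None:
        self.closed = True


fake = FakeListener()
try:
    # closing() calls fake.close() when the block exits, error or not
    with closing(fake) as listener:
        listener.listen(block=True)
except RuntimeError:
    pass

print(fake.closed)
```

With the real class, the same pattern would presumably read `with closing(Listener(speech_handler=print)) as listener: listener.listen(block=True)`.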

### Tasks

- [ ] use shared memory to pass audio to the child process on POSIX

  - issue: the resource tracker keeps tracking the shared memory object even when instructed not to (it is told not to because the library's own code clears the shared memory)

- [ ] in `core.py -> choose_whisper_model()` method, allow larger models as `compute_type` gets smaller, the logic is too rigid at the moment.

## Contributing

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

## License

[MIT](https://choosealicense.com/licenses/mit/)
