# Table Faker

**tablefaker** is a versatile Python package for generating realistic, synthetic table data, such as test data for software development. You define your tables in an intuitive YAML schema, and tablefaker generates the data.

## Key Features

- **Schema Definition**: Define your table schema using a simple YAML file, specifying column names, data types, fake data generation logic, and relationships.

- **Faker & Randomization**: Use the **Faker** library and random data generation to create authentic-looking synthetic data.

- **Multiple Table Support**: Create multiple tables with different schemas and data generation logic in a single YAML file, and define primary key/foreign key relationships between them.

- **Multiple Output Formats**:
  - Pandas DataFrame
  - SQL insert script
  - CSV
  - Parquet
  - JSON
  - Excel
  - Delta Lake

## Installation

```bash
pip install tablefaker
```

Requires Python 3.9 or later.

## YAML schema reference

```yaml
version: 1

config:
  locale: <locale_string> # e.g. en_US
  seed: <integer> # deterministic seed applied to random, NumPy, and Faker
  infer_entity_attrs_by_name: <true|false> # enable `data: auto` name inference
  python_import:
    - <module_name> # modules to import (expose submodules via import)
  community_providers:
    - <community_provider_name> # module.ClassName or package.provider

tables:
  - table_name: <table_name>
    row_count: <integer>
    start_row_id: <integer>
    export_file_count: <integer>
    export_file_row_count: <integer>

    columns:
      - column_name: <column_name> # string (required)
        data: <package>.<function_name>() | <hardcoded_value> | <column_reference> | auto | None
        # Examples of allowed data forms:
        #   <package>.<function_name>() -> faker or other imported function call
        #   <hardcoded_value>           -> numeric or r"string"
        #   <column_reference>          -> reference another column by name (first_name + " " + last_name)
        #   auto                        -> resolved to copy_from_fk(...) when infer_entity_attrs_by_name is true
        #   None                        -> explicit NULL
        #   multi-line Python block using | (must return a value)
        is_primary_key: <true|false>
        type: string | int32 | int64 | float | boolean # a NumPy dtype object, a pandas ExtensionDtype, or a Python type
        null_percentage: <float between 0.0 and 1.0>
        description: <string>

# Expression evaluation context
# Available variables inside `data` expressions:
#   fake, random, datetime, date, timedelta, time, timezone, tzinfo, UTC, MINYEAR, MAXYEAR, math, string, row_id
#
# Special helper functions:
#   foreign_key(parent_table, parent_column, distribution="uniform", param=None, parent_attr=None, weights=None)
#   copy_from_fk(parent_table, fk_column, parent_attr)
#
# Multi-line Python block:
#   Use the YAML block scalar `|` and include a final `return <value>` statement.
```

Notes:

- Parent tables must be defined before child tables.

- Two-phase evaluation resolves columns that reference other columns correctly.

- For a full example, see [`tests/test_table.yaml`](tests/test_table.yaml).
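The `null_percentage` field is easiest to picture as a per-value coin flip. The sketch below assumes each generated value is independently replaced with NULL (`None`) with that probability, so the share of nulls is approximate rather than exact. `apply_null_percentage` is a hypothetical helper for illustration, not part of tablefaker's API.

```python
import random

def apply_null_percentage(values, null_percentage, rng):
    """Replace each value with None with probability `null_percentage` (0.0 to 1.0)."""
    if not 0.0 <= null_percentage <= 1.0:
        raise ValueError("null_percentage must be between 0.0 and 1.0")
    # rng.random() returns a float in [0.0, 1.0), so 0.0 never nulls and 1.0 always nulls
    return [None if rng.random() < null_percentage else value for value in values]

rng = random.Random(0)
genders = [rng.choice(["male", "female"]) for _ in range(1000)]
genders_with_nulls = apply_null_percentage(genders, 0.5, rng)
print(sum(v is None for v in genders_with_nulls), "of", len(genders_with_nulls), "values are NULL")
```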

## Sample Yaml File Minimal

```yaml
tables:
  - table_name: person
    columns:
      - column_name: id
        data: row_id
      - column_name: first_name
        data: fake.first_name()
      - column_name: last_name
        data: fake.last_name()
```

## Sample Yaml File Advanced

```yaml
version: 1
config:
  locale: en_US
  python_import:
    - dateutil
    - faker-education # custom faker provider
  community_providers:
    - faker_education.SchoolProvider # custom faker provider
tables:
  - table_name: person
    row_count: 10
    start_row_id: 101 # you can set the row_id starting point
    export_file_count: 3 # you can set the export file count (takes precedence over export_file_row_count)
    columns:
      - column_name: id
        data: row_id # row_id is a built-in variable
        is_primary_key: true # define a primary key to use as a foreign key
      - column_name: first_name
        data: fake.first_name() # faker function
        type: string
      - column_name: last_name
        data: fake.last_name()
        type: string
      - column_name: full_name
        data: first_name + " " + last_name # use other columns to generate a new column
        is_primary_key: true
      - column_name: age
        data: fake.random_int(18, 90)
        type: int32
      - column_name: street_address
        data: fake.street_address()
      - column_name: city
        data: fake.city()
      - column_name: state_abbr
        data: fake.state_abbr()
      - column_name: postcode
        data: fake.postcode()
      - column_name: gender
        data: random.choice(["male", "female"]) # the random module is available by default
        null_percentage: 0.5 # share of values set to NULL (0.0 to 1.0)
      - column_name: left_handed
        data: fake.pybool()
      - column_name: today
        data: datetime.today().strftime('%Y-%m-%d') # datetime is available by default
      - column_name: easter_date
        data: dateutil.easter.easter(2025).strftime('%Y-%m-%d') # requires dateutil in python_import
      - column_name: discount_eligibility # custom python logic
        data: |
          if age < 25 or age > 60:
            return True
          else:
            return False
  - table_name: employee
    row_count: 10
    export_file_row_count: 60 # you can set the export file row count
    columns:
      - column_name: id
        data: row_id
      - column_name: person_id
        data: foreign_key("person", "id") # get a primary key from another table
      - column_name: full_name
        data: foreign_key("person", "full_name")
      - column_name: hire_date
        data: fake.date_between()
        type: string
      - column_name: title
        data: random.choice(["engineer", "senior engineer", "principal engineer", "director", "senior director", "manager", "vice president", "president"])
      - column_name: salary
        data: None # NULL
        type: float
      - column_name: height
        data: r"170 cm" # string
      - column_name: weight
        data: 150 # number
      - column_name: school
        data: fake.school_name() # community provider function
      - column_name: level
        data: fake.school_level() # community provider function
```

[full yml example](tests/test_table.yaml)

## Configuration: Determinism & Attribute Inference

### Seed (deterministic)

```yaml
config:
  locale: en_US
  seed: 42 # Optional: for reproducible datasets
```

- Setting `config.seed` makes runs deterministic: the same seed and same YAML produce identical outputs.

- The seed is applied to Python's `random`, NumPy (when available), and the `Faker` instance used by tablefaker.

- Use cases: repeatable tests, CI snapshots, and reproducible examples.
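To see why a fixed seed gives identical output, here is a stdlib-only sketch that draws every value from one seeded `random.Random` instance. `generate_rows` is a hypothetical helper; tablefaker applies `config.seed` for you, and if you use Faker directly, `Faker.seed(...)` or `fake.seed_instance(...)` play the same role.

```python
import random

def generate_rows(seed, row_count=5):
    """Build toy person rows from a dedicated RNG so the output depends only on `seed`."""
    rng = random.Random(seed)
    return [
        {
            "id": row_id,
            "age": rng.randint(18, 90),
            "gender": rng.choice(["male", "female"]),
        }
        for row_id in range(1, row_count + 1)
    ]

# The same seed reproduces the same dataset on every run.
assert generate_rows(42) == generate_rows(42)
```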

### Attribute name inference

```yaml
config:
  infer_entity_attrs_by_name: true # Optional: auto-infer FK attributes
```

- When enabled, columns named with the pattern `<fkprefix>_<attr>` will be automatically bound to the referenced parent row if a sibling `<fkprefix>_id` exists and is a foreign key.

- Example: `customer_email` will be auto-resolved from the row referenced by `customer_id` (if `customer_id` is a FK to `customers.customer_id`).
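The naming rule above can be sketched as a small resolver. This is a hypothetical helper, not tablefaker's code: it assumes foreign-key columns end in `_id`, strips that suffix to get the prefix, and returns the `(parent_table, fk_column, parent_attr)` triple a `copy_from_fk(...)` call would use. When several prefixes match, it prefers the longest one, which is an assumption about how ambiguity might be resolved.

```python
def infer_auto_column(column_name, fk_columns):
    """Resolve an `auto` column to (parent_table, fk_column, parent_attr), or None.

    fk_columns maps each foreign-key column in the same table (e.g. "customer_id")
    to the parent table it references (e.g. "customers").
    """
    best = None
    for fk_column, parent_table in fk_columns.items():
        if not fk_column.endswith("_id"):
            continue
        prefix = fk_column[: -len("_id")] + "_"
        if column_name.startswith(prefix) and column_name != fk_column:
            attr = column_name[len(prefix):]
            # Prefer the longest matching prefix, e.g. "billing_customer_" over "billing_"
            if attr and (best is None or len(prefix) > best[0]):
                best = (len(prefix), (parent_table, fk_column, attr))
    return best[1] if best else None

print(infer_auto_column("customer_email", {"customer_id": "customers"}))
```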

## Cross-Table Relationships

### Using copy_from_fk()

```yaml
- column_name: customer_email
  data: copy_from_fk("customers", "customer_id", "email")
```

- `copy_from_fk(parent_table, fk_column, parent_attr)` copies an attribute from the parent row referenced by the foreign key.

- Useful when you need to duplicate a value from the parent instead of generating it again.

- Parent tables must be defined before child tables in the YAML (no automatic backfilling).

Full parent/child example:

```yaml
tables:
  - table_name: customers
    row_count: 10
    columns:
      - column_name: customer_id
        is_primary_key: true
        data: row_id
      - column_name: email
        data: fake.email()

  - table_name: orders
    row_count: 50
    columns:
      - column_name: order_id
        data: row_id
        is_primary_key: true
      - column_name: customer_id
        data: foreign_key("customers", "customer_id")
      - column_name: customer_email
        data: copy_from_fk("customers", "customer_id", "email")
```
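Conceptually, `copy_from_fk` is a lookup: once the parent table exists, the child row follows its foreign key to the parent row and copies one attribute. The sketch below models that with plain Python dicts, which also shows why the parent table has to be generated first. `index_by_key` and `copy_from_parent` are hypothetical helpers for illustration, not tablefaker functions.

```python
import random

# Parent table, generated first (children can only reference rows that already exist).
customers = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": "ben@example.com"},
    {"customer_id": 3, "email": "cho@example.com"},
]

def index_by_key(rows, key_column):
    """Map primary-key value -> parent row for constant-time lookups."""
    return {row[key_column]: row for row in rows}

def copy_from_parent(parent_index, fk_value, parent_attr):
    """Copy one attribute from the parent row that `fk_value` points to."""
    return parent_index[fk_value][parent_attr]

rng = random.Random(7)
customer_index = index_by_key(customers, "customer_id")
orders = []
for order_id in range(1, 6):
    customer_id = rng.choice(customers)["customer_id"]  # uniform, foreign_key-style pick
    orders.append({
        "order_id": order_id,
        "customer_id": customer_id,
        "customer_email": copy_from_parent(customer_index, customer_id, "email"),
    })
```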

### Automatic attribute inference in action

```yaml
config:
  infer_entity_attrs_by_name: true
tables:
  - table_name: customers
    columns:
      - column_name: customer_id
        is_primary_key: true
        data: row_id
      - column_name: email
        data: fake.email()
  - table_name: orders
    columns:
      - column_name: customer_id
        data: foreign_key("customers", "customer_id")
      - column_name: customer_email
        data: auto # Automatically resolved from the customer_id FK
```

- `data: auto` indicates that the value will be inferred by name from the referenced parent row when `infer_entity_attrs_by_name` is true.

## Foreign Key Distributions

Foreign keys support different sampling distributions to model realistic parent usage patterns.

### Uniform distribution (default)

```yaml
data: foreign_key("customers", "customer_id")
```

- Backward compatible: selects parent keys uniformly at random.

### Zipf (power-law) distribution

```yaml
data: foreign_key("customers", "customer_id", distribution="zipf", param=1.2)
```

- Produces head-heavy (long-tail) distributions where a few parents appear much more frequently.

- `param` controls concentration: higher values concentrate more on top-ranked parents.

- Useful for modeling popular customers, trending products, or social systems with power-law behavior.
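tablefaker's exact Zipf sampler isn't described here, so the sketch below assumes a *finite* Zipf over the parent keys: the parent at rank r (its 1-based position in the parent list) gets weight 1 / r^param, and Python's `random.choices` does the weighted draw. A higher `param` makes the head heavier. `zipf_choices` is a hypothetical helper.

```python
import random
from collections import Counter

def zipf_choices(parent_keys, s, rng, k=1):
    """Sample k keys; the key at rank r (1-based list position) has weight 1 / r**s."""
    weights = [1.0 / rank ** s for rank in range(1, len(parent_keys) + 1)]
    return rng.choices(parent_keys, weights=weights, k=k)

rng = random.Random(42)
customer_ids = list(range(1, 101))
counts = Counter(zipf_choices(customer_ids, 1.2, rng, k=10_000))
print(counts.most_common(3))  # the three most frequently referenced customer ids
```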

### Weighted parent distribution (attribute-based)

```yaml
# quoted because the ": " inside weights would otherwise be parsed as YAML mapping syntax
data: 'foreign_key(
  "customers",
  "customer_id",
  distribution="weighted_parent",
  parent_attr="rating",
  weights={"5": 3, "4": 2, "3": 1}
  )'
```

- Weights are applied based on a parent attribute (here `rating`) so parents with certain attribute values are preferred.

- Any parent attribute value not listed in `weights` defaults to weight `1.0`.

- Useful to prefer high-rated customers, VIP tiers, or any attribute-driven bias.
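The weighting rule above can be sketched in a few lines. This sketch assumes the parent attribute value is converted to a string before it is looked up in `weights` (so a rating of `5` matches the key `"5"`), and it applies the documented default of `1.0` for unlisted values. `weighted_parent_choices` is a hypothetical helper, not tablefaker's implementation.

```python
import random
from collections import Counter

def weighted_parent_choices(parent_rows, key_column, parent_attr, weights, rng, k=1):
    """Pick k parent keys, weighting each parent by weights[str(parent[parent_attr])].

    Attribute values missing from `weights` fall back to a weight of 1.0.
    """
    row_weights = [float(weights.get(str(row[parent_attr]), 1.0)) for row in parent_rows]
    picks = rng.choices(parent_rows, weights=row_weights, k=k)
    return [row[key_column] for row in picks]

customers = [
    {"customer_id": 1, "rating": 3},
    {"customer_id": 2, "rating": 4},
    {"customer_id": 3, "rating": 5},
    {"customer_id": 4, "rating": 2},  # not listed in weights -> weight 1.0
]
rng = random.Random(4242)
counts = Counter(weighted_parent_choices(
    customers, "customer_id", "rating", {"5": 3, "4": 2, "3": 1}, rng, k=7000))
print(counts)
```

With weights 1, 2, 3, and 1, customer 3 should be picked about three times as often as customer 1.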

## Complete example (seed, inference, weighted FK)

```yaml
version: 1
config:
  locale: en_US
  seed: 4242
  infer_entity_attrs_by_name: true

tables:
  - table_name: customers
    row_count: 100
    columns:
      - column_name: customer_id
        data: row_id
        is_primary_key: true
      - column_name: email
        data: fake.unique.email()
      - column_name: rating
        data: random.choice([3, 4, 5])

  - table_name: orders
    row_count: 500
    columns:
      - column_name: order_id
        data: row_id
        is_primary_key: true
      - column_name: customer_id
        data: 'foreign_key(
          "customers",
          "customer_id",
          distribution="weighted_parent",
          parent_attr="rating",
          weights={"5": 3, "4": 2, "3": 1}
          )'
      - column_name: customer_email
        data: auto # Inferred from customer_id FK
```

## Notes

- Parent tables must be defined before child tables (no automatic backfilling/topological sort yet).

- Two-phase row evaluation ensures column order within a table does not affect correctness (you can reference other columns freely).

- `fake.unique` behavior is deterministic only when the same `Faker` instance is reused and `config.seed` is fixed.

- All sampling distributions are deterministic given a fixed seed.
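One simple way to make column order irrelevant is to retry columns whose expressions reference values that aren't computed yet. The sketch below does that with repeated passes over a row; it illustrates the idea under that assumption and is not tablefaker's actual two-phase evaluator. `evaluate_row` is hypothetical, and like any `eval`-based approach it should only run trusted YAML.

```python
def evaluate_row(columns, context):
    """Evaluate {column_name: expression} in any declared order by deferring unresolved ones."""
    row = {}
    pending = dict(columns)
    while pending:
        progressed = False
        for name, expr in list(pending.items()):
            try:
                # Expressions see the shared context (e.g. row_id) plus columns computed so far
                row[name] = eval(expr, {**context, **row})
            except NameError:
                continue  # depends on a column not computed yet; retry on the next pass
            del pending[name]
            progressed = True
        if not progressed:
            raise ValueError(f"Unresolvable columns: {sorted(pending)}")
    return row

# full_name is declared before the columns it depends on
row = evaluate_row(
    {"full_name": 'first_name + " " + last_name', "first_name": '"Ada"', "last_name": '"Lovelace"'},
    {"row_id": 1},
)
print(row["full_name"])
```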

## Data Generation

You define the data generation logic for each column as a Python expression in its `data` field. The Faker, random, and datetime packages are pre-imported and ready to use.

- Use the Faker package for realistic data, e.g., `fake.first_name()` or `fake.random_int(1, 10)`.

- Use the random package for basic randomness, e.g., `random.choice(["male", "female"])`.

- Use the datetime package for current date and time, e.g., `datetime.today().strftime('%Y-%m-%d')`.

- You can use a column to generate a new column, e.g., `first_name + " " + last_name`.

- Use `is_primary_key: true` to mark a column as a primary key.

- Use `foreign_key` to get a primary key value from another table, e.g., `foreign_key("person", "id")`. When a row calls `foreign_key` more than once on the same parent table (for example, `foreign_key("person", "id")` and `foreign_key("person", "full_name")`), all of those values come from the same parent row.

You can write your logic on a single line or across multiple lines. The built-in `row_id` variable provides a unique integer for each row; set its starting value with the `start_row_id` keyword.
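A multi-line `data` block behaves like the body of a function: it runs with the row's other columns in scope, and its `return` value becomes the cell value. A minimal sketch of that idea, assuming the block is wrapped in a generated function; `run_block` is a hypothetical helper, and a block without a `return` would yield `None`.

```python
import textwrap

def run_block(block, context):
    """Run a multi-line `data:` block that ends with `return <value>`."""
    # Wrap the block in a function so that `return` is legal, then call it.
    source = "def _column_value():\n" + textwrap.indent(textwrap.dedent(block), "    ")
    namespace = dict(context)  # other column values become globals of the function
    exec(source, namespace)
    return namespace["_column_value"]()

discount_block = (
    "if age < 25 or age > 60:\n"
    "  return True\n"
    "else:\n"
    "  return False\n"
)
print(run_block(discount_block, {"age": 20}))
```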

In addition, you have control over how your data is exported:

- **`export_file_count`**: This keyword lets you specify the total number of output files to generate. It's especially useful when you need to split a large dataset into multiple, more manageable files.

- **`export_file_row_count`**: Use this keyword to set the maximum number of rows that each exported file should contain. This ensures that each file remains within a desired size limit and is easier to handle.
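The two export keywords can be thought of as a way to plan file sizes. The sketch below assumes rows are split into equal chunks (the last file takes the remainder) and that `export_file_count` takes precedence over `export_file_row_count`, as noted in the advanced sample. `plan_export_files` is hypothetical, and tablefaker's exact split may differ.

```python
import math

def plan_export_files(row_count, export_file_count=None, export_file_row_count=None):
    """Return the number of rows written to each output file (hypothetical splitting rule)."""
    if export_file_count:  # takes precedence over export_file_row_count
        rows_per_file = math.ceil(row_count / export_file_count)
    elif export_file_row_count:
        rows_per_file = export_file_row_count
    else:
        return [row_count]  # a single file holds every row
    sizes = []
    remaining = row_count
    while remaining > 0:
        sizes.append(min(rows_per_file, remaining))
        remaining -= sizes[-1]
    return sizes

print(plan_export_files(10, export_file_count=3))
```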

Columns will automatically have the best-fitting data type. However, if you'd like to specify a data type, use the `type` keyword. You can assign data types using NumPy dtypes, Pandas Extension Dtypes, or Python native types.

Here are some examples:

```
fake.first_name()
fake.random_int(1, 10)
random.choice(["male", "female"])
datetime.today()
911 # number
r"170 cm" # string
```

## Example Code

```python
import tablefaker

# exports to the current folder in csv format
tablefaker.to_csv("test_table.yaml")

# exports SQL INSERT scripts that you can run against your database
tablefaker.to_sql("test_table.yaml")

# exports all tables in json format
tablefaker.to_json("test_table.yaml", "./target_folder")

# exports all tables in parquet format
tablefaker.to_parquet("test_table.yaml", "./target_folder")

# exports all tables in deltalake format
tablefaker.to_deltalake("test_table.yaml", "./target_folder")

# exports a single table to the provided folder
tablefaker.to_deltalake("test_table.yaml", "./target_folder/person/", table_name="person")

# exports only the first table in excel format
tablefaker.to_excel("test_table.yaml", "./target_folder/target_file.xlsx")

# get the tables as pandas dataframes
df_dict = tablefaker.to_pandas("test_table.yaml")
person_df = df_dict["person"]
print(person_df.head(5))
```

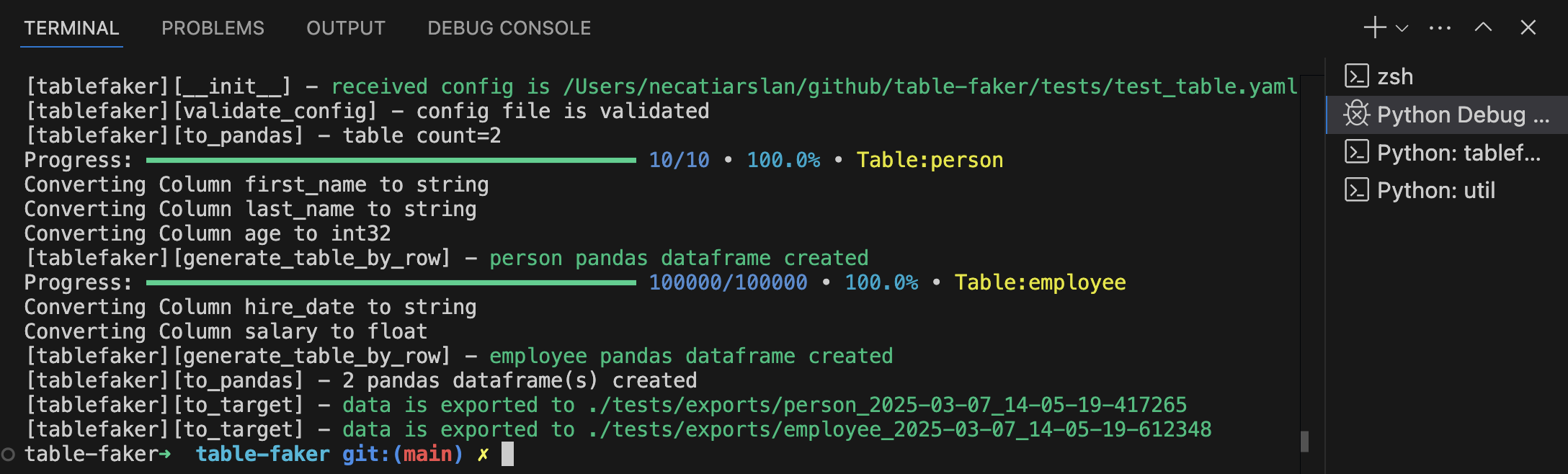

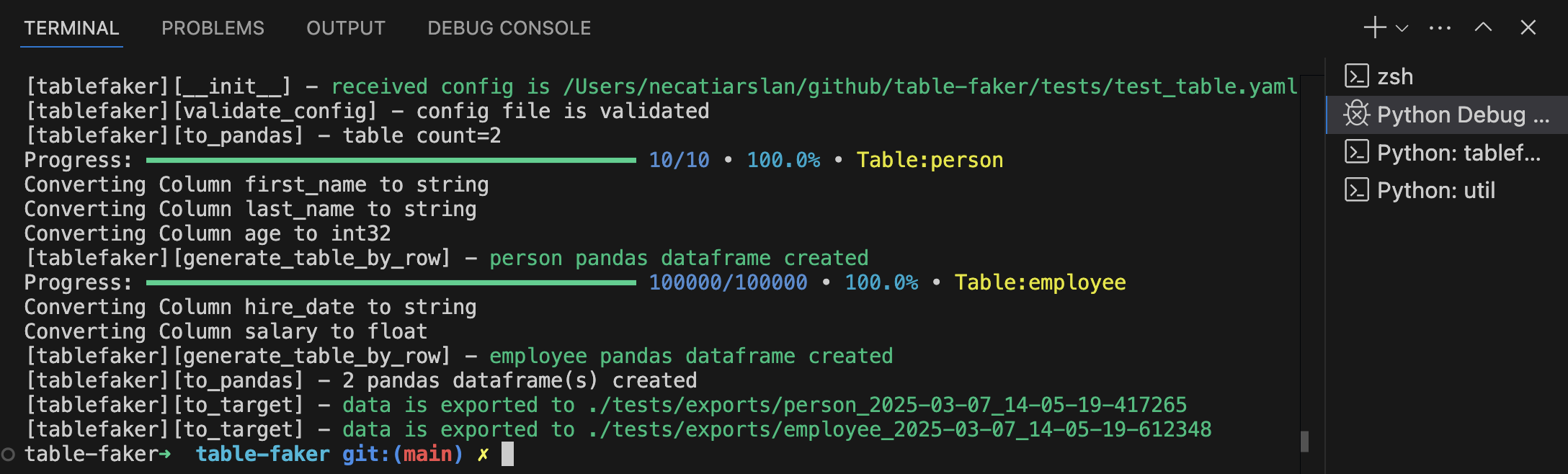

## Sample CLI Command

You can use tablefaker in your terminal for ad-hoc needs or in shell scripts to automate fake data generation. The CLI reads the YAML config and supports importing Python modules via `config.python_import` and adding Faker community providers declared under `config.community_providers` (see "Custom Faker Providers" below). Custom Python functions (passed via the `custom_function` parameter) are only supported when using the Python API programmatically.

Supported CLI flags:

- `--config`: path to a YAML or JSON config file
- `--file_type`: `csv`, `json`, `parquet`, `excel`, `sql`, or `deltalake` (default: `csv`)
- `--target`: target folder or file path
- `--seed`: integer seed that makes generation deterministic
- `--infer-attrs`: `true` or `false`, overrides `infer_entity_attrs_by_name`

```bash
# exports to current folder in csv format (reads community_providers from config)
tablefaker --config tests/test_table.yaml

# exports as sql insert script files
tablefaker --config tests/test_table.yaml --file_type sql --target ./out

# exports to current folder in excel format
tablefaker --config tests/test_table.yaml --file_type excel

# exports all tables in json format to a folder
tablefaker --config tests/test_table.yaml --file_type json --target ./target_folder

# exports a single table to a parquet file
tablefaker --config tests/test_table.yaml --file_type parquet --target ./target_folder/target_file.parquet

# pass an explicit seed and enable attribute inference
tablefaker --config tests/test_table.yaml --seed 42 --infer-attrs true
```

## Sample CSV Output

```
id,first_name,last_name,age,dob,salary,height,weight
1,John,Smith,35,1992-01-11,,170 cm,150
2,Charles,Shepherd,27,1987-01-02,,170 cm,150
3,Troy,Johnson,42,,,170 cm,150
4,Joshua,Hill,86,1985-07-11,,170 cm,150
5,Matthew,Johnson,31,1940-03-31,,170 cm,150
```

## Sample Sql Output

```sql
INSERT INTO employee
(id,person_id,hire_date,title,salary,height,weight,school,level)
VALUES
(1, 4, '2020-10-09', 'principal engineer', NULL, '170 cm', 150, 'ISLIP HIGH SCHOOL', 'level 2'),
(2, 9, '2002-12-20', 'principal engineer', NULL, '170 cm', 150, 'GUY-PERKINS HIGH SCHOOL', 'level 1'),
(3, 2, '1996-01-06', 'principal engineer', NULL, '170 cm', 150, 'SPRINGLAKE-EARTH ELEM/MIDDLE SCHOOL', 'level 3');
```
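The sample above follows a simple rendering rule: `None` becomes `NULL`, numbers are written bare, and text is single-quoted. Here is a minimal sketch of that rule with standard SQL quote escaping (`'` doubled to `''`). `sql_literal` and `insert_statement` are hypothetical helpers; tablefaker's own renderer, and its handling of booleans and dates, may differ.

```python
def sql_literal(value):
    """Render a Python value as a SQL literal."""
    if value is None:
        return "NULL"
    if isinstance(value, bool):  # check bool before int, since bool is a subclass of int
        return "TRUE" if value else "FALSE"
    if isinstance(value, (int, float)):
        return repr(value)
    return "'" + str(value).replace("'", "''") + "'"

def insert_statement(table, rows):
    """Build one multi-row INSERT in the same layout as the sample output."""
    columns = list(rows[0])
    values = ",\n".join(
        "(" + ", ".join(sql_literal(row[c]) for c in columns) + ")" for row in rows
    )
    return f"INSERT INTO {table}\n({','.join(columns)})\nVALUES\n{values};"

print(insert_statement("employee", [{"id": 1, "salary": None, "height": "170 cm", "weight": 150}]))
```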

## Custom Faker Providers

You can add custom or community Faker providers to tablefaker. The Faker documentation lists the available community providers:

https://faker.readthedocs.io/en/master/communityproviders.html#

```yaml
version: 1
config:
  locale: en_US
tables:
  - table_name: employee
    row_count: 5
    columns:
      - column_name: id
        data: row_id
      - column_name: person_id
        data: fake.random_int(1, 10)
      - column_name: hire_date
        data: fake.date_between()
      - column_name: school
        data: fake.school_name() # custom provider
```

```python
import tablefaker

# import the custom faker provider
from faker_education import SchoolProvider

# pass the provider class to tablefaker via fake_provider;
# you can pass a single provider or a list of providers
tablefaker.to_csv("test_table.yaml", "./target_folder", fake_provider=SchoolProvider)

# fake_provider works with all the other to_* functions as well
```

## Custom Functions

With Table Faker, you can provide your own custom functions to generate column data. This advanced feature lets you write generation logic that pulls data from a database, API, file, or any other source.

You can also supply multiple functions as a list.

Each custom function should return a single value, giving you full control over your synthetic data.

```python
from tablefaker import tablefaker
from faker import Faker

fake = Faker()

def get_level():
    # returns a single value per call, e.g. "level 3"
    return f"level {fake.random_int(1, 5)}"

tablefaker.to_csv("test_table.yaml", "./target_folder", custom_function=get_level)
```

Then call `get_level()` from the `data` field in your YAML file:

```yaml
version: 1
config:
  locale: en_US
tables:
  - table_name: employee
    row_count: 5
    columns:
      - column_name: id
        data: row_id
      - column_name: person_id
        data: fake.random_int(1, 10)
      - column_name: hire_date
        data: fake.date_between()
      - column_name: level
        data: get_level() # custom function
```

## Generate Yaml File From Avro Schema or Csv

If you have an [Avro schema](https://avro.apache.org/docs/++version++/specification/), you can generate a YAML file from it with the `avro_to_yaml` function.

```python
from tablefaker import tablefaker

tablefaker.avro_to_yaml("tests/test_person.avsc", "tests/exports/person.yaml")
```

You can also define your columns in a CSV file and generate the YAML file from it:

```python
from tablefaker import tablefaker

tablefaker.csv_to_yaml("tests/test_person.csv", "tests/exports/person.yaml")
```

Sample CSV file:

```
column_name,description,data,type,null_percentage
id,Unique identifier for the person,row_id,,
first_name,First name of the person,fake.first_name(),string,
last_name,Last name of the person,fake.last_name(),string,
age,Age of the person,fake.random_int(),int32,0.1
email,Email address of the person,fake.email(),string,0.1
is_active,Indicates if the person is active,fake.pybool(),boolean,0.2
signup_date,Date when the person signed up,fake.date(),,0.3
```
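To make the CSV-to-YAML mapping concrete, the sketch below reads a CSV like the one above with Python's `csv` module and builds one column entry per row, skipping empty cells. `csv_to_columns` is a hypothetical helper; the real `csv_to_yaml` output may add or order fields differently. Note that `data` values containing commas (such as `random.choice(["a", "b"])`) must be quoted in the CSV.

```python
import csv
import io

def csv_to_columns(csv_text):
    """Turn column-definition CSV rows into YAML-ready column dicts."""
    columns = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        column = {"column_name": record["column_name"]}
        for key in ("description", "data", "type"):
            if record.get(key):  # empty CSV cells are skipped
                column[key] = record[key]
        if record.get("null_percentage"):
            column["null_percentage"] = float(record["null_percentage"])
        columns.append(column)
    return columns

SAMPLE = """column_name,description,data,type,null_percentage
id,Unique identifier for the person,row_id,,
age,Age of the person,fake.random_int(),int32,0.1
"""
for column in csv_to_columns(SAMPLE):
    print(column)
```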

## Support & Donation

If you find Table Faker useful and would like to support its development, consider making a [donation](https://github.com/sponsors/necatiarslan).

## Additional Resources

- **Faker Functions**: [Faker Providers](https://faker.readthedocs.io/en/master/providers.html#)

- **Bug Reports & Feature Requests**: [GitHub Issues](https://github.com/necatiarslan/table-faker/issues/new)

## Roadmap

### TODO

- Support composite primary key
  - composite keys are not unique
  - composite keys are not stored together
  - copy_from_fk does not support composite primary key
- is_unique support
- Provide foreign keys (dictionary, array, etc.) as an external source
- Variables
- Generate template YAML file from sample data
- Use an AI service to generate data generation logic
- Make the openpyxl package optional for Excel export

### Future Enhancements

- PyArrow table support
- Avro file support
- Add target file name to YAML

---

**Follow for Updates**: [LinkedIn](https://www.linkedin.com/in/necati-arslan/)

**Author**: Necati Arslan | [Email](mailto:necatia@gmail.com)

Raw data

{

"_id": null,

"home_page": "https://github.com/necatiarslan/table-faker",

"name": "tablefaker",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.9",

"maintainer_email": null,

"keywords": "faker, fake data, tabular data, data generation, pandas, parquet, csv, json, excel, sqllite, delta lake",

"author": "Necati Arslan",

"author_email": "necatia@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/12/26/fe0d1936c6c437dd3f8f81fa3658302aaf95c1bdb8fd66b88add0bcc4945/tablefaker-1.8.0.tar.gz",

"platform": null,

"description": "#  Table Faker\n\n**tablefaker** is a versatile Python package that enables effortless generation of realistic yet synthetic table data for various applications. Whether you need test data for software development, this tool simplifies the process with an intuitive schema definition in YAML format.\n\n## Key Features\n- **Schema Definition**: Define your table schema using a simple YAML file, specifying column names, data types, fake data generation logic, and relationships.\n- **Faker & Randomization**: Utilize the **Faker** library and random data generation to create authentic-looking synthetic data.\n- **Multiple Table Support**: Create multiple tables with different schemas and data generation logic in a single YAML file. Define relationships between tables for foreign keys and primary keys.\n- **Multiple Output Formats**:\n - Pandas DataFrame\n - SQL insert script\n - CSV\n - Parquet\n - JSON\n - Excel\n - Delta Lake\n\n## Installation\n```bash\npip install tablefaker\n```\n\n## YAML schema reference \n\n```yaml\nversion: 1\n\nconfig:\n locale: <locale_string> # e.g. 
en_US\n seed: <integer> # deterministic seed applied to random, numpy, Faker\n infer_entity_attrs_by_name: <true|false> # enable `data: auto` name inference\n python_import:\n - <module_name> # modules to import (expose submodules via import)\n community_providers:\n - <community_provider_name> # module.ClassName or package.provider\n\ntables:\n - table_name: <table_name>\n row_count: <integer>\n start_row_id: <integer>\n export_file_count: <integer>\n export_file_row_count: <integer>\n\n columns:\n - column_name: <column_name> # string (required)\n data: <package>.<function_name>() | <hardcoded_value> | <column_reference> | auto | None\n # Examples of allowed data forms:\n # <package>.<function_name>() -> faker or other imported function call\n # <hardcoded_value> -> numeric or r\"string\"\n # <column_reference> -> reference another column by name (first_name + \" \" + last_name)\n # auto -> resolved to copy_from_fk(...) when infer_entity_attrs_by_name is true\n # None -> explicit NULL\n # multi-line Python block using | (must return a value)\n is_primary_key: <true|false>\n type: string | int32 | int64 | float | boolean # a NumPy dtype object, a pandas ExtensionDtype, or a Python type\n null_percentage: <float between 0.0 and 1.0>\n description: <string>\n\n# Expression evaluation context\n# Available variables inside `data` expressions:\n# fake, random, datetime, date, timedelta, time, timezone, tzinfo, UTC, MINYEAR, MAXYEAR, math, string, row_id\n#\n# Special helper functions:\n# foreign_key(parent_table, parent_column, distribution=\"uniform\", param=None, parent_attr=None, weights=None)\n# copy_from_fk(parent_table, fk_column, parent_attr)\n#\n# Multi-line Python block:\n# Use YAML block scalar `|` and include a final `return <value>` statement.\n\n```\n\nNotes:\n- Parent tables must be defined before child tables.\n- Two-phase evaluation resolves columns that reference other columns correctly.\n- For a full example, see 
[`tests/test_table.yaml`](tests/test_table.yaml).\n\n## Sample Yaml File Minimal\n```yaml\ntables:\n - table_name: person\n columns:\n - column_name: id\n data: row_id\n - column_name: first_name\n data: fake.first_name()\n - column_name: last_name\n data: fake.last_name()\n```\n## Sample Yaml File Advanced\n```yaml\nversion: 1\nconfig:\n locale: en_US\n python_import:\n - dateutil\n - faker-education # custom faker provider\n community_providers:\n - faker_education.SchoolProvider # custom faker provider\ntables:\n - table_name: person\n row_count: 10\n start_row_id: 101 # you can set row_id starting point\n export_file_count: 3 # you can set export file count (dominant to export_file_row_count)\n columns:\n - column_name: id\n data: row_id # row_id is a built-in function\n is_primary_key: true # define primary key to use as a foreign key\n - column_name: first_name\n data: fake.first_name() # faker function\n type: string\n - column_name: last_name\n data: fake.last_name()\n type: string\n - column_name: full_name\n data: first_name + \" \" + last_name # use a column to generate a new column\n is_primary_key: true\n - column_name: age\n data: fake.random_int(18, 90)\n type: int32\n - column_name: street_address\n data: fake.street_address()\n - column_name: city\n data: fake.city()\n - column_name: state_abbr\n data: fake.state_abbr()\n - column_name: postcode\n data: fake.postcode()\n - column_name: gender\n data: random.choice([\"male\", \"female\"]) # random.choice is a built-in function\n null_percentage: 0.5 # null_percentage is a built-in function\n - column_name: left_handed\n data: fake.pybool()\n - column_name: today\n data: datetime.today().strftime('%Y-%m-%d') # datetime package is available by default\n - column_name: easter_date\n data: dateutil.easter.easter(2025).strftime('%Y-%m-%d') # python package you need to import in python_import\n - column_name: discount_eligibility # custom python function\n data: |\n if age < 25 or age > 60:\n return 
True\n else:\n return False\n - table_name: employee\n row_count: 10\n export_file_row_count: 60 # you can set export file row count\n columns:\n - column_name: id\n data: row_id\n - column_name: person_id\n data: foreign_key(\"person\", \"id\") # get primary key from another table\n - column_name: full_name\n data: foreign_key(\"person\", \"full_name\")\n - column_name: hire_date\n data: fake.date_between()\n type: string\n - column_name: title\n data: random.choice([\"engineer\", \"senior engineer\", \"principal engineer\", \"director\", \"senior director\", \"manager\", \"vice president\", \"president\"])\n - column_name: salary\n data: None #NULL\n type: float\n - column_name: height\n data: r\"170 cm\" #string\n - column_name: weight\n data: 150 #number\n - column_name: school\n data: fake.school_name() # community provider function\n - column_name: level\n data: fake.school_level() # community provider function\n```\n[full yml example](tests/test_table.yaml)\n\n## Configuration: Determinism & Attribute Inference\n\n### Seed (deterministic)\n```yaml\nconfig:\n locale: en_US\n seed: 42 # Optional: for reproducible datasets\n```\n- Setting `config.seed` makes runs deterministic: the same seed and same YAML produce identical outputs.\n- The seed is applied to Python's `random`, NumPy (when available), and the `Faker` instance used by tablefaker.\n- Use cases: repeatable tests, CI snapshots, and reproducible examples.\n\n### Attribute name inference\n```yaml\nconfig:\n infer_entity_attrs_by_name: true # Optional: auto-infer FK attributes\n```\n- When enabled, columns named with the pattern `<fkprefix>_<attr>` will be automatically bound to the referenced parent row if a sibling `<fkprefix>_id` exists and is a foreign key.\n- Example: `customer_email` will be auto-resolved from the row referenced by `customer_id` (if `customer_id` is a FK to `customers.customer_id`).\n\n## Cross-Table Relationships\n\n### Using copy_from_fk()\n```yaml\n- column_name: 
customer_email\n data: copy_from_fk(\"customers\", \"customer_id\", \"email\")\n```\n- `copy_from_fk(fk_column, parent_table, parent_attr)` copies an attribute from the parent row referenced by the foreign key.\n- Useful when you need to duplicate a value from the parent instead of generating it again.\n- Parent tables must be defined before child tables in the YAML (no automatic backfilling).\n\nFull parent/child example:\n```yaml\ntables:\n - table_name: customers\n row_count: 10\n columns:\n - column_name: customer_id\n is_primary_key: true\n data: row_id\n - column_name: email\n data: fake.email()\n\n - table_name: orders\n row_count: 50\n columns:\n - column_name: order_id\n data: row_id\n is_primary_key: true\n - column_name: customer_id\n data: foreign_key(\"customers\", \"customer_id\")\n - column_name: customer_email\n data: copy_from_fk(\"customers\", \"customer_id\", \"email\")\n```\n\n### Automatic attribute inference in action\n```yaml\nconfig:\n infer_entity_attrs_by_name: true\ntables:\n - table_name: customers\n columns:\n - column_name: customer_id\n is_primary_key: true\n data: row_id\n - column_name: email\n data: fake.email()\n - table_name: orders\n columns:\n - column_name: customer_id\n data: foreign_key(\"customers\", \"customer_id\")\n - column_name: customer_email\n data: auto # Automatically resolved from the customer_id FK\n```\n- `data: auto` indicates that the value will be inferred by name from the referenced parent row when `infer_entity_attrs_by_name` is true.\n\n## Foreign Key Distributions\nForeign keys support different sampling distributions to model realistic parent usage patterns.\n\n### Uniform distribution (default)\n```yaml\ndata: foreign_key(\"customers\", \"customer_id\")\n```\n- Backward compatible: selects parent keys uniformly at random.\n\n### Zipf (power-law) distribution\n```yaml\ndata: foreign_key(\"customers\", \"customer_id\", distribution=\"zipf\", param=1.2)\n```\n- Produces head-heavy (long-tail) distributions 
where a few parents appear much more frequently.\n- `param` controls concentration: higher values concentrate more on top-ranked parents.\n- Useful for modeling popular customers, trending products, or social-systems with power-law behavior.\n\n### Weighted parent distribution (attribute-based)\n```yaml\ndata: foreign_key(\n \"customers\",\n \"customer_id\",\n distribution=\"weighted_parent\",\n parent_attr=\"rating\",\n weights={\"5\": 3, \"4\": 2, \"3\": 1}\n)\n```\n- Weights are applied based on a parent attribute (here `rating`) so parents with certain attribute values are preferred.\n- Any parent attribute value not listed in `weights` defaults to weight `1.0`.\n- Useful to prefer high-rated customers, VIP tiers, or any attribute-driven bias.\n\n## Complete example (seed, inference, weighted FK)\n```yaml\nversion: 1\nconfig:\n locale: en_US\n seed: 4242\n infer_entity_attrs_by_name: true\n\ntables:\n - table_name: customers\n row_count: 100\n columns:\n - column_name: customer_id\n data: row_id\n is_primary_key: true\n - column_name: email\n data: fake.unique.email()\n - column_name: rating\n data: random.choice([3, 4, 5])\n\n - table_name: orders\n row_count: 500\n columns:\n - column_name: order_id\n data: row_id\n is_primary_key: true\n - column_name: customer_id\n data: foreign_key(\n \"customers\",\n \"customer_id\",\n distribution=\"weighted_parent\",\n parent_attr=\"rating\",\n weights={\"5\": 3, \"4\": 2, \"3\": 1}\n )\n - column_name: customer_email\n data: auto # Inferred from customer_id FK\n```\n\n## Notes\n- Parent tables must be defined before child tables (no automatic backfilling/topological sort yet).\n- Two-phase row evaluation ensures column order within a table does not affect correctness (you can reference other columns freely).\n- `fake.unique` behavior is deterministic only when the same `Faker` instance is reused and `config.seed` is fixed.\n- All sampling distributions are deterministic given a fixed seed.\n \n## Data Generation\nYou 
can define your dummy data generation logic in a Python function. The Faker, random and datetime packages are pre-imported and ready to use.\n\n- Use the Faker package for realistic data, e.g., `fake.first_name()` or `fake.random_int(1, 10)`.\n- Use the random package for basic randomness, e.g., `random.choice([\"male\", \"female\"])`.\n- Use the datetime package for current date and time, e.g., `datetime.today().strftime('%Y-%m-%d')`.\n- You can use a column to generate a new column, e.g., `first_name + \" \" + last_name`.\n- Use is_primary_key to define a primary key, e.g., `is_primary_key: true`.\n- Use foreign_key to get a primary key from another table, e.g., `foreign_key(\"person\", \"id\")`. If you use multiple foreign key functions, you will get the primary key values from the same row.\n\nYou can write your logic in a single line or multiple lines, depending on your preference. A built-in function, `row_id`, provides a unique integer for each row. You can specify row_id starting point using the `start_row_id` keyword.\n\nIn addition, you have control over how your data is exported:\n\n- **`export_file_count`**: This keyword lets you specify the total number of output files to generate. It's especially useful when you need to split a large dataset into multiple, more manageable files.\n- **`export_file_row_count`**: Use this keyword to set the maximum number of rows that each exported file should contain. This ensures that each file remains within a desired size limit and is easier to handle.\n\n\nColumns will automatically have the best-fitting data type. However, if you'd like to specify a data type, use the `type` keyword. 
You can assign data types using NumPy dtypes, pandas extension dtypes, or native Python types.\n\nHere are some example `data` values:\n```\nfake.first_name()\nfake.random_int(1, 10)\nrandom.choice([\"male\", \"female\"])\ndatetime.today()\n911 # number\nr\"170 cm\" # string\n```\n\n## Example Code\n```python\nimport tablefaker\n\n# exports to the current folder in csv format\ntablefaker.to_csv(\"test_table.yaml\")\n\n# exports SQL INSERT scripts that you can run against your database\ntablefaker.to_sql(\"test_table.yaml\")\n\n# exports all tables in json format\ntablefaker.to_json(\"test_table.yaml\", \"./target_folder\")\n\n# exports all tables in parquet format\ntablefaker.to_parquet(\"test_table.yaml\", \"./target_folder\")\n\n# exports all tables in deltalake format\ntablefaker.to_deltalake(\"test_table.yaml\", \"./target_folder\")\n\n# exports a single table to the provided folder\ntablefaker.to_deltalake(\"test_table.yaml\", \"./target_folder/person/\", table_name=\"person\")\n\n# exports only the first table in excel format\ntablefaker.to_excel(\"test_table.yaml\", \"./target_folder/target_file.xlsx\")\n\n# returns a dict of pandas DataFrames keyed by table name\ndf_dict = tablefaker.to_pandas(\"test_table.yaml\")\nperson_df = df_dict[\"person\"]\nprint(person_df.head(5))\n```\n\n## Sample CLI Command\nYou can use tablefaker in your terminal for ad-hoc needs or in shell scripts to automate fake data generation. The CLI reads the YAML config, imports Python modules listed in `config.python_import`, and adds the Faker community providers declared under `config.community_providers` (see \"Custom Faker Providers\" below). 
Custom Python functions (passed via the `custom_function` parameter) are only supported when using the Python API programmatically.\n\nSupported CLI flags:\n- `--config`: path to a YAML or JSON config file\n- `--file_type`: one of csv, json, parquet, excel, sql, deltalake (default: csv)\n- `--target`: target folder or file path\n- `--seed`: integer seed that makes generation deterministic\n- `--infer-attrs`: \"true\" or \"false\" to override `infer_entity_attrs_by_name`\n\n```bash\n# exports to current folder in csv format (reads community_providers from config)\ntablefaker --config tests/test_table.yaml\n\n# exports SQL insert script files to ./out\ntablefaker --config tests/test_table.yaml --file_type sql --target ./out\n\n# exports to current folder in excel format\ntablefaker --config tests/test_table.yaml --file_type excel\n\n# exports all tables in json format to a folder\ntablefaker --config tests/test_table.yaml --file_type json --target ./target_folder\n\n# exports a single table to a parquet file\ntablefaker --config tests/test_table.yaml --file_type parquet --target ./target_folder/target_file.parquet\n\n# passes an explicit seed and enables attribute inference\ntablefaker --config tests/test_table.yaml --seed 42 --infer-attrs true\n```\n\n## Sample CSV Output\n```\nid,first_name,last_name,age,dob,salary,height,weight\n1,John,Smith,35,1992-01-11,,170 cm,150\n2,Charles,Shepherd,27,1987-01-02,,170 cm,150\n3,Troy,Johnson,42,,,170 cm,150\n4,Joshua,Hill,86,1985-07-11,,170 cm,150\n5,Matthew,Johnson,31,1940-03-31,,170 cm,150\n```\n\n## Sample SQL Output\n```sql\nINSERT INTO employee\n(id,person_id,hire_date,title,salary,height,weight,school,level)\nVALUES\n(1, 4, '2020-10-09', 'principal engineer', NULL, '170 cm', 150, 'ISLIP HIGH SCHOOL', 'level 2'),\n(2, 9, '2002-12-20', 'principal engineer', NULL, '170 cm', 150, 'GUY-PERKINS HIGH SCHOOL', 'level 1'),\n(3, 2, '1996-01-06', 'principal engineer', NULL, '170 cm', 150, 'SPRINGLAKE-EARTH ELEM/MIDDLE SCHOOL', 'level 3');\n```\n\n## Custom Faker 
Providers\nYou can add and use custom or community Faker providers with Table Faker.\\\nA list of community providers is available here:\\\nhttps://faker.readthedocs.io/en/master/communityproviders.html#\n\n```yaml\nversion: 1\nconfig:\n  locale: en_US\ntables:\n  - table_name: employee\n    row_count: 5\n    columns:\n      - column_name: id\n        data: row_id\n      - column_name: person_id\n        data: fake.random_int(1, 10)\n      - column_name: hire_date\n        data: fake.date_between()\n      - column_name: school\n        data: fake.school_name() # custom provider\n```\n\n```python\nimport tablefaker\n\n# import the custom Faker provider\nfrom faker_education import SchoolProvider\n\n# pass the provider class to tablefaker via the fake_provider parameter;\n# you can pass a single provider or a list of providers\ntablefaker.to_csv(\"test_table.yaml\", \"./target_folder\", fake_provider=SchoolProvider)\n# fake_provider works with all the other to_* methods as well\n```\n\n## Custom Functions\nWith Table Faker, you can provide your own custom functions to generate column data. This lets you write generation logic that pulls data from a database, API, file, or any other source.\\\nYou can also supply multiple functions in a list. 
\\\nEach custom function should return a single value.\n\n```python\nfrom tablefaker import tablefaker\nfrom faker import Faker\n\nfake = Faker()\n\ndef get_level():\n    # returns a string such as \"level 3\"\n    return f\"level {fake.random_int(1, 5)}\"\n\ntablefaker.to_csv(\"test_table.yaml\", \"./target_folder\", custom_function=get_level)\n```\nThen call `get_level()` from your YAML file:\n```yaml\nversion: 1\nconfig:\n  locale: en_US\ntables:\n  - table_name: employee\n    row_count: 5\n    columns:\n      - column_name: id\n        data: row_id\n      - column_name: person_id\n        data: fake.random_int(1, 10)\n      - column_name: hire_date\n        data: fake.date_between()\n      - column_name: level\n        data: get_level() # custom function\n```\n\n## Generate a YAML File From an Avro Schema or CSV\nIf you have an [avro schema](https://avro.apache.org/docs/++version++/specification/), you can generate a YAML file with the `avro_to_yaml` function.\n\n```python\nfrom tablefaker import tablefaker\ntablefaker.avro_to_yaml(\"tests/test_person.avsc\", \"tests/exports/person.yaml\")\n```\n\nYou can also define your columns in a CSV file and generate the YAML file with the `csv_to_yaml` function.\n\n```python\nfrom tablefaker import tablefaker\ntablefaker.csv_to_yaml(\"tests/test_person.csv\", \"tests/exports/person.yaml\")\n```\n\nSample CSV file:\n```\ncolumn_name,description,data,type,null_percentage\nid,Unique identifier for the person,row_id,,\nfirst_name,First name of the person,fake.first_name(),string,\nlast_name,Last name of the person,fake.last_name(),string,\nage,Age of the person,fake.random_int(),int32,0.1\nemail,Email address of the person,fake.email(),string,0.1\nis_active,Indicates if the person is active,fake.pybool(),boolean,0.2\nsignup_date,Date when the person signed up,fake.date(),,0.3\n```\n\n## Support & Donation\nIf you find Table Faker useful and would like to support its development, consider making a [donation](https://github.com/sponsors/necatiarslan).\n\n## Additional Resources\n- **Faker Functions**: 
[Faker Providers](https://faker.readthedocs.io/en/master/providers.html#)\n- **Bug Reports & Feature Requests**: [GitHub Issues](https://github.com/necatiarslan/table-faker/issues/new)\n\n## Roadmap\n### TODO\n- Support composite primary keys\n  - composite keys are not unique\n  - composite keys are not stored together\n  - copy_from_fk does not support composite primary keys\n- is_unique support\n- Provide foreign keys (dictionary, array, etc.) as an external source\n- Variables\n- Generate a template YAML file from sample data\n- Use an AI service to generate data generation logic\n- Make the openpyxl package optional for Excel export\n\n### Future Enhancements\n- PyArrow table support\n- Avro file support\n- Add target file name to YAML\n\n---\n**Follow for Updates**: [LinkedIn](https://www.linkedin.com/in/necati-arslan/)  \n**Author**: Necati Arslan | [Email](mailto:necatia@gmail.com)\n\n\n",

"bugtrack_url": null,

"license": "Apache License 2.0",

"summary": "A Python package to generate fake tabular data. Get data in pandas dataframe or export to Parquet, DeltaLake, Csv, Json, Excel or Sql",

"version": "1.8.0",

"project_urls": {

"Documentation": "https://github.com/necatiarslan/table-faker/blob/main/README.md",

"Homepage": "https://github.com/necatiarslan/table-faker",

"Source": "https://github.com/necatiarslan/table-faker"

},

"split_keywords": [

"faker",

" fake data",

" tabular data",

" data generation",

" pandas",

" parquet",

" csv",

" json",

" excel",

" sqllite",

" delta lake"

],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "4f8a4958c6a3bc47b618c0b7f5d470ce58af53ed33a350e32e02f91c44d6aeb6",

"md5": "3cce0a70bf2dd59a1d2d34d8af0aaea7",

"sha256": "901c23b003b13c7ba05d21e42807121b34aedc9e9781fda45aebaf51207e539e"

},

"downloads": -1,

"filename": "tablefaker-1.8.0-py3-none-any.whl",

"has_sig": false,

"md5_digest": "3cce0a70bf2dd59a1d2d34d8af0aaea7",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.9",

"size": 41188,

"upload_time": "2025-10-10T19:06:14",

"upload_time_iso_8601": "2025-10-10T19:06:14.710739Z",

"url": "https://files.pythonhosted.org/packages/4f/8a/4958c6a3bc47b618c0b7f5d470ce58af53ed33a350e32e02f91c44d6aeb6/tablefaker-1.8.0-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "1226fe0d1936c6c437dd3f8f81fa3658302aaf95c1bdb8fd66b88add0bcc4945",

"md5": "be4b0b290d7da1b3986a7fe3f7ea1a74",

"sha256": "f6b74dfc45a42af3c8986512ffb77e50174188cd388992590b3813f00c805e3c"

},

"downloads": -1,

"filename": "tablefaker-1.8.0.tar.gz",

"has_sig": false,

"md5_digest": "be4b0b290d7da1b3986a7fe3f7ea1a74",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.9",

"size": 51589,

"upload_time": "2025-10-10T19:06:16",

"upload_time_iso_8601": "2025-10-10T19:06:16.069201Z",

"url": "https://files.pythonhosted.org/packages/12/26/fe0d1936c6c437dd3f8f81fa3658302aaf95c1bdb8fd66b88add0bcc4945/tablefaker-1.8.0.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-10-10 19:06:16",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "necatiarslan",

"github_project": "table-faker",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"requirements": [

{

"name": "cramjam",

"specs": [

[

"==",

"2.11.0"

]

]

},

{

"name": "et-xmlfile",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "faker",

"specs": [

[

"==",

"37.8.0"

]

]

},

{

"name": "fastparquet",

"specs": [

[

"==",

"2024.11.0"

]

]

},

{

"name": "fsspec",

"specs": [

[

"==",

"2025.9.0"

]

]

},

{

"name": "numpy",

"specs": [

[

"==",

"2.3.3"

]

]

},

{

"name": "openpyxl",

"specs": [

[

"==",

"3.1.5"

]

]

},

{

"name": "packaging",

"specs": [

[

"==",

"25.0"

]

]

},

{

"name": "pandas",

"specs": [

[

"==",

"2.3.3"

]

]

},

{

"name": "psutil",

"specs": [

[

"==",

"7.1.0"

]

]

},

{

"name": "python-dateutil",

"specs": [

[

"==",

"2.9.0.post0"

]

]

},

{

"name": "pytz",

"specs": [

[

"==",

"2025.2"

]

]

},

{

"name": "pyyaml",

"specs": [

[

"==",

"6.0.3"

]

]

},

{

"name": "six",

"specs": [

[

"==",

"1.17.0"

]

]

},

{

"name": "tzdata",

"specs": [

[

"==",

"2025.2"

]

]

}

],

"lcname": "tablefaker"

}