| Name | voltron-robotics |
|---|---|
| Version | 1.0.3 |
| Summary | Voltron: Language-Driven Representation Learning for Robotics. |
| upload_time | 2023-04-05 12:48:25 |
| requires_python | >=3.8 |
| license | MIT License Copyright (c) 2021-present, Siddharth Karamcheti and other contributors. Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. |
| keywords | robotics, representation learning, natural language processing, machine learning |
| requirements | No requirements were recorded. |

<div align="center">

<img src="https://raw.githubusercontent.com/siddk/voltron-robotics/main/docs/assets/voltron-banner.png" alt="Voltron Logo"/>

</div>

<div align="center">

[arXiv](https://arxiv.org/abs/2302.12766)
[PyTorch 1.12.0](https://pytorch.org/get-started/previous-versions/#v1120)
[Code style: black](https://github.com/psf/black)
[Ruff](https://github.com/charliermarsh/ruff)

</div>

---

# Language-Driven Representation Learning for Robotics

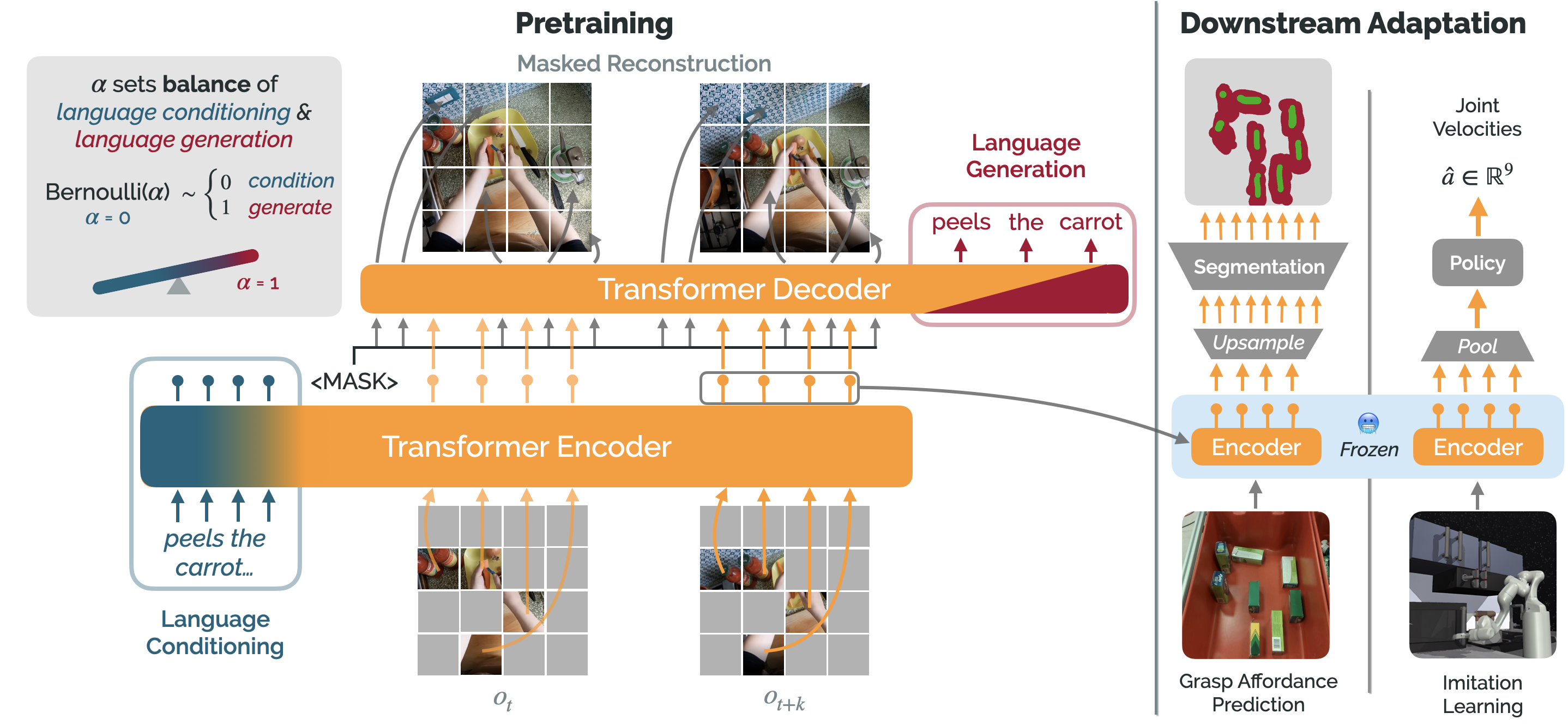

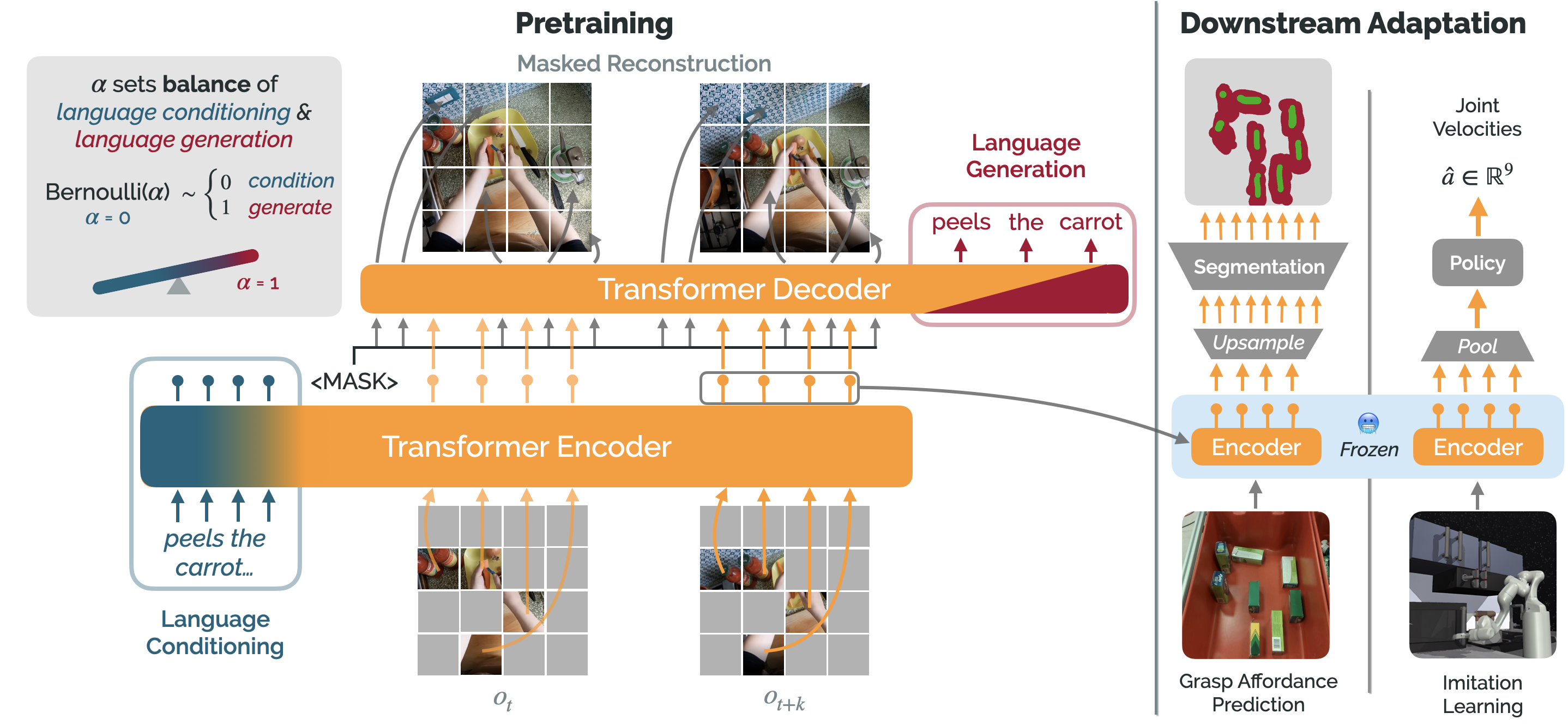

Package repository for Voltron: Language-Driven Representation Learning for Robotics. Provides code for loading pretrained Voltron, R3M, and MVP representations for adaptation to downstream tasks, as well as code for pretraining such representations on arbitrary datasets.

---

## Quickstart

This repository is built with PyTorch. Although PyTorch is specified as a package dependency, we highly recommend installing it yourself first, choosing the version (e.g., with accelerator support) that suits your hardware and environment manager (e.g., `conda`).

PyTorch installation instructions [can be found here](https://pytorch.org/get-started/locally/). This repository should work with PyTorch >= 1.12, but has only been thoroughly tested with PyTorch 1.12.0, Torchvision 0.13.0, and Torchaudio 0.12.0.
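Because the package has only been thoroughly tested against PyTorch 1.12, it can help to check the installed version before going further. The sketch below is a hypothetical helper (`torch_version_ok` is not part of `voltron`) that compares only the major and minor numbers of a version string such as `torch.__version__` against the 1.12 lower bound.

```python
import re


def torch_version_ok(version: str, minimum: tuple = (1, 12)) -> bool:
    """Return True if a version string is at least `minimum` as (major, minor)."""
    # Keep only the leading "major.minor" digits; this ignores patch numbers and
    # local/pre-release suffixes such as "+cu113".
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    # Tuple comparison orders (1, 9) < (1, 12) < (2, 0) correctly.
    return (major, minor) >= minimum


# Usage (assumes PyTorch is installed):
#   import torch
#   assert torch_version_ok(torch.__version__), "Voltron expects PyTorch >= 1.12"
```

Comparing integer tuples keeps the check dependency-free; for full PEP 440 handling, the `packaging` library's version parser would be a more robust choice.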

Once PyTorch has been properly installed, you can install this package via PyPI, and you're off!

```bash
pip install voltron-robotics
```

You can also install this package locally via an editable installation if you want to run the examples or extend the current functionality:

```bash
git clone https://github.com/siddk/voltron-robotics
cd voltron-robotics
pip install -e .
```

## Usage

Voltron Robotics (package: `voltron`) provides easy access to pretrained Voltron models (and reproductions of R3M and MVP) for use in a variety of downstream tasks. Using a pretrained Voltron model is easy:

```python
from torchvision.io import read_image

from voltron import instantiate_extractor, load

# Load a frozen Voltron (V-Cond) model & configure a vector extractor
vcond, preprocess = load("v-cond", device="cuda", freeze=True)
vector_extractor = instantiate_extractor(vcond)()

# Obtain & preprocess an image =>> can be from a dataset, or camera on a robot, etc.
# => Feel free to add any language if you have it (Voltron models work either way!)
# => `[None, ...]` adds a leading batch dimension of size 1
img = preprocess(read_image("examples/img/peel-carrot-initial.png"))[None, ...].to("cuda")
lang = ["peeling a carrot"]

# Extract both multimodal AND vision-only embeddings!
multimodal_embeddings = vcond(img, lang, mode="multimodal")
visual_embeddings = vcond(img, mode="visual")

# Use the `vector_extractor` to output dense vector representations for downstream applications!
# => Pass this representation to a model of your choice (object detector, control policy, etc.)
representation = vector_extractor(multimodal_embeddings)
```
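The model call returns a *sequence* of embeddings, and `vector_extractor` collapses that sequence into a single dense vector. The exact pooling that `instantiate_extractor` configures is not described here; purely for intuition, the pure-Python sketch below uses plain mean pooling as a simpler stand-in. It is not how `voltron` implements its extractor, and `mean_pool` is a hypothetical helper.

```python
from typing import List


def mean_pool(embeddings: List[List[float]]) -> List[float]:
    """Average a sequence of embeddings (seq_len x dim) into one dim-sized vector."""
    if not embeddings:
        raise ValueError("Cannot pool an empty sequence of embeddings")
    dim = len(embeddings[0])
    if any(len(token) != dim for token in embeddings):
        raise ValueError("All embeddings must share the same dimensionality")
    # Sum each feature dimension across the sequence, then divide by its length.
    return [sum(token[d] for token in embeddings) / len(embeddings) for d in range(dim)]


# Toy "sequence" of three 4-dimensional embeddings (values are made up).
tokens = [
    [1.0, 0.0, 2.0, 4.0],
    [3.0, 0.0, 2.0, 0.0],
    [2.0, 3.0, 2.0, 2.0],
]
print(mean_pool(tokens))  # [2.0, 1.0, 2.0, 2.0]
```

Mean pooling has no parameters, so it is easy to reason about; a learned pooling module can instead weight the most task-relevant tokens more heavily.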

Voltron representations can be used for a variety of applications. The [`voltron-evaluation`](https://github.com/siddk/voltron-evaluation) repository contains code for adapting Voltron representations to all of the downstream tasks from our paper (segmentation, object detection, control, etc.).

---

## API

The package `voltron` provides the following functionality for using and adapting existing representations:

#### `voltron.available_models()`

Returns the names of the available Voltron models. Currently, the following models (all trained in the paper) are available:

- `v-cond` – V-Cond (ViT-Small) trained on Sth-Sth; single-frame w/ language-conditioning.

- `v-dual` – V-Dual (ViT-Small) trained on Sth-Sth; dual-frame w/ language-conditioning.

- `v-gen` – V-Gen (ViT-Small) trained on Sth-Sth; dual-frame w/ language conditioning AND generation.

- `r-mvp` – R-MVP (ViT-Small); reproduction of [MVP](https://github.com/ir413/mvp) trained on Sth-Sth.

- `r-r3m-vit` – R-R3M (ViT-Small); reproduction of [R3M](https://github.com/facebookresearch/r3m) trained on Sth-Sth.

- `r-r3m-rn50` – R-R3M (ResNet-50); reproduction of [R3M](https://github.com/facebookresearch/r3m) trained on Sth-Sth.

- `v-cond-base` – V-Cond (ViT-Base) trained on Sth-Sth; larger (86M parameter) variant of V-Cond.

#### `voltron.load(name: str, device: str, freeze: bool, cache: str = "cache/")`

Returns the model along with the Torchvision transform it expects for preprocessing, where `name` is one of the strings returned by `voltron.available_models()`. This generally follows the same API as [OpenAI's CLIP](https://github.com/openai/CLIP).
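Since `name` must be one of the strings from `voltron.available_models()`, a caller might validate it up front so that a typo fails fast with a helpful message. The sketch below is hypothetical: `MODEL_DESCRIPTIONS` simply mirrors the list above, and `resolve_model_name` is not part of the `voltron` API.

```python
from typing import Dict

# Hypothetical mirror of the model list above; in real code, checking against
# `voltron.available_models()` directly avoids duplicating this list.
MODEL_DESCRIPTIONS: Dict[str, str] = {
    "v-cond": "V-Cond (ViT-Small), single-frame w/ language-conditioning",
    "v-dual": "V-Dual (ViT-Small), dual-frame w/ language-conditioning",
    "v-gen": "V-Gen (ViT-Small), dual-frame w/ language conditioning AND generation",
    "r-mvp": "R-MVP (ViT-Small), reproduction of MVP",
    "r-r3m-vit": "R-R3M (ViT-Small), reproduction of R3M",
    "r-r3m-rn50": "R-R3M (ResNet-50), reproduction of R3M",
    "v-cond-base": "V-Cond (ViT-Base), larger (86M parameter) variant of V-Cond",
}


def resolve_model_name(name: str) -> str:
    """Return `name` unchanged if it is a known model, else raise a ValueError."""
    if name not in MODEL_DESCRIPTIONS:
        choices = ", ".join(sorted(MODEL_DESCRIPTIONS))
        raise ValueError(f"Unknown model {name!r}; expected one of: {choices}")
    return name


print(resolve_model_name("v-cond"))  # v-cond
```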

---

Voltron models (`v-{cond, dual, gen, ...}`) returned by `voltron.load()` support the following:

#### `model(img: Tensor, lang: Optional[List[str]] = None, mode: str = "multimodal")`

Returns a sequence of embeddings output by the multimodal encoder. Note that `lang` can be `None`; Voltron models work fine without language. However, if you have any language (even a coarse task description), passing it will likely help.

The `mode` parameter (one of `"multimodal"` or `"visual"`) controls whether the output contains the fused image-patch and language embeddings, or only the image-patch embeddings.

**Note:** For the API of the non-Voltron models (e.g., R-MVP, R-R3M), see [`examples/verify.py`](examples/verify.py), which shows how to extract representations from *every* model.

### Adaptation

See [`examples/adapt.py`](examples/adapt.py) and the [`voltron-evaluation`](https://github.com/siddk/voltron-evaluation) repository for more examples of the various ways to adapt and use Voltron representations.
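Once `vector_extractor` has produced fixed-size vectors, one lightweight way to use them is nearest-neighbor lookup by cosine similarity, for example to find the stored frame most similar to a new observation. This is an illustrative assumption, not one of the adaptation recipes from `examples/adapt.py` or `voltron-evaluation`; the helper names are hypothetical and the toy vectors stand in for real representations.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("Vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("Cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def nearest_neighbor(query: List[float], bank: Dict[str, List[float]]) -> str:
    """Return the key in `bank` whose vector is most cosine-similar to `query`."""
    return max(bank, key=lambda key: cosine_similarity(query, bank[key]))


# Toy 3-D vectors standing in for stored representations (values are made up).
bank = {
    "peel-carrot": [0.9, 0.1, 0.0],
    "open-drawer": [0.0, 1.0, 0.2],
}
print(nearest_neighbor([1.0, 0.2, 0.1], bank))  # peel-carrot
```

Cosine similarity ignores vector magnitude, which makes it a common default for comparing learned embeddings; a trained head (e.g., a small policy network) is the heavier-weight alternative.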

---

## Contributing

Before committing to the repository, make sure to set up your dev environment! Here are the basic development environment setup guidelines:

+ Fork/clone the repository and perform an editable installation that includes the development dependencies (e.g., `pip install -e ".[dev]"`); this will install `black`, `ruff`, and `pre-commit`.

+ Install `pre-commit` hooks (`pre-commit install`).

+ Create a branch for the specific feature/issue, then open a PR against the upstream repository for review.

Additional Contribution Notes:

- This project has migrated to the recommended [`pyproject.toml`-based configuration for setuptools](https://setuptools.pypa.io/en/latest/userguide/quickstart.html). However, as some tools haven't yet adopted [PEP 660](https://peps.python.org/pep-0660/), we also provide a [`setup.py` file](https://setuptools.pypa.io/en/latest/userguide/pyproject_config.html).

- This package follows the [`flat-layout` structure](https://setuptools.pypa.io/en/latest/userguide/package_discovery.html#flat-layout) described in `setuptools`.

- Make sure to add any new dependencies to the `pyproject.toml` file!

---

## Repository Structure

High-level overview of repository/project file-tree:

+ `docs/` - Package documentation & assets - including project roadmap.

+ `voltron/` - Package source code; has all core utilities for model specification, loading, feature extraction, preprocessing, etc.

+ `examples/` - Standalone example scripts demonstrating various functionality (e.g., extracting different types of representations, adapting representations in various contexts, pretraining, amongst others).

+ `.pre-commit-config.yaml` - Pre-commit configuration file (sane defaults + `black` + `ruff`).

+ `LICENSE` - Code is made available under the MIT License.

+ `Makefile` - Top-level Makefile (by default, supports linting - checking & auto-fix); extend as needed.

+ `pyproject.toml` - Following PEP 621, this file has all project configuration details (including dependencies), as well as tool configurations (for `black` and `ruff`).

+ `README.md` - You are here!

Raw data

{

"_id": null,

"home_page": "",

"name": "voltron-robotics",

"maintainer": "",

"docs_url": null,

"requires_python": ">=3.8",

"maintainer_email": "",

"keywords": "robotics,representation learning,natural language processing,machine learning",

"author": "",

"author_email": "Siddharth Karamcheti <skaramcheti@cs.stanford.edu>",

"download_url": "https://files.pythonhosted.org/packages/37/51/7f6c4e2fada1b9232a813fd7dce04f6d62b7f5fb055c7256db9a61dbbd54/voltron-robotics-1.0.3.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": "MIT License Copyright (c) 2021-present, Siddharth Karamcheti and other contributors. Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. ",

"summary": "Voltron: Language-Driven Representation Learning for Robotics.",

"version": "1.0.3",

"split_keywords": [

"robotics",

"representation learning",

"natural language processing",

"machine learning"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "a82d6e67a816f3d8fb2e0e7f0b1cbc3be65805afd08ae17bc871a099f0a72943",

"md5": "e7a446aa1078354d310628e4a7776e70",

"sha256": "7a73b810b0e877c68befdd1cd6b03bd0fa6c8c54c051a0914bd2b0c1ef6724ea"

},

"downloads": -1,

"filename": "voltron_robotics-1.0.3-py3-none-any.whl",

"has_sig": false,

"md5_digest": "e7a446aa1078354d310628e4a7776e70",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.8",

"size": 93614,

"upload_time": "2023-04-05T12:48:23",

"upload_time_iso_8601": "2023-04-05T12:48:23.012583Z",

"url": "https://files.pythonhosted.org/packages/a8/2d/6e67a816f3d8fb2e0e7f0b1cbc3be65805afd08ae17bc871a099f0a72943/voltron_robotics-1.0.3-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "37517f6c4e2fada1b9232a813fd7dce04f6d62b7f5fb055c7256db9a61dbbd54",

"md5": "f017c4e5617f64f0e8b4f4d2ee40e703",

"sha256": "a2f028a0b92b5839e2b3f2a07d33cdb28cd9b96ccef73e1a833e1571a32d5fe8"

},

"downloads": -1,

"filename": "voltron-robotics-1.0.3.tar.gz",

"has_sig": false,

"md5_digest": "f017c4e5617f64f0e8b4f4d2ee40e703",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.8",

"size": 71031,

"upload_time": "2023-04-05T12:48:25",

"upload_time_iso_8601": "2023-04-05T12:48:25.079181Z",

"url": "https://files.pythonhosted.org/packages/37/51/7f6c4e2fada1b9232a813fd7dce04f6d62b7f5fb055c7256db9a61dbbd54/voltron-robotics-1.0.3.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2023-04-05 12:48:25",

"github": false,

"gitlab": false,

"bitbucket": false,

"lcname": "voltron-robotics"

}