#  jllama-py

## Main Tech Stack

### Frontend

Vue, ElementUI

### Backend

Python 3.11, pywebview, llama-cpp-python, transformers, PyTorch, Stable Diffusion

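## Introduction

jllama-py is the Python version of [jllama](https://github.com/zjwan461/jllama). Because it is built on Python, it adds several features on top of the original jllama. It is a desktop AI model toolkit that bundles **model download**, **model deployment**, **service monitoring**, **GGUF model quantization**, **GGUF split and merge**, **weight format conversion**, **model fine-tuning**, and **Stable Diffusion**. It lets users run models locally for inference, quantization, fine-tuning, and AIGC creation without having to master the underlying AI tooling.

## Limitations

jllama-py currently supports only large language models (LLMs); support for vision, audio, and other multimodal models may be considered in the future. jllama-py supports local inference on both Hugging Face and GGUF model files, but local deployment supports only GGUF weights. jllama-py does provide a tool to convert Hugging Face format models to GGUF. jllama-py runs only on Windows, and CUDA is the only supported GPU acceleration. For AIGC, Stable Diffusion 1.5 is supported.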

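## Installation and Usage

### 1. Install with pip

jllama-py uses llama-cpp-python as its inference backend, which requires a C++ build environment on the local machine. Installing llama-cpp-python first and jllama-py afterwards is recommended. For llama-cpp-python installation, see the guides below.

For the CPU build, see [llama-cpp-python-cpu](llama-cpp-python-cpu.md)

For the CUDA build, see [llama-cpp-python-cuda](llama-cpp-python-cuda.md)

You can also install an official llama-cpp-python wheel directly: [Releases · abetlen/llama-cpp-python](https://github.com/abetlen/llama-cpp-python/releases)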

```shell
# llama-cpp-python installation omitted
...
pip install jllama-py
# Run
jllama
```

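To install jllama-py directly, follow the commands below. For the CPU build, download `MinGW` or install `Visual Studio` beforehand; for the CUDA build, install `Visual Studio 2022` together with its `C++ runtime environment`.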

```shell
# CPU build (PowerShell); ${MINGW_DIR} is a placeholder for your MinGW install directory
$env:CMAKE_GENERATOR = "MinGW Makefiles"
$env:CMAKE_ARGS = "-DGGML_OPENBLAS=on -DCMAKE_C_COMPILER=${MINGW_DIR}/mingw64/bin/gcc.exe -DCMAKE_CXX_COMPILER=${MINGW_DIR}/mingw64/bin/g++.exe"
pip install jllama-py

# CUDA build
$env:CMAKE_ARGS = "-DGGML_CUDA=ON"
pip install jllama-py
```

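**For CUDA acceleration in PyTorch, additionally run the command below.** See the [official PyTorch instructions](https://pytorch.org/get-started/locally/). **Tip: 50-series GPUs use relatively new drivers, so use CUDA 12.8.** In jllama-py, PyTorch is used for inference on hf-format models and for model fine-tuning.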

```shell
# Replace ${YOUR_CUDA_VERSION} with your CUDA tag, e.g. cu128 for CUDA 12.8
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/${YOUR_CUDA_VERSION}
```
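After installing, it can be useful to check whether PyTorch actually sees the GPU. The sketch below uses `torch.cuda.is_available()` and `torch.cuda.get_device_name()`; the function name `torch_cuda_status` is only illustrative, and the import is done lazily so the check also runs on a machine without PyTorch.

```python
def torch_cuda_status() -> str:
    """Describe whether PyTorch is installed and whether it can see a CUDA device."""
    try:
        import torch  # imported lazily so the check also runs without PyTorch
    except ImportError:
        return "torch not installed"
    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.get_device_name(0)} (torch {torch.__version__})"
    return f"CPU only (torch {torch.__version__})"


if __name__ == "__main__":
    print(torch_cuda_status())
```

If the output says "CPU only" after a CUDA install, the CUDA tag in the index URL most likely did not match the local driver.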

### 2. Build and install from source

Install the base dependencies by running the following commands:

```shell
git clone https://github.com/zjwan461/jllama-py
cd jllama-py
pip install -r requirements.txt
cd ui
npm install
npm run build
# Copy the ui/dist folder to jllama/ui/dist
cd ../jllama
python main.py
```

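jllama-py uses llama-cpp-python as its inference backend, and the CPU build of llama-cpp-python is installed by default. To use CUDA-accelerated inference, see the guides below.

For the CPU build, see [llama-cpp-python-cpu](llama-cpp-python-cpu.md)

For the CUDA build, see [llama-cpp-python-cuda](llama-cpp-python-cuda.md)

By default, the base dependency installation installs the CPU build of the **pytorch** package. To install the CUDA build, run: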

```shell
pip install -r pytorch-cuda.txt
```

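## Features

### 1. System Monitoring

A simple system resource monitoring panel.

### 2. Model Management

jllama-py provides two sources for downloading model files: [Hugging Face](https://huggingface.co/) and [ModelScope](https://www.modelscope.cn/home). Given network conditions in mainland China, downloading from ModelScope is recommended.

Click the Add button in the model download menu and choose the download platform. The model name is up to you, but the repo must match the repository name on ModelScope, for example `unsloth/DeepSeek-R1-Distill-Qwen-1.5B-GGUF`. After you confirm, `Jllama` fetches the list of downloadable files from ModelScope. Select the GGUF weight file you need and download it.

You must also specify the model type: choose gguf for GGUF models and hf for Hugging Face format models.

In the model file list that pops up, select the files you want to download to start the online download. If you do not want to use jllama-py's online download feature, leave the online download switch unchecked and simply close the dialog.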
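The file-list step can be reproduced outside the UI. The sketch below is not jllama-py's own download code, which the source does not show. It uses `list_repo_files` from `huggingface_hub` (Hugging Face rather than ModelScope) and a hypothetical helper `gguf_files` to keep only the GGUF weights.

```python
def gguf_files(filenames: list[str]) -> list[str]:
    """Keep only GGUF weight files from a repository file listing, sorted by name."""
    return sorted(name for name in filenames if name.lower().endswith(".gguf"))


def list_remote_gguf(repo_id: str) -> list[str]:
    """List GGUF files in a Hugging Face repo (needs network access and huggingface_hub)."""
    from huggingface_hub import list_repo_files  # lazy import: optional dependency

    return gguf_files(list_repo_files(repo_id))


# Example call (requires network access):
# list_remote_gguf("unsloth/DeepSeek-R1-Distill-Qwen-1.5B-GGUF")
```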

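### 3. AI Inference and Local Deployment

#### AI Inference

AI inference is a tool for testing a model's capabilities locally; it does not support concurrency. Its purpose is to run a model quickly and handle simple AI Q&A. Although it also communicates through an HTTP API under the hood, it is not compatible with the OpenAI request format.

AI Chat:

Once the model inference service is running, click the `chat` button on the right side of the list to open the AI Chat page, where you can hold a simple Q&A conversation with the model.

#### Local Deployment

Local deployment supports only GGUF models. Under the hood it uses llama-cpp-python to start an OpenAI-compatible HTTP server. jllama-py maintains a llama-cpp-python configuration for local deployment, and you manage the deployed models by editing this configuration, either directly in jllama-py or through a local editor. The `执行部署` (run deployment) tab starts the OpenAI-compatible HTTP server locally. Once it is running, you can point RAG tools such as `Cherry Studio` and `Dify` at the local service. For how to write the configuration, see the Configuration and Multi-Model Support section of the llama-cpp-python documentation, [OpenAI Compatible Web Server - llama-cpp-python](https://llama-cpp-python.readthedocs.io/en/latest/server/).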
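As an illustration of the configuration and of what a client request looks like, here is a minimal sketch. The config keys (`host`, `port`, `models`, `model`, `model_alias`, `n_gpu_layers`, `n_ctx`) follow the llama-cpp-python server documentation. The model path, the alias, and port 8000 are assumptions for the example, and the helper names are hypothetical.

```python
import json
import urllib.request


def make_server_config(model_path: str, alias: str,
                       host: str = "127.0.0.1", port: int = 8000) -> dict:
    """Build a llama-cpp-python server config that serves a single GGUF model."""
    return {
        "host": host,
        "port": port,
        "models": [
            {
                "model": model_path,   # path to the .gguf file
                "model_alias": alias,  # name clients send in the "model" field
                "n_gpu_layers": -1,    # offload all layers to the GPU (CUDA build)
                "n_ctx": 2048,         # context window in tokens
            }
        ],
    }


def build_chat_request(base_url: str, alias: str, prompt: str) -> urllib.request.Request:
    """Prepare an OpenAI-style chat completion request for the local server."""
    payload = {"model": alias, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model path and alias, shown only to illustrate the shape.
    print(json.dumps(make_server_config("E:/models/example.gguf", "local-model"), indent=2))
    request = build_chat_request("http://127.0.0.1:8000", "local-model", "Hello")
    print(request.get_method(), request.full_url)
```

With the server running, passing the prepared request to `urllib.request.urlopen` returns the JSON completion. Tools such as Cherry Studio need only the base URL and the model alias.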

Configuring the jllama-py service in Cherry Studio

### 4. Splitting and Merging GGUF Model Weights

#### Split

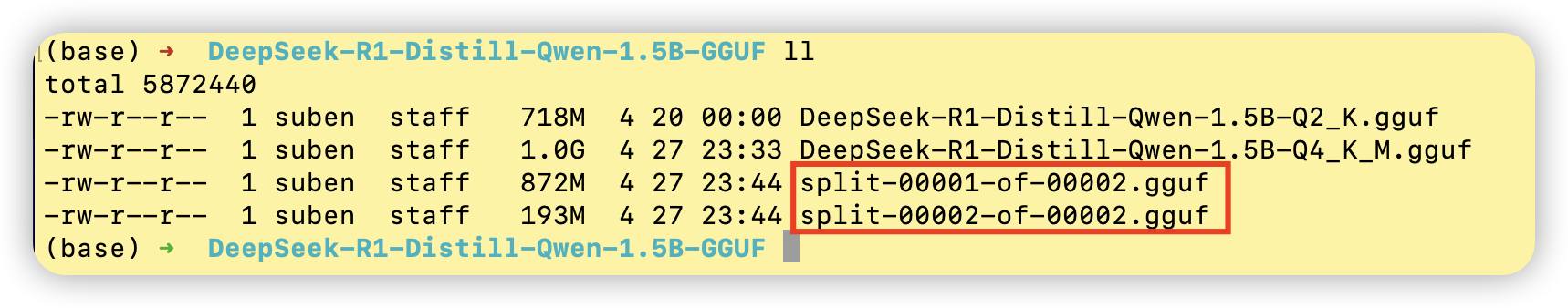

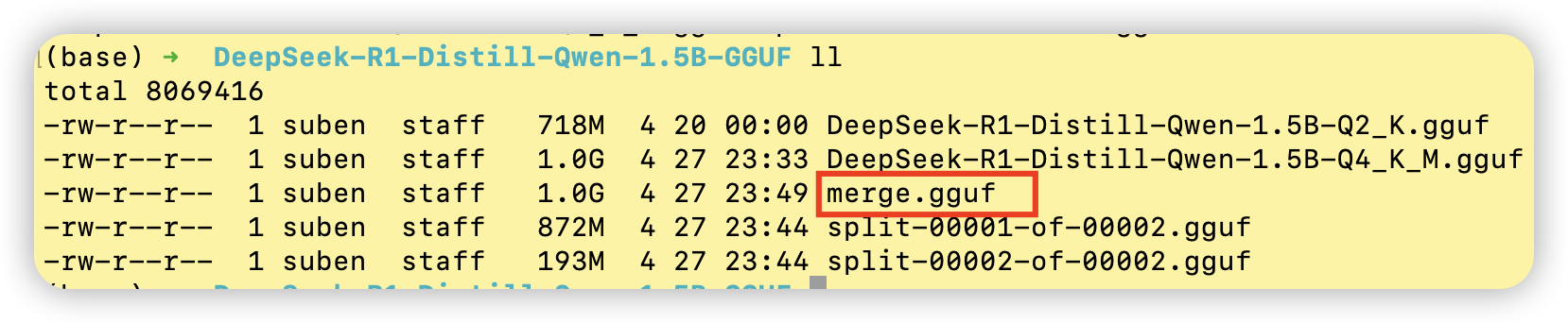

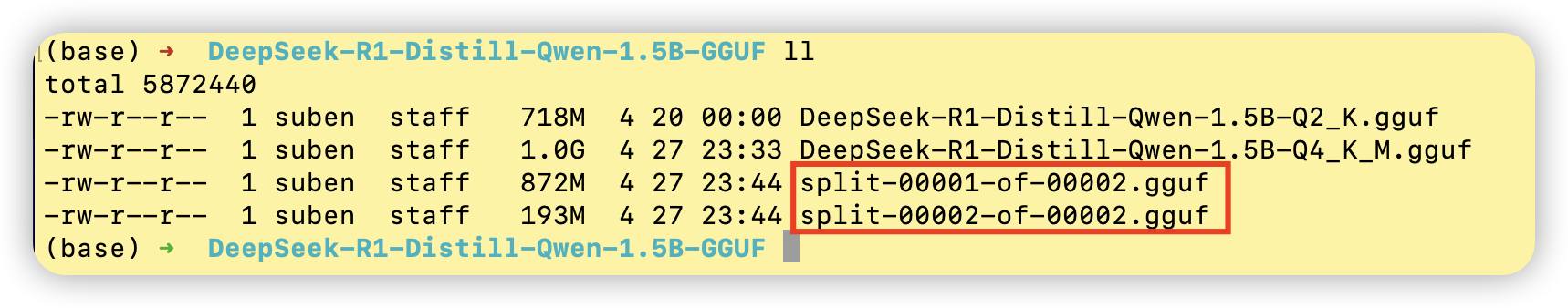

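Fill in the input file, split option, split parameter, and output file, then click Submit. jllama-py uses llama.cpp's `llama-gguf-split` tool to split the original GGUF file into multiple GGUF files, which makes very large model weight files easier to transfer and store.

#### Merge

Fill in the input file, that is, the path of the GGUF file to merge (the file named 00001-of-0000n), and the output file. jllama-py uses llama.cpp's `llama-gguf-split` tool to merge the separate GGUF files into a single GGUF model weight file.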

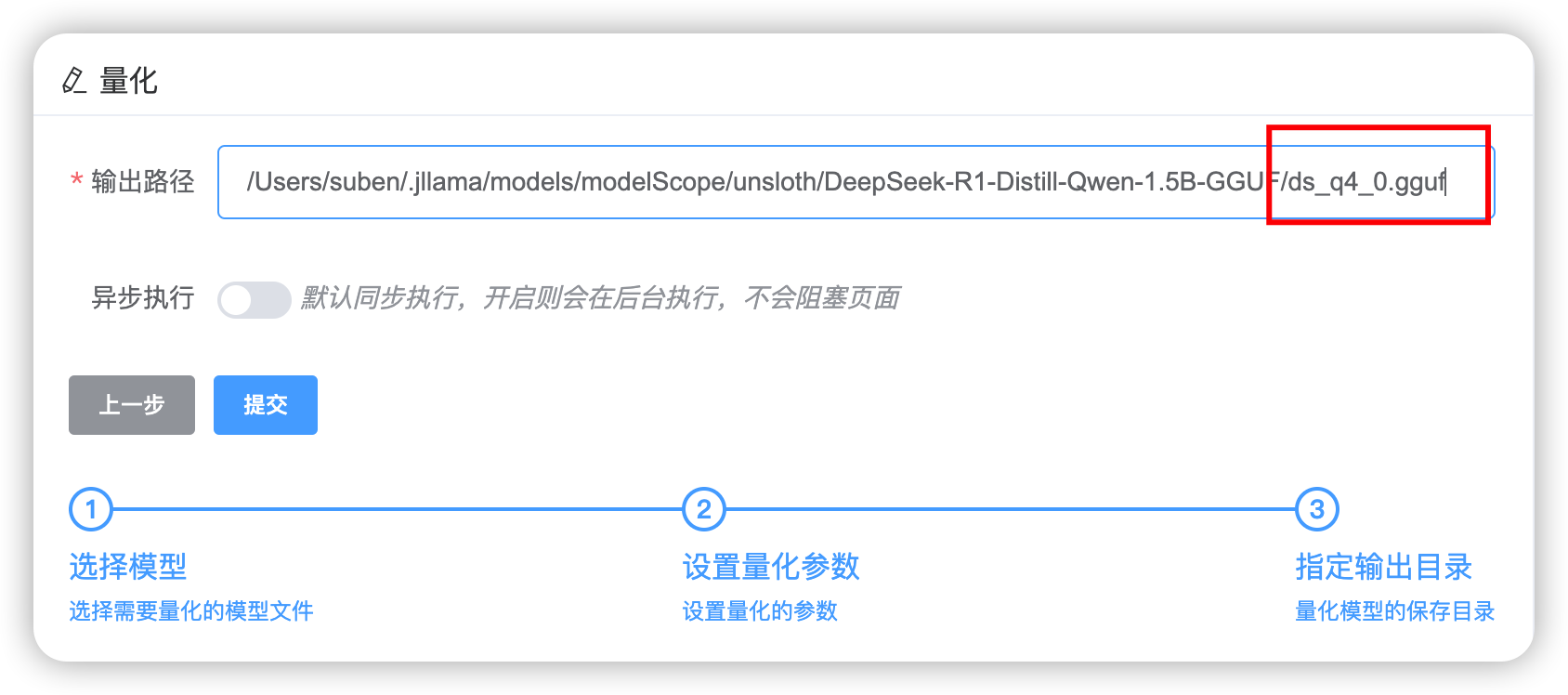

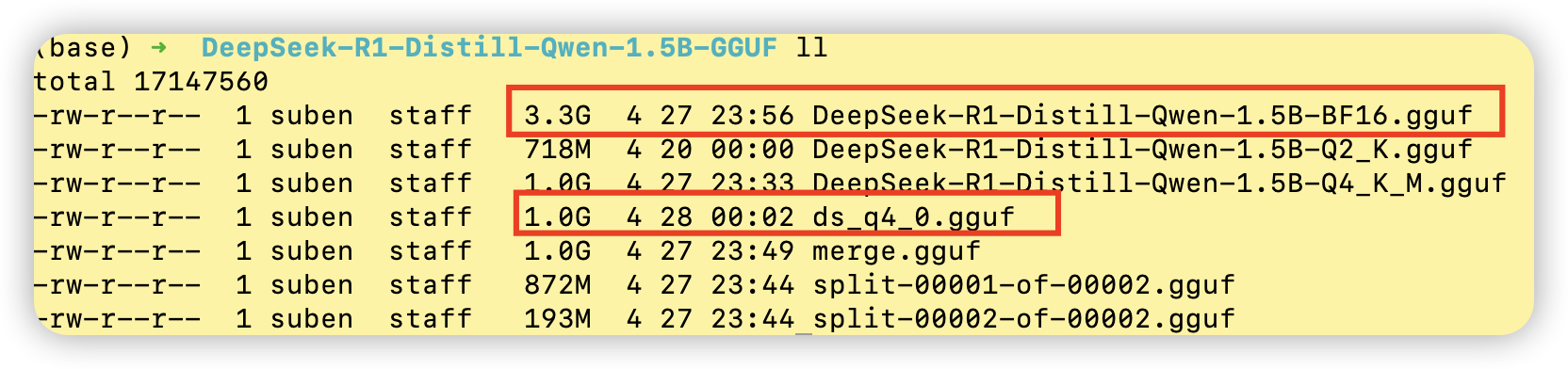

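### 5. Model Quantization

Deploying the weights of a model with a large parameter count locally places very high demands on hardware. Even with GPU acceleration such as CUDA, the VRAM requirement remains substantial. The VRAM needed to hold the weights of a 7B GGUF model is roughly as follows:

- **16-bit floating point (FP16)**: each parameter normally takes 2 bytes (16 bits). A 7B model has 7 billion parameters, so it needs about 7 billion × 2 bytes = 14 billion bytes, which is roughly 13.0 GiB of VRAM.

- **8-bit integer (INT8)**: with 8-bit quantization each parameter takes 1 byte (8 bits), so a 7B model needs about 7 billion × 1 byte = 7 billion bytes, which is roughly 6.5 GiB of VRAM.

Quantizing an FP16 model to INT8 therefore cuts VRAM usage roughly in half, which helps individuals deploy models locally on limited hardware.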
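The arithmetic above can be written as a small helper. This sketch covers the weights only; runtime overhead such as the KV cache is not included, and the function name is illustrative.

```python
GIB = 1024 ** 3  # bytes per GiB


def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / GIB


if __name__ == "__main__":
    for label, bits in (("FP16", 16), ("INT8", 8)):
        print(f"7B @ {label}: {weight_memory_gib(7e9, bits):.1f} GiB")
```

The same function works for other formats by passing their average bits per weight.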

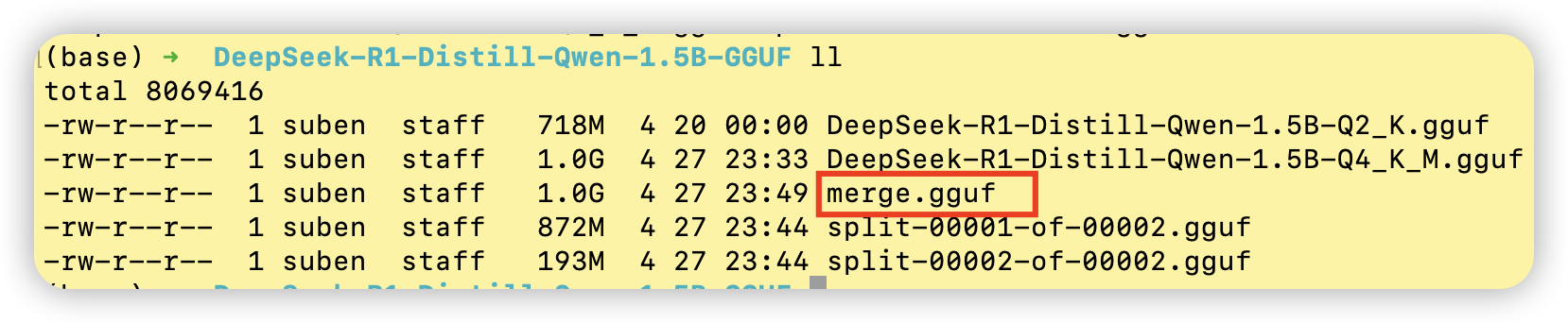

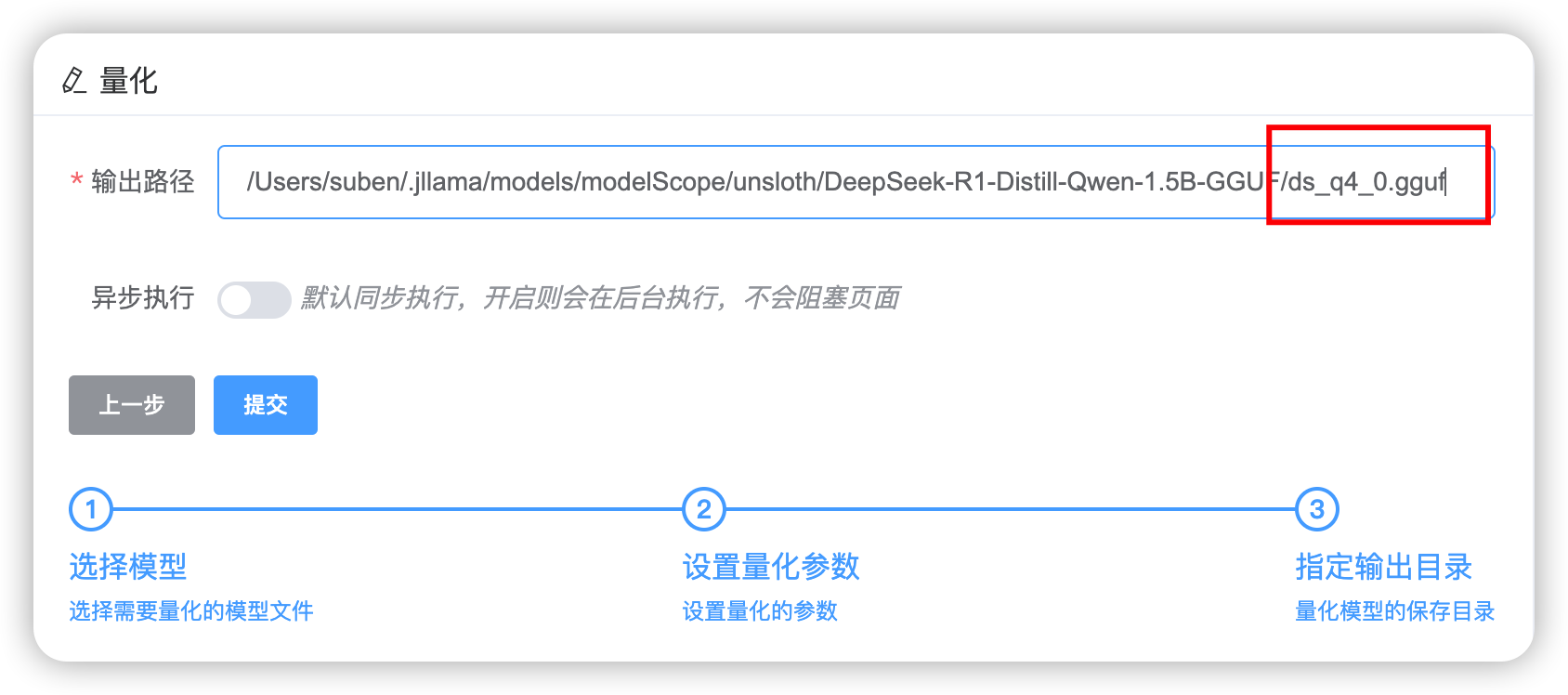

As an example, I prepared a BF16 weight file for deepseek-r1:1.5B and quantized it to Q4_0. After quantization, the file size dropped from 3.3 GB at BF16 to about 1 GB at Q4_0.

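### 6. Model Import

If you did not download a model online in section 2 (Model Management), jllama-py also lets you import a model manually.

### 7. Model Format Conversion

jllama-py's local deployment supports only the GGUF format. To deploy a model in the native transformers format, its .safetensors files must first be converted to GGUF. jllama-py does this through a built-in script.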
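The source does not show jllama-py's built-in script. As a point of comparison, llama.cpp ships `convert_hf_to_gguf.py`, and a conversion with that script could be assembled as below. Treat the script location and the helper name `convert_command` as assumptions.

```python
import sys


def convert_command(model_dir: str, outfile: str, outtype: str = "f16",
                    script: str = "convert_hf_to_gguf.py") -> list[str]:
    """Build the argv for llama.cpp's Hugging Face to GGUF converter."""
    # model_dir is the folder holding config.json and the .safetensors files.
    return [sys.executable, script, model_dir, "--outfile", outfile, "--outtype", outtype]


if __name__ == "__main__":
    # Hypothetical paths, shown only to illustrate the shape of the command.
    print(convert_command("E:/models/my-hf-model", "E:/models/my-model-f16.gguf"))
```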

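### 8. Model Fine-Tuning

#### Local Fine-Tuning

This feature places high demands on the local machine; a GPU with 16 GB or more of VRAM is recommended. For the configuration options, see the parameter notes on the right side of the page. Local fine-tuning supports only LoRA. For full-parameter or freeze fine-tuning, use the llamafactory mode. Before fine-tuning, you can also use the code preview feature to review the generated fine-tuning code.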
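To see why LoRA is the practical choice on a single consumer GPU, it helps to count the parameters it trains. For a frozen weight matrix of shape `d_out x d_in`, LoRA learns two small matrices of rank `r`, adding `r * (d_in + d_out)` trainable parameters. The sketch below illustrates that formula; the 4096 x 4096 layer and rank 8 are hypothetical values, not jllama-py defaults.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters LoRA adds for one d_out x d_in weight: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


def trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Share of trainable parameters relative to the frozen full matrix."""
    return lora_trainable_params(d_in, d_out, rank) / (d_in * d_out)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 projection adapted with rank 8.
    print(lora_trainable_params(4096, 4096, 8))
    print(f"{trainable_fraction(4096, 4096, 8):.4%}")
```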

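#### Remote Fine-Tuning

Remote fine-tuning requires the relevant Python packages to be installed on the remote machine beforehand. See the requirements.txt provided below.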

```properties
accelerate==1.8.1
aiohappyeyeballs==2.6.1
aiohttp==3.12.13
aiosignal==1.3.2
attrs==25.3.0
certifi==2025.6.15
charset-normalizer==3.4.2
datasets==3.6.0
dill==0.3.8
filelock==3.13.1
frozenlist==1.7.0
fsspec==2024.6.1
hf-xet==1.1.5
huggingface-hub==0.33.1
idna==3.10
Jinja2==3.1.4
MarkupSafe==2.1.5
mpmath==1.3.0
multidict==6.6.2
multiprocess==0.70.16
networkx==3.3
numpy==2.1.2
nvidia-cublas-cu12==12.8.3.14
nvidia-cuda-cupti-cu12==12.8.57
nvidia-cuda-nvrtc-cu12==12.8.61
nvidia-cuda-runtime-cu12==12.8.57
nvidia-cudnn-cu12==9.7.1.26
nvidia-cufft-cu12==11.3.3.41
nvidia-cufile-cu12==1.13.0.11
nvidia-curand-cu12==10.3.9.55
nvidia-cusolver-cu12==11.7.2.55
nvidia-cusparse-cu12==12.5.7.53
nvidia-cusparselt-cu12==0.6.3
nvidia-nccl-cu12==2.26.2
nvidia-nvjitlink-cu12==12.8.61
nvidia-nvtx-cu12==12.8.55
packaging==25.0
pandas==2.3.0
peft==0.15.2
pillow==11.0.0
propcache==0.3.2
psutil==7.0.0
pyarrow==20.0.0
python-dateutil==2.9.0.post0
pytz==2025.2
PyYAML==6.0.2
regex==2024.11.6
requests==2.32.4
safetensors==0.5.3
six==1.17.0
sympy==1.13.3
tokenizers==0.21.2
torch==2.7.1+cu128
torchaudio==2.7.1+cu128
torchvision==0.22.1+cu128
tqdm==4.67.1
transformers==4.53.0
triton==3.3.1
typing_extensions==4.12.2
tzdata==2025.2
urllib3==2.5.0
xxhash==3.5.0
yarl==1.20.1
```

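Before fine-tuning, fill in the fine-tuning parameters as required, then click the remote fine-tuning button. In the dialog that pops up, enter the remote Linux server details, the fine-tuning directory, and the Python installation directory.

#### llamafactory Fine-Tuning

jllama-py bundles llamafactory 0.9.3. For details on llamafactory, see the official [LLaMA Factory](https://llamafactory.readthedocs.io/zh-cn/latest/getting_started/installation.html) documentation.

### 9. AIGC

#### Stable Diffusion Text-to-Image

jllama-py includes a simple implementation of Stable Diffusion 1.5 text-to-image. You can generate images from a short prompt and a few basic parameters. The steps are as follows.

1. Install the SD base environment, that is, download the SD base model. How long this takes depends on your network. You can also download the SD 1.5 model manually and place it in the configured model directory.

2. Fill in the prompt and the other parameters, then click the button to generate the image.

#### Stable Diffusion Image-to-Image

This works like text-to-image, except that you also upload a reference image and set one extra parameter, the `变化率` (variation rate). The higher the rate, the more freedom the AI has; the lower the rate, the more closely the result follows the details of the original image.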
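One way to build intuition for the variation rate: assuming it maps to the `strength` argument of the diffusers image-to-image pipeline (an assumption, since the source does not say how jllama-py passes it on), `strength` decides how many of the requested denoising steps actually run on the noised reference image. The sketch below mirrors that rule; the function name is illustrative.

```python
def img2img_steps(num_inference_steps: int, strength: float) -> int:
    """Denoising steps actually run by an img2img pipeline for a strength in [0, 1]."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # Low strength: little noise is added and few steps run, so the output stays
    # close to the reference. Strength 1.0 runs every step and mostly ignores it.
    return min(int(num_inference_steps * strength), num_inference_steps)


if __name__ == "__main__":
    for strength in (0.25, 0.5, 1.0):
        print(strength, img2img_steps(50, strength))
```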

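## Development Setup

In the configuration file `${USER}/jllama/config.json` under the current user's home directory, set `model` to `dev` and set the local model storage directory in the `ai_config` section. If you need a network proxy, set the proxy addresses in the `proxy` section (this is required when downloading models from Hugging Face).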

```json
{
  "db_url": "sqlite:///db/jllama.db",
  "server": {
    "host": "127.0.0.1",
    "port": 5000
  },
  "model": "dev",
  "auto_start_dev_server": false,
  "app_name": "jllama",
  "app_width": 1366,
  "app_height": 768,
  "ai_config": {
    "model_save_dir": "E:/models",
    "llama_factory_port": 7860
  },
  "proxy": {
    "http_proxy": "",
    "https_proxy": ""
  },
  "aigc": {
    "min_seed": 1,
    "max_seed": 9999999999,
    "log_step": 5
  }
}
```
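The same change can be scripted instead of edited by hand. This is a minimal sketch that assumes the file lives at `jllama/config.json` under the home directory, as described above. The helper names are hypothetical, and `update_config_file` should be called only after jllama has created the file.

```python
import json
from pathlib import Path

CONFIG_PATH = Path.home() / "jllama" / "config.json"


def to_dev_config(config: dict, model_dir: str, proxy: str = "") -> dict:
    """Return a copy of the config switched to dev mode with the given model directory."""
    updated = json.loads(json.dumps(config))  # deep copy via a JSON round trip
    updated["model"] = "dev"
    updated.setdefault("ai_config", {})["model_save_dir"] = model_dir
    if proxy:
        updated["proxy"] = {"http_proxy": proxy, "https_proxy": proxy}
    return updated


def update_config_file(model_dir: str, proxy: str = "", path: Path = CONFIG_PATH) -> None:
    """Rewrite config.json in place with the dev settings applied."""
    config = json.loads(path.read_text(encoding="utf-8"))
    new_text = json.dumps(to_dev_config(config, model_dir, proxy), indent=2)
    path.write_text(new_text, encoding="utf-8")
```

Calling `update_config_file("E:/models")` applies the settings shown in the JSON above. Pass a proxy address as the second argument when Hugging Face downloads need one.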

Go to the ui directory, install the dependencies, and start the frontend with npm:

```shell
cd ui
npm install
npm run serve
```

Run the main program:

```shell
# Install the requirements, as in the build-from-source section above
pip install -r requirements.txt
python main.py
```

Raw data

{

"_id": null,

"home_page": "https://github.com/zjwan461/jllama-py",

"name": "jllama-py",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.11",

"maintainer_email": null,

"keywords": "gguf AI huggingface modelscope pytorch transformers llama_cpp llamafactory",

"author": "Jerry",

"author_email": "826935261@qq.com",

"download_url": "https://files.pythonhosted.org/packages/2d/db/5c35aa0ab9d5f0810b867165f22027810876d18065e3376edf9a2dd1070a/jllama_py-1.1.13.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "MIT",

"summary": "A simple AI Tool set application",

"version": "1.1.13",

"project_urls": {

"Homepage": "https://github.com/zjwan461/jllama-py"

},

"split_keywords": [

"gguf",

"ai",

"huggingface",

"modelscope",

"pytorch",

"transformers",

"llama_cpp",

"llamafactory"

],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "c7642d3b054c82f865df42a0e853e91948a9f7ced272eb0afc89f861682ed0f1",

"md5": "49c63739b6147888043002bad3d6544b",

"sha256": "57bc3f1f1d32b9eba7fcc61462969126f05d974a845d390e0f9838acef20e2b9"

},

"downloads": -1,

"filename": "jllama_py-1.1.13-py3-none-any.whl",

"has_sig": false,

"md5_digest": "49c63739b6147888043002bad3d6544b",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.11",

"size": 16850616,

"upload_time": "2025-07-19T05:47:12",

"upload_time_iso_8601": "2025-07-19T05:47:12.298078Z",

"url": "https://files.pythonhosted.org/packages/c7/64/2d3b054c82f865df42a0e853e91948a9f7ced272eb0afc89f861682ed0f1/jllama_py-1.1.13-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "2ddb5c35aa0ab9d5f0810b867165f22027810876d18065e3376edf9a2dd1070a",

"md5": "75b960af13f0b79e0eb4bc981c7757a6",

"sha256": "56e01ea577863a61f7abd0fee7185aaa0fc5527745a590e2a53399fdf7797b7f"

},

"downloads": -1,

"filename": "jllama_py-1.1.13.tar.gz",

"has_sig": false,

"md5_digest": "75b960af13f0b79e0eb4bc981c7757a6",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.11",

"size": 16730580,

"upload_time": "2025-07-19T05:47:27",

"upload_time_iso_8601": "2025-07-19T05:47:27.360745Z",

"url": "https://files.pythonhosted.org/packages/2d/db/5c35aa0ab9d5f0810b867165f22027810876d18065e3376edf9a2dd1070a/jllama_py-1.1.13.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-07-19 05:47:27",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "zjwan461",

"github_project": "jllama-py",

"travis_ci": false,

"coveralls": false,

"github_actions": false,

"requirements": [

{

"name": "pywebview",

"specs": [

[

"==",

"5.4"

]

]

},

{

"name": "flask",

"specs": [

[

"==",

"3.1.1"

]

]

},

{

"name": "flask-cors",

"specs": [

[

"==",

"6.0.1"

]

]

},

{

"name": "requests",

"specs": [

[

"==",

"2.32.3"

]

]

},

{

"name": "psutil",

"specs": [

[

"==",

"7.0.0"

]

]

},

{

"name": "GPUtil",

"specs": [

[

"==",

"1.4.0"

]

]

},

{

"name": "SQLAlchemy",

"specs": [

[

"==",

"2.0.41"

]

]

},

{

"name": "modelscope",

"specs": [

[

"==",

"1.26.0"

]

]

},

{

"name": "orjson",

"specs": [

[

"==",

"3.10.18"

]

]

},

{

"name": "transformers",

"specs": [

[

"==",

"4.52.4"

]

]

},

{

"name": "accelerate",

"specs": [

[

"==",

"1.7.0"

]

]

},

{

"name": "addict",

"specs": [

[

"==",

"2.4.0"

]

]

},

{

"name": "WMI",

"specs": [

[

"==",

"1.5.1"

]

]

},

{

"name": "hf_xet",

"specs": [

[

"==",

"1.1.5"

]

]

},

{

"name": "torch",

"specs": [

[

"~=",

"2.7.1"

]

]

},

{

"name": "numpy",

"specs": [

[

"~=",

"1.26.4"

]

]

},

{

"name": "tqdm",

"specs": [

[

"~=",

"4.67.1"

]

]

},

{

"name": "sentencepiece",

"specs": [

[

"~=",

"0.2.0"

]

]

},

{

"name": "pyyaml",

"specs": [

[

"~=",

"6.0.2"

]

]

},

{

"name": "huggingface-hub",

"specs": [

[

"~=",

"0.33.0"

]

]

},

{

"name": "uvicorn",

"specs": [

[

"~=",

"0.34.3"

]

]

},

{

"name": "datasets",

"specs": [

[

"~=",

"3.6.0"

]

]

},

{

"name": "bitsandbytes",

"specs": [

[

"~=",

"0.46.0"

]

]

},

{

"name": "peft",

"specs": [

[

"~=",

"0.15.2"

]

]

},

{

"name": "safetensors",

"specs": [

[

"~=",

"0.5.3"

]

]

},

{

"name": "jinja2",

"specs": [

[

"~=",

"3.1.6"

]

]

},

{

"name": "paramiko",

"specs": [

[

"~=",

"3.5.1"

]

]

},

{

"name": "scp",

"specs": [

[

"~=",

"0.15.0"

]

]

},

{

"name": "llamafactory",

"specs": [

[

"==",

"0.9.3"

]

]

},

{

"name": "llama-cpp-python",

"specs": [

[

"~=",

"0.3.2"

]

]

},

{

"name": "matplotlib",

"specs": [

[

"~=",

"3.10.3"

]

]

},

{

"name": "diffusers",

"specs": [

[

"~=",

"0.34.0"

]

]

},

{

"name": "pillow",

"specs": [

[

"~=",

"11.3.0"

]

]

},

{

"name": "transformers",

"specs": [

[

"~=",

"4.52.4"

]

]

},

{

"name": "setuptools",

"specs": [

[

"~=",

"78.1.1"

]

]

},

{

"name": "insightface",

"specs": [

[

"~=",

"0.7.3"

]

]

},

{

"name": "ip_adapterv",

"specs": [

[

"~=",

"0.1.0"

]

]

},

{

"name": "onnxruntime",

"specs": [

[

"~=",

"1.22.1"

]

]

}

],

"lcname": "jllama-py"

}