# Market Making RL Agent

Docs: https://Aviral1303.github.io/Market-Making-RL-Agent/

## Why this is useful

- End-to-end, deployable research stack: config-driven envs, MLflow tracking, CLI, REST API with async jobs, and DuckDB persistence

- Microstructure features that matter: OU price dynamics with regime switching, probabilistic fills, fees/slippage, multi-asset correlation, depth-aware quoting, size decisions

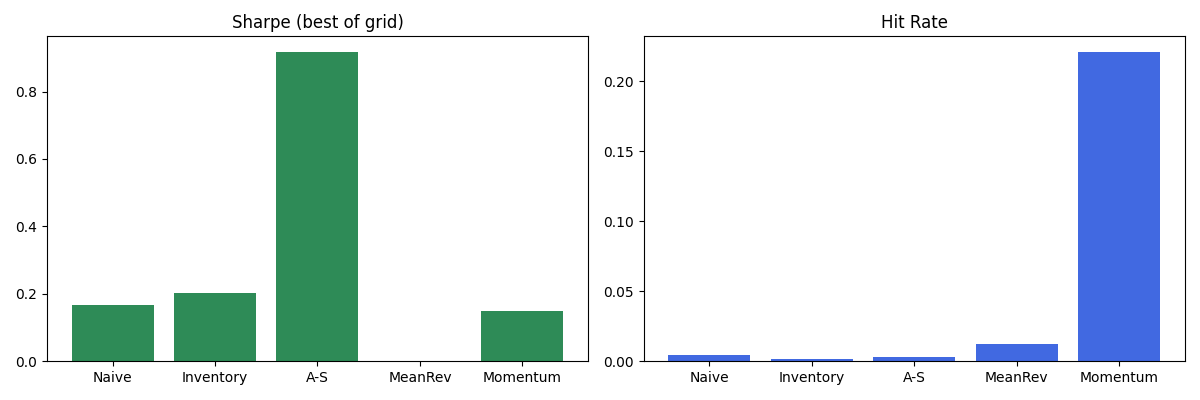

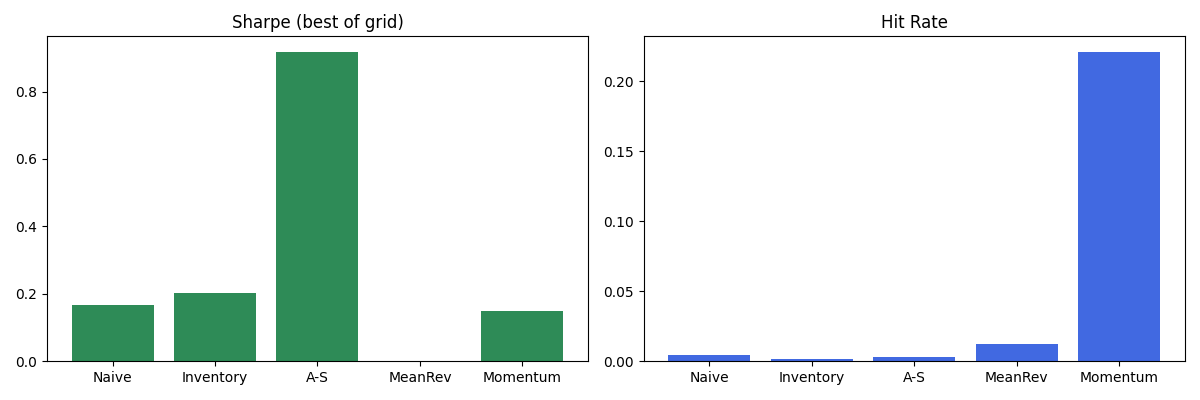

- Baselines and RL: Naive, Inventory-aware, Avellaneda–Stoikov, PPO; hyperparameter search with Optuna
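The OU-with-regime-switching price dynamics listed above can be sketched as an Euler-discretized mean-reverting process whose volatility flips between a calm state and a high-volatility state. This is a minimal illustration, not the package's simulator: the parameter names mirror the `market.ou` and `market.vol_regime` keys in the configuration examples, but the update rule and the function `simulate_ou_regime` are assumptions.

```python
import math
import random


def simulate_ou_regime(steps, mu=100.0, kappa=0.05, sigma=0.5, dt=1.0,
                       high_sigma_scale=3.0, switch_prob=0.02, x0=None, seed=0):
    """Illustrative Euler-discretized OU mid-price with a two-state volatility regime."""
    rng = random.Random(seed)
    x = mu if x0 is None else x0
    high_vol = False
    path, regimes = [x], [high_vol]
    for _ in range(steps):
        # With probability switch_prob, flip between the calm and high-volatility regimes.
        if rng.random() < switch_prob:
            high_vol = not high_vol
        step_sigma = sigma * high_sigma_scale if high_vol else sigma
        # Pull toward mu at rate kappa, then add a Gaussian shock scaled by sqrt(dt).
        x += kappa * (mu - x) * dt + step_sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        path.append(x)
        regimes.append(high_vol)
    return path, regimes


if __name__ == "__main__":
    prices, regimes = simulate_ou_regime(1000, seed=7)
    print(f"final mid {prices[-1]:.2f}, high-vol share {sum(regimes) / len(regimes):.1%}")
```

Setting `sigma=0.0` is a quick sanity check: the path then decays geometrically toward `mu` at rate `kappa` per step.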

## At a glance

### Benchmarks (best-of grid)

## 60s Quickstart

```
pip install mmrl
mmrl backtest
mmrl evaluate  # Naive vs Rule-Based vs A–S vs PPO (PPO requires the rl extra)
mmrl analyze strategy_comparison.csv --plot  # analyze your returns file
```
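`mmrl analyze` summarizes a returns file. The columns of `strategy_comparison.csv` and the exact metrics the CLI reports are not documented here, so the following is a stand-alone sketch of two common summary statistics, the Sharpe ratio and maximum drawdown, computed from a plain list of per-step PnL values. The function name `summarize_pnl` is hypothetical.

```python
import math
import statistics


def summarize_pnl(step_pnl, periods_per_year=1):
    """Mean, volatility, Sharpe ratio and max drawdown of a per-step PnL series.

    periods_per_year=1 gives an unannualized Sharpe; pass e.g. 252 for daily data.
    """
    mean = statistics.fmean(step_pnl)
    std = statistics.stdev(step_pnl)  # sample standard deviation, needs >= 2 points
    sharpe = mean / std * math.sqrt(periods_per_year) if std > 0 else float("nan")
    # Max drawdown: largest fall of cumulative PnL below its running peak (peak starts at 0).
    equity = peak = max_dd = 0.0
    for pnl in step_pnl:
        equity += pnl
        peak = max(peak, equity)
        max_dd = max(max_dd, peak - equity)
    return {"mean": mean, "std": std, "sharpe": sharpe, "max_drawdown": max_dd}


if __name__ == "__main__":
    print(summarize_pnl([1.0, -2.0, 3.0, -1.0, 2.0]))
```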

## Examples

- Backtest + HTML report in one go

```
mmrl backtest
mmrl report results/$(ls -t results | head -n 1) --out report.html
```

- Run grid search and visualize

```
mmrl grid
python3 analysis/plot_grid_heatmaps.py
```
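A parameter grid is essentially the Cartesian product of candidate values applied to a base config. The sketch below expands such a grid with `itertools.product`; the dotted-key convention (`"execution.alpha"`) and the helper `expand_grid` are assumptions for illustration, and the package's own grid settings (such as the `alpha_grid` key used in the API example) may be structured differently.

```python
import copy
import itertools


def expand_grid(base_cfg, grid):
    """Yield one config per combination of grid values.

    `grid` maps a dotted key path (e.g. "execution.alpha") to the values to try.
    """
    keys = list(grid)
    for combo in itertools.product(*(grid[k] for k in keys)):
        cfg = copy.deepcopy(base_cfg)  # never mutate the shared base config
        for dotted, value in zip(keys, combo):
            *parents, leaf = dotted.split(".")
            node = cfg
            for part in parents:
                node = node.setdefault(part, {})
            node[leaf] = value
        yield cfg


if __name__ == "__main__":
    base = {"agent": {"spread": 0.1}, "execution": {"alpha": 1.5}}
    grid = {"execution.alpha": [1.0, 1.5], "agent.spread": [0.05, 0.1, 0.2]}
    for cfg in expand_grid(base, grid):
        print(cfg["execution"]["alpha"], cfg["agent"]["spread"])
```

A "best-of grid" selection would then run each config and keep the one with the best value of a chosen metric, for example with `max(results, key=...)`.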

- Compare agents (rule-based agents, plus PPO if the `rl` extra is installed)

```
mmrl evaluate
```
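One of the compared baselines is Avellaneda–Stoikov (A–S). For reference, the textbook A–S quotes (Avellaneda and Stoikov, 2008) shift a reservation price against inventory and add a closed-form optimal spread. How the package's baseline sets `gamma`, `k` and the horizon is not documented in this README, so `avellaneda_stoikov_quotes` and its parameters are an illustrative assumption.

```python
import math


def avellaneda_stoikov_quotes(mid, inventory, gamma, sigma, k, time_left):
    """Bid/ask from the Avellaneda-Stoikov closed-form approximation.

    gamma: risk aversion, sigma: mid-price volatility, k: decay of fill intensity
    with distance from mid, time_left: remaining horizon (T - t).
    """
    # Reservation price shifts the quote center against the current inventory.
    reservation = mid - inventory * gamma * sigma**2 * time_left
    # Total optimal spread: inventory-risk term plus order-flow term.
    spread = gamma * sigma**2 * time_left + (2.0 / gamma) * math.log(1.0 + gamma / k)
    return reservation - spread / 2.0, reservation + spread / 2.0


if __name__ == "__main__":
    print(avellaneda_stoikov_quotes(mid=100.0, inventory=5, gamma=0.1, sigma=2.0, k=1.5, time_left=1.0))
```

Note that inventory moves both quotes together while leaving the spread unchanged; only `gamma`, `sigma`, `k` and the remaining time affect the width.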

- Programmatic backtest

```python
from mmrl import run_backtest

cfg = {
    "run_tag": "script", "seed": 7, "steps": 1000, "output_dir": "results",
    "agent": {"spread": 0.1, "inventory_sensitivity": 0.05},
    # OU mid-price dynamics with volatility regime switching
    "market": {
        "ou_enabled": True,
        "ou": {"mu": 100, "kappa": 0.05, "sigma": 0.5, "dt": 1.0},
        "vol_regime": {"enabled": True, "high_sigma_scale": 3.0, "switch_prob": 0.02},
    },
    "execution": {"base_arrival_rate": 1.0, "alpha": 1.5},
    # Fee and slippage settings, in basis points
    "fees": {"fee_bps": 1.0, "slippage_bps": 2.0, "maker_bps": -0.5, "taker_bps": 1.0},
}

run_dir, metrics = run_backtest(cfg)
print(run_dir, metrics)
```
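The config exposes `agent.spread` and `agent.inventory_sensitivity`, but the README does not spell out how the inventory-aware agent combines them. A common rule, sketched here as an assumption, quotes a fixed total spread around a mid price that is skewed linearly by inventory; the function `inventory_aware_quotes` is hypothetical.

```python
def inventory_aware_quotes(mid, inventory, spread=0.1, inventory_sensitivity=0.05):
    """Hypothetical linear-skew rule: fixed total spread around a mid shifted by inventory."""
    # A long position (inventory > 0) lowers both quotes so the ask is more likely to fill.
    center = mid - inventory_sensitivity * inventory
    half_spread = spread / 2.0
    return center - half_spread, center + half_spread


if __name__ == "__main__":
    for q in (-2, 0, 2):
        print(q, inventory_aware_quotes(100.0, q))
```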

- Plug in your own data (CSV example)

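```python
from env.simple_lob_env import SimpleLOBEnv
from mmrl.data import load_adapter

env = SimpleLOBEnv()

# Load the CSV adapter from its "module:Class" path; `mapping` relates
# the adapter's fields to the column names in the CSV file.
adapter = load_adapter(
    'mmrl.data.csv_adapter:CSVAdapter',
    path='data/example.csv',
    mapping={'mid_price': 'mid', 'best_bid': 'bid', 'best_ask': 'ask'},
)

# Replay the file tick by tick through the environment
for tick in adapter.iter_ticks():
    state = env.step_from_tick(tick)
```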
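To make the tick-replay idea concrete without the package installed, here is a hypothetical stand-in for a CSV adapter built on `csv.DictReader`. It is not `mmrl.data.csv_adapter.CSVAdapter`; in particular, the assumption that `mapping` goes from tick field name to CSV column name is ours, and the class name `SimpleCSVTicks` is illustrative.

```python
import csv
import io
from typing import Iterator, TextIO


class SimpleCSVTicks:
    """Hypothetical CSV tick reader, not mmrl's CSVAdapter.

    `mapping` is assumed to map tick field names to CSV column names.
    """

    def __init__(self, handle: TextIO, mapping: dict[str, str]):
        self.handle = handle
        self.mapping = mapping

    def iter_ticks(self) -> Iterator[dict[str, float]]:
        # Yield one dict per CSV row, renamed to tick fields and parsed as floats.
        for row in csv.DictReader(self.handle):
            yield {field: float(row[column]) for field, column in self.mapping.items()}


if __name__ == "__main__":
    sample = io.StringIO("ts,mid,bid,ask\n1,100.0,99.9,100.1\n2,100.2,100.1,100.3\n")
    reader = SimpleCSVTicks(sample, {"mid_price": "mid", "best_bid": "bid", "best_ask": "ask"})
    for tick in reader.iter_ticks():
        print(tick)
```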

## Programmatic API (Python)

```python
from mmrl import run_backtest

cfg = {
    "run_tag": "demo", "seed": 42, "steps": 500, "output_dir": "results",
    "agent": {"spread": 0.1, "inventory_sensitivity": 0.05},
    # OU mid-price dynamics with volatility regime switching
    "market": {
        "ou_enabled": True,
        "ou": {"mu": 100, "kappa": 0.05, "sigma": 0.5, "dt": 1.0},
        "vol_regime": {"enabled": True, "high_sigma_scale": 3.0, "switch_prob": 0.02},
    },
    "execution": {"base_arrival_rate": 1.0, "alpha": 1.5},
    # Fee and slippage settings, in basis points
    "fees": {"fee_bps": 1.0, "slippage_bps": 2.0, "maker_bps": -0.5, "taker_bps": 1.0},
}

run_dir, metrics = run_backtest(cfg)
print(run_dir, metrics)
```
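The `execution` and `fees` blocks drive probabilistic fills and trading costs. A standard way to model fills, used in the Avellaneda–Stoikov framework, is Poisson order arrival with intensity decaying exponentially in the quote's distance from mid. The sketch assumes `base_arrival_rate` plays the role of the base intensity and `alpha` the decay rate, and that bps values apply to fill notional; these mappings are assumptions, and `fee_bps`/`slippage_bps` are not modeled because their combination is not documented here.

```python
import math


def fill_probability(offset, base_arrival_rate=1.0, alpha=1.5, dt=1.0):
    """P(at least one fill within dt) for a quote `offset` away from mid.

    Assumes Poisson order arrivals with intensity base_arrival_rate * exp(-alpha * offset).
    """
    intensity = base_arrival_rate * math.exp(-alpha * offset)
    return 1.0 - math.exp(-intensity * dt)


def fee_on_fill(price, size, bps):
    """Fee in quote currency for a fill; a negative bps value is a rebate."""
    return price * size * bps / 10_000.0


if __name__ == "__main__":
    for offset in (0.0, 0.1, 0.5, 1.0):
        print(offset, round(fill_probability(offset), 4))
    print("maker fee on 10 @ 100:", fee_on_fill(100.0, 10.0, bps=-0.5))
    print("taker fee on 10 @ 100:", fee_on_fill(100.0, 10.0, bps=1.0))
```

Quoting wider lowers the fill probability but earns more per fill; that trade-off is what the quoting strategies above are balancing.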

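From source (clone the repository first):

```
git clone https://github.com/Aviral1303/Market-Making-RL-Agent.git
cd Market-Making-RL-Agent
pip install -r requirements.txt
```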

## Colab Notebooks

- Quickstart: [Open in Colab](https://colab.research.google.com/github/Aviral1303/Market-Making-RL-Agent/blob/main/notebooks/Quickstart.ipynb)

- Grid Search + Heatmaps: [Open in Colab](https://colab.research.google.com/github/Aviral1303/Market-Making-RL-Agent/blob/main/notebooks/Grid_Heatmaps.ipynb)

- RL vs Rule-Based: [Open in Colab](https://colab.research.google.com/github/Aviral1303/Market-Making-RL-Agent/blob/main/notebooks/RL_vs_RuleBased.ipynb)

- Multi-asset & Replay: [Open in Colab](https://colab.research.google.com/github/Aviral1303/Market-Making-RL-Agent/blob/main/notebooks/MultiAsset_Replay.ipynb)

## Multi-asset

- Configure under `multi_asset` in `configs/inventory.yaml`

- Run:

```
python3 experiments/evaluate_multi_asset.py
python3 analysis/plot_multi_asset.py results/.../multi_asset_history.csv
```

## API

- Start stack:

```
docker compose up -d redis worker api mlflow
curl http://localhost:8000/health
curl http://localhost:8000/config/schema  # config JSON schema
```

- Submit jobs and fetch results (replace `<run_id>` and `<run_dir_name>` with values from your runs):

```
curl -X POST http://localhost:8000/backtest -H 'Content-Type: application/json' -d '{"steps": 200}'
curl -X POST http://localhost:8000/grid -H 'Content-Type: application/json' -d '{"execution": {"alpha_grid": [1.0, 1.5]}}'
curl "http://localhost:8000/trades/<run_id>?limit=100"
curl http://localhost:8000/runs/<run_dir_name>/artifacts
curl -L -o run.zip http://localhost:8000/runs/<run_dir_name>/download
```
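The same job submission can be made from Python's standard library. This sketch builds the request separately from sending it so the payload can be inspected; the endpoint and body are copied from the first curl command, while the response format (for example, which job or run ID fields are returned) is not documented here, so `send_json` simply returns the parsed JSON. Both function names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # address used by the curl examples


def build_backtest_request(overrides: dict, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (without sending) a POST /backtest request carrying a JSON body."""
    return urllib.request.Request(
        f"{base_url}/backtest",
        data=json.dumps(overrides).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_json(req: urllib.request.Request, timeout: float = 10.0):
    """Send a request and decode its JSON response; needs the API stack running."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_backtest_request({"steps": 200})
    print(req.get_method(), req.full_url, req.data)
```

With the stack running, `send_json(build_backtest_request({"steps": 200}))` submits the same job as the first curl command.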

## Hyperparameter Optimization

```
python3 experiments/hyperopt.py
```

## Notable features

- Multi-asset Gym wrapper with per-asset, per-level actions (offsets + sizes)

- Depth-aware agent placing quotes at multiple levels with regime-conditioned parameters

- DuckDB persistence of runs/metrics/trades and a `/trades/{run_id}` endpoint

- MLflow logging of parameters, metrics, and artifacts, with the run ID recorded for each run

- CLI extras: `mmrl fetch-data` (CCXT sample), `mmrl config-validate`, `mmrl config-schema`
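Depth-aware quoting with per-level offsets and sizes can be pictured as a ladder of bids and asks around the mid, with the ladder parameters chosen by the current volatility regime. The sketch below is illustrative only: `Quote`, `REGIME_PARAMS`, `quote_ladder` and `regime_ladder` are hypothetical, and the numeric offsets and sizes are made-up values rather than the package's defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    side: str
    price: float
    size: float


# Hypothetical per-regime (offsets, sizes): wider and smaller quotes when volatility is high.
REGIME_PARAMS = {
    "calm": ([0.05, 0.10, 0.20], [1.0, 2.0, 3.0]),
    "high_vol": ([0.15, 0.30, 0.60], [0.5, 1.0, 1.5]),
}


def quote_ladder(mid: float, offsets: list[float], sizes: list[float]) -> list[Quote]:
    """One bid and one ask per depth level; offsets[i] is level i's distance from mid."""
    if len(offsets) != len(sizes):
        raise ValueError("offsets and sizes must have the same length")
    quotes = []
    for offset, size in zip(offsets, sizes):
        quotes.append(Quote("bid", mid - offset, size))
        quotes.append(Quote("ask", mid + offset, size))
    return quotes


def regime_ladder(mid: float, regime: str) -> list[Quote]:
    """Select the ladder parameters for the current volatility regime."""
    offsets, sizes = REGIME_PARAMS[regime]
    return quote_ladder(mid, offsets, sizes)


if __name__ == "__main__":
    for q in regime_ladder(100.0, "high_vol"):
        print(q)
```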

## Roadmap

- Postgres-backed storage and public demo deployment

- Size-aware RL policy across multi-asset Gym env

- Notebooks + Colab badges for quick experimentation

## Packaging

- Install from source, or build a wheel with `python -m build` (requires the `build` package).

- Optional extras (for example, `pip install "mmrl[rl]"`):

  - `mmrl[api]` for the FastAPI stack

  - `mmrl[rl]` for Gymnasium/SB3

## Data utilities

- Fetch sample trades to Parquet:

```
python3 -m mmrl.cli fetch-data --exchange binance --symbol BTC/USDT --limit 1000 --out data/btcusdt.parquet
```

## Raw data

{

"_id": null,

"home_page": null,

"name": "mmrl",

"maintainer": null,

"docs_url": null,

"requires_python": ">=3.10",

"maintainer_email": null,

"keywords": "market-making, quant, reinforcement-learning, finance, mlflow, fastapi",

"author": "Aviral Poddar",

"author_email": null,

"download_url": "https://files.pythonhosted.org/packages/96/55/51010a93d348f6c40b489d380e015a7664859cb68fbe5e02d3db07d0b6b2/mmrl-0.1.7.tar.gz",

"platform": null,


"bugtrack_url": null,

"license": null,

"summary": "Market Making RL - simulation, experiments, and CLI",

"version": "0.1.7",

"project_urls": {

"Homepage": "https://github.com/Aviral1303/Market-Making-RL-Agent",

"Issues": "https://github.com/Aviral1303/Market-Making-RL-Agent/issues",

"Repository": "https://github.com/Aviral1303/Market-Making-RL-Agent"

},

"split_keywords": [

"market-making",

" quant",

" reinforcement-learning",

" finance",

" mlflow",

" fastapi"

],

"urls": [

{

"comment_text": null,

"digests": {

"blake2b_256": "5ed3a5f1675d97a8e3df17a249385351e98f44ff4acff3cb8d3fc4b0def087d0",

"md5": "f81a761b65243d4cdd63ed2aa5a69e5d",

"sha256": "6cc4b846f2b8c0293db0814194ad59beda9bcaff4ea7b0a01e6358ee9adc1d9a"

},

"downloads": -1,

"filename": "mmrl-0.1.7-py3-none-any.whl",

"has_sig": false,

"md5_digest": "f81a761b65243d4cdd63ed2aa5a69e5d",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": ">=3.10",

"size": 49711,

"upload_time": "2025-08-31T19:44:18",

"upload_time_iso_8601": "2025-08-31T19:44:18.276373Z",

"url": "https://files.pythonhosted.org/packages/5e/d3/a5f1675d97a8e3df17a249385351e98f44ff4acff3cb8d3fc4b0def087d0/mmrl-0.1.7-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": null,

"digests": {

"blake2b_256": "965551010a93d348f6c40b489d380e015a7664859cb68fbe5e02d3db07d0b6b2",

"md5": "a9f3269b6726fc2ea7a4d8892fed1fa7",

"sha256": "a1dde0a6f6b9d9512a6113e099847c7fbf9ff4373b19b5a163af1891bbbdf01b"

},

"downloads": -1,

"filename": "mmrl-0.1.7.tar.gz",

"has_sig": false,

"md5_digest": "a9f3269b6726fc2ea7a4d8892fed1fa7",

"packagetype": "sdist",

"python_version": "source",

"requires_python": ">=3.10",

"size": 41792,

"upload_time": "2025-08-31T19:44:19",

"upload_time_iso_8601": "2025-08-31T19:44:19.330667Z",

"url": "https://files.pythonhosted.org/packages/96/55/51010a93d348f6c40b489d380e015a7664859cb68fbe5e02d3db07d0b6b2/mmrl-0.1.7.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2025-08-31 19:44:19",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "Aviral1303",

"github_project": "Market-Making-RL-Agent",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [
{"name": "numpy", "specs": [["==", "1.23.5"]]},
{"name": "pandas", "specs": [["==", "2.3.1"]]},
{"name": "matplotlib", "specs": [["==", "3.10.5"]]},
{"name": "seaborn", "specs": [["==", "0.13.2"]]},
{"name": "tqdm", "specs": [["==", "4.67.1"]]},
{"name": "PyYAML", "specs": [["==", "6.0.2"]]},
{"name": "mlflow", "specs": [["==", "2.14.2"]]},
{"name": "typer", "specs": [["==", "0.12.3"]]},
{"name": "fastapi", "specs": [["==", "0.115.6"]]},
{"name": "uvicorn", "specs": [["==", "0.30.6"]]},
{"name": "pytest", "specs": [["==", "8.3.3"]]},
{"name": "httpx", "specs": [["==", "0.27.2"]]},
{"name": "prometheus-client", "specs": [["==", "0.20.0"]]},
{"name": "gymnasium", "specs": [["==", "0.28.1"]]},
{"name": "stable-baselines3", "specs": [["==", "2.3.2"]]},
{"name": "rq", "specs": [["==", "1.16.2"]]},
{"name": "redis", "specs": [["==", "5.0.8"]]},
{"name": "duckdb", "specs": [["==", "1.3.2"]]},
{"name": "optuna", "specs": [["==", "4.0.0"]]},
{"name": "ccxt", "specs": [["==", "4.4.59"]]}
],

"lcname": "mmrl"

}