# Podcast-LLM: AI-Powered Podcast Generation

[Code coverage](https://codecov.io/gh/evandempsey/podcast-llm) | [Repository](https://github.com/evandempsey/podcast-llm) | [Releases](https://github.com/evandempsey/podcast-llm/releases) | [Issues](https://github.com/evandempsey/podcast-llm/issues) | [License: CC BY-NC 4.0](https://creativecommons.org/licenses/by-nc/4.0/)

An intelligent system that automatically generates engaging podcast conversations using LLMs and text-to-speech technology.

[View Documentation](https://evandempsey.github.io/podcast-llm/)

## Features

- Two modes of operation:
  - Research mode: Automated research and content gathering using Tavily search
  - Context mode: Generate podcasts from provided source materials (URLs and files)

- Dynamic podcast outline generation

- Natural conversational script writing with multiple Q&A rounds

- High-quality text-to-speech synthesis using Google Cloud or ElevenLabs

- Checkpoint system to save progress and resume generation

- Configurable voices and audio settings

- Gradio UI

## Examples

Listen to sample podcasts generated using Podcast-LLM:

### Structured JSON Output from LLMs (Google multispeaker voices)

[Listen on SoundCloud](https://soundcloud.com/evan-dempsey-153309617/llm-structured-output)

### UFO Crash Retrieval (ElevenLabs voices)

[Listen on SoundCloud](https://soundcloud.com/evan-dempsey-153309617/ufo-crash-retrieval-elevenlabs-voices)

### The Behenian Fixed Stars (Google multispeaker voices)

[Listen on SoundCloud](https://soundcloud.com/evan-dempsey-153309617/behenian-fixed-stars)

### Podcast-LLM Overview (Google multispeaker voices)

[Listen on SoundCloud](https://soundcloud.com/evan-dempsey-153309617/podcast-llm-with-anthropic-and-google-multispeaker)

### Robotic Process Automation (Google voices)

[Listen on SoundCloud](https://soundcloud.com/evan-dempsey-153309617/robotic-process-automation-google-voices)

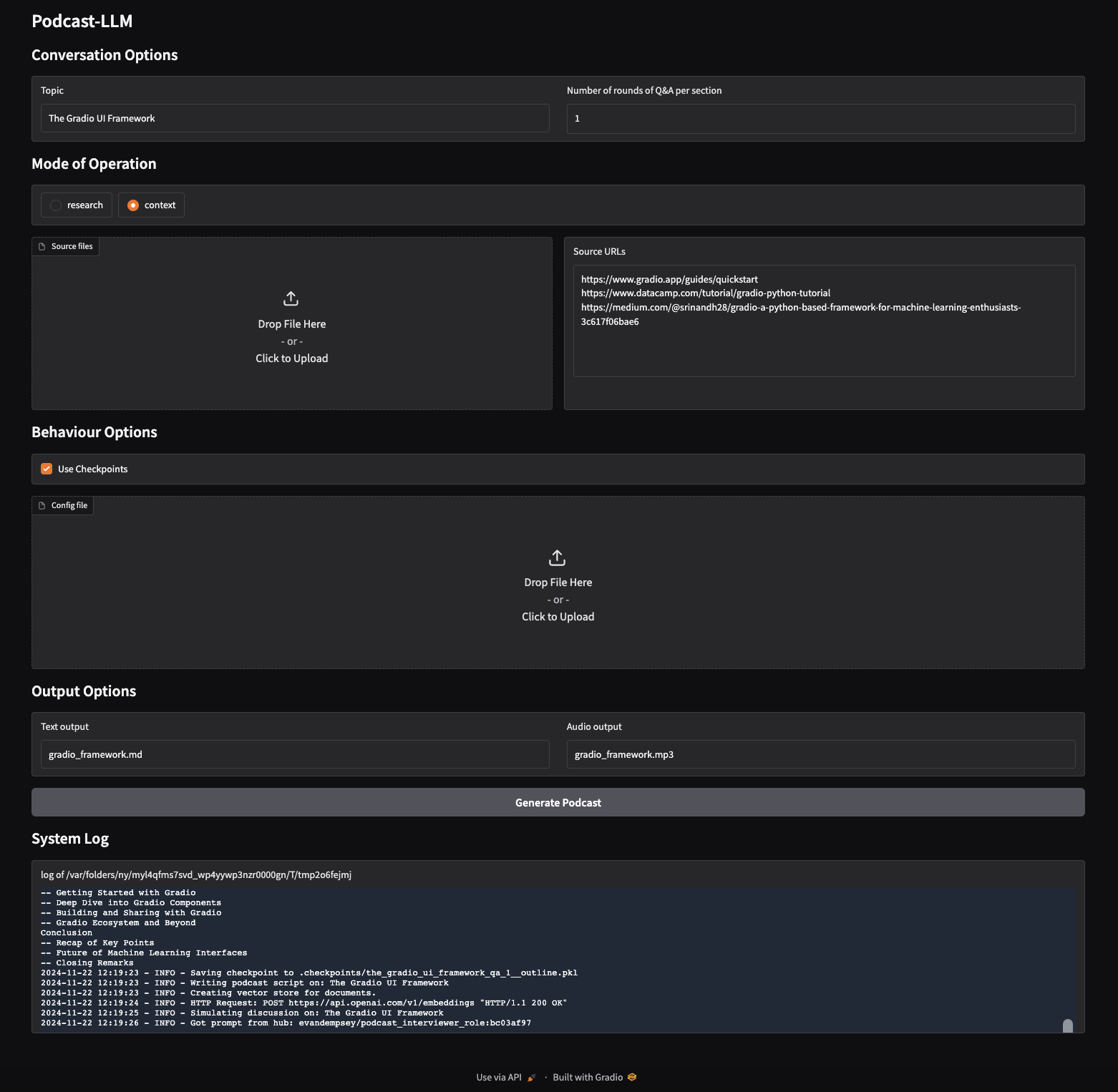

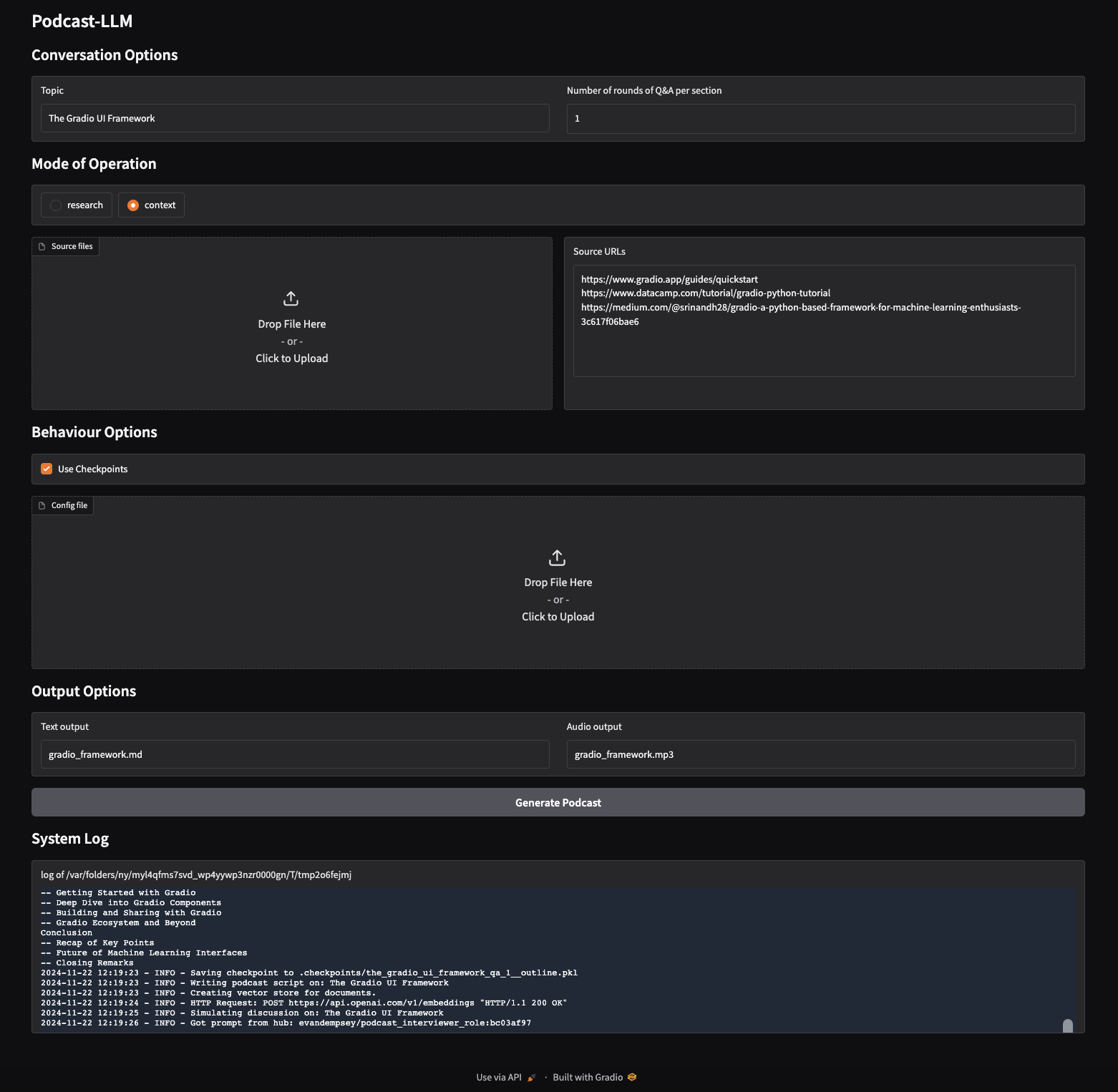

## Web Interface

## Installation

1. Install using pip:

```bash
pip install podcast-llm
```

2. Set up environment variables in `.env`:

```
OPENAI_API_KEY=your_openai_key
GOOGLE_API_KEY=your_google_key
ELEVENLABS_API_KEY=your_elevenlabs_key
TAVILY_API_KEY=your_tavily_key
ANTHROPIC_API_KEY=your_anthropic_api_key
```
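Before a long generation run, it can help to confirm that the keys are actually visible to the process. The sketch below is not part of Podcast-LLM: `missing_keys` and `EXPECTED_KEYS` are hypothetical names, and it assumes the variables from `.env` have already been exported into the environment. Which keys a given run really needs probably depends on the providers you configure; the list simply mirrors the five names above.

```python
import os

# Key names copied from the .env example above. Whether every one of them is
# required for a given run is an assumption, not something the README states.
EXPECTED_KEYS = [
    "OPENAI_API_KEY",
    "GOOGLE_API_KEY",
    "ELEVENLABS_API_KEY",
    "TAVILY_API_KEY",
    "ANTHROPIC_API_KEY",
]


def missing_keys(env=None):
    """Return the expected key names that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in EXPECTED_KEYS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Missing or empty:", ", ".join(missing))
    else:
        print("All expected keys are set.")
```

Passing a plain dictionary instead of relying on `os.environ` keeps the check easy to exercise without touching real credentials.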

## Usage

1. Generate a podcast about a topic:

```bash
# Research mode (default) - automatically researches the topic
podcast-llm "Artificial Intelligence"

# Context mode - uses provided sources
podcast-llm "Machine Learning" --mode context --sources paper.pdf https://example.com/article
```

2. Options:

```bash
# Customize number of Q&A rounds per section
podcast-llm "Linux" --qa-rounds 3

# Disable checkpointing
podcast-llm "Space Exploration" --checkpoint false

# Generate audio output
podcast-llm "Quantum Computing" --audio-output podcast.mp3

# Generate Markdown output
podcast-llm "Machine Learning" --text-output podcast.md
```

3. Customize voices and other settings in `config/config.yaml`

4. Launch the Gradio web interface:

```bash
# Start the web UI
podcast-llm-gui
```

This launches a user-friendly web interface where you can:

- Enter a podcast topic

- Choose between research and context modes

- Upload source files and URLs for context mode

- Configure Q&A rounds and checkpointing

- Specify output paths for text and audio

- Monitor generation progress in real time
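The command-line invocations shown in steps 1 and 2 can also be assembled from a script, for example to generate several episodes in a row. The following is a minimal sketch rather than an API provided by the package: `build_command` and `generate` are hypothetical helpers, they use only flags that appear in the examples above, and they assume those flags can be combined in a single call, which the README does not confirm.

```python
import subprocess


def build_command(topic, mode=None, sources=None, qa_rounds=None,
                  audio_output=None, text_output=None):
    """Assemble a podcast-llm argument list from the flags shown above."""
    cmd = ["podcast-llm", topic]
    if mode is not None:
        cmd += ["--mode", mode]
    if sources:
        # Sources may be file paths or URLs, as in the context-mode example.
        cmd += ["--sources", *sources]
    if qa_rounds is not None:
        cmd += ["--qa-rounds", str(qa_rounds)]
    if audio_output is not None:
        cmd += ["--audio-output", audio_output]
    if text_output is not None:
        cmd += ["--text-output", text_output]
    return cmd


def generate(topic, **options):
    """Run the CLI; raises CalledProcessError on a non-zero exit status."""
    return subprocess.run(build_command(topic, **options), check=True)
```

For instance, `build_command("Machine Learning", mode="context", sources=["paper.pdf", "https://example.com/article"])` returns `['podcast-llm', 'Machine Learning', '--mode', 'context', '--sources', 'paper.pdf', 'https://example.com/article']`. Passing the arguments as a list, rather than as one shell string, avoids quoting problems with topics that contain spaces.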

## License

This project is licensed under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license.

This means you are free to:

- Share: Copy and redistribute the material in any medium or format

- Adapt: Remix, transform, and build upon the material

Under the following terms:

- Attribution: You must give appropriate credit, provide a link to the license, and indicate if changes were made

- NonCommercial: You may not use the material for commercial purposes

- No additional restrictions: You may not apply legal terms or technological measures that legally restrict others from doing anything the license permits

For commercial use, please contact evandempsey@gmail.com to obtain a commercial license.

The full license text can be found at: https://creativecommons.org/licenses/by-nc/4.0/legalcode

## Acknowledgements

This project was inspired by [podcastfy](https://github.com/souzatharsis/podcastfy), which provides a framework for generating podcasts using LLMs.

This implementation differs by automating the research and content gathering process, allowing for fully autonomous podcast generation about any topic without requiring manual research or content curation.

Raw data

{

"_id": null,

"home_page": "https://github.com/evandempsey/podcast-llm",

"name": "podcast-llm",

"maintainer": null,

"docs_url": null,

"requires_python": "<4.0,>=3.12",

"maintainer_email": null,

"keywords": "podcast, llm, ai",

"author": "Evan Dempsey",

"author_email": "evandempsey@gmail.com",

"download_url": "https://files.pythonhosted.org/packages/31/c8/ae769941f83f94a8d2efa155b599b870592ca9274258c720eedb8a829a08/podcast_llm-0.2.2.tar.gz",

"platform": null,

"bugtrack_url": null,

"license": "Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0)",

"summary": "An intelligent system that automatically generates engaging podcast conversations using LLMs and text-to-speech technology.",

"version": "0.2.2",

"project_urls": {

"Documentation": "https://evandempsey.github.io/podcast-llm/",

"Homepage": "https://github.com/evandempsey/podcast-llm",

"Repository": "https://github.com/evandempsey/podcast-llm"

},

"split_keywords": [

"podcast",

" llm",

" ai"

],

"urls": [

{

"comment_text": "",

"digests": {

"blake2b_256": "399fb5e6f414f1804cb8b64c2f172142cce475fe65010331653a1889bbf5a3e7",

"md5": "be1ffbaea17f2bc44d6cd136d0c49c72",

"sha256": "dacff98c3cd12e5857bced192a9954fdafe5cea06a8a4be273b607862b85160a"

},

"downloads": -1,

"filename": "podcast_llm-0.2.2-py3-none-any.whl",

"has_sig": false,

"md5_digest": "be1ffbaea17f2bc44d6cd136d0c49c72",

"packagetype": "bdist_wheel",

"python_version": "py3",

"requires_python": "<4.0,>=3.12",

"size": 46924,

"upload_time": "2024-12-08T14:23:41",

"upload_time_iso_8601": "2024-12-08T14:23:41.148223Z",

"url": "https://files.pythonhosted.org/packages/39/9f/b5e6f414f1804cb8b64c2f172142cce475fe65010331653a1889bbf5a3e7/podcast_llm-0.2.2-py3-none-any.whl",

"yanked": false,

"yanked_reason": null

},

{

"comment_text": "",

"digests": {

"blake2b_256": "31c8ae769941f83f94a8d2efa155b599b870592ca9274258c720eedb8a829a08",

"md5": "c549b53c8c9987a537f8b04e169b98e7",

"sha256": "492e79de5edfcbeb0d2d6d6e7edd5ce9ff145e2f0c9f6074070a9ca10f5eba14"

},

"downloads": -1,

"filename": "podcast_llm-0.2.2.tar.gz",

"has_sig": false,

"md5_digest": "c549b53c8c9987a537f8b04e169b98e7",

"packagetype": "sdist",

"python_version": "source",

"requires_python": "<4.0,>=3.12",

"size": 36622,

"upload_time": "2024-12-08T14:23:42",

"upload_time_iso_8601": "2024-12-08T14:23:42.828240Z",

"url": "https://files.pythonhosted.org/packages/31/c8/ae769941f83f94a8d2efa155b599b870592ca9274258c720eedb8a829a08/podcast_llm-0.2.2.tar.gz",

"yanked": false,

"yanked_reason": null

}

],

"upload_time": "2024-12-08 14:23:42",

"github": true,

"gitlab": false,

"bitbucket": false,

"codeberg": false,

"github_user": "evandempsey",

"github_project": "podcast-llm",

"travis_ci": false,

"coveralls": false,

"github_actions": true,

"requirements": [

{

"name": "aiohappyeyeballs",

"specs": [

[

"==",

"2.4.3"

]

]

},

{

"name": "aiohttp",

"specs": [

[

"==",

"3.10.10"

]

]

},

{

"name": "aiosignal",

"specs": [

[

"==",

"1.3.1"

]

]

},

{

"name": "alabaster",

"specs": [

[

"==",

"0.7.16"

]

]

},

{

"name": "annotated-types",

"specs": [

[

"==",

"0.7.0"

]

]

},

{

"name": "anthropic",

"specs": [

[

"==",

"0.38.0"

]

]

},

{

"name": "anyio",

"specs": [

[

"==",

"4.6.2.post1"

]

]

},

{

"name": "attrs",

"specs": [

[

"==",

"24.2.0"

]

]

},

{

"name": "babel",

"specs": [

[

"==",

"2.16.0"

]

]

},

{

"name": "beautifulsoup4",

"specs": [

[

"==",

"4.12.3"

]

]

},

{

"name": "cachetools",

"specs": [

[

"==",

"5.5.0"

]

]

},

{

"name": "certifi",

"specs": [

[

"==",

"2024.8.30"

]

]

},

{

"name": "charset-normalizer",

"specs": [

[

"==",

"3.4.0"

]

]

},

{

"name": "click",

"specs": [

[

"==",

"8.1.7"

]

]

},

{

"name": "coverage",

"specs": [

[

"==",

"7.6.4"

]

]

},

{

"name": "cssselect",

"specs": [

[

"==",

"1.2.0"

]

]

},

{

"name": "dataclasses-json",

"specs": [

[

"==",

"0.6.7"

]

]

},

{

"name": "defusedxml",

"specs": [

[

"==",

"0.7.1"

]

]

},

{

"name": "distro",

"specs": [

[

"==",

"1.9.0"

]

]

},

{

"name": "docstring_parser",

"specs": [

[

"==",

"0.16"

]

]

},

{

"name": "docutils",

"specs": [

[

"==",

"0.20.1"

]

]

},

{

"name": "elevenlabs",

"specs": [

[

"==",

"1.12.1"

]

]

},

{

"name": "feedfinder2",

"specs": [

[

"==",

"0.0.4"

]

]

},

{

"name": "feedparser",

"specs": [

[

"==",

"6.0.11"

]

]

},

{

"name": "filelock",

"specs": [

[

"==",

"3.16.1"

]

]

},

{

"name": "frozenlist",

"specs": [

[

"==",

"1.5.0"

]

]

},

{

"name": "fsspec",

"specs": [

[

"==",

"2024.10.0"

]

]

},

{

"name": "google-ai-generativelanguage",

"specs": [

[

"==",

"0.6.10"

]

]

},

{

"name": "google-api-core",

"specs": [

[

"==",

"2.22.0"

]

]

},

{

"name": "google-api-python-client",

"specs": [

[

"==",

"2.151.0"

]

]

},

{

"name": "google-auth",

"specs": [

[

"==",

"2.35.0"

]

]

},

{

"name": "google-auth-httplib2",

"specs": [

[

"==",

"0.2.0"

]

]

},

{

"name": "google-cloud-aiplatform",

"specs": [

[

"==",

"1.71.1"

]

]

},

{

"name": "google-cloud-bigquery",

"specs": [

[

"==",

"3.26.0"

]

]

},

{

"name": "google-cloud-core",

"specs": [

[

"==",

"2.4.1"

]

]

},

{

"name": "google-cloud-resource-manager",

"specs": [

[

"==",

"1.13.0"

]

]

},

{

"name": "google-cloud-storage",

"specs": [

[

"==",

"2.18.2"

]

]

},

{

"name": "google-cloud-texttospeech",

"specs": [

[

"==",

"2.20.0"

]

]

},

{

"name": "google-crc32c",

"specs": [

[

"==",

"1.6.0"

]

]

},

{

"name": "google-generativeai",

"specs": [

[

"==",

"0.8.3"

]

]

},

{

"name": "google-resumable-media",

"specs": [

[

"==",

"2.7.2"

]

]

},

{

"name": "googleapis-common-protos",

"specs": [

[

"==",

"1.65.0"

]

]

},

{

"name": "grpc-google-iam-v1",

"specs": [

[

"==",

"0.13.1"

]

]

},

{

"name": "grpcio",

"specs": [

[

"==",

"1.67.1"

]

]

},

{

"name": "grpcio-status",

"specs": [

[

"==",

"1.67.1"

]

]

},

{

"name": "h11",

"specs": [

[

"==",

"0.14.0"

]

]

},

{

"name": "httpcore",

"specs": [

[

"==",

"1.0.6"

]

]

},

{

"name": "httplib2",

"specs": [

[

"==",

"0.22.0"

]

]

},

{

"name": "httpx",

"specs": [

[

"==",

"0.27.2"

]

]

},

{

"name": "httpx-sse",

"specs": [

[

"==",

"0.4.0"

]

]

},

{

"name": "huggingface-hub",

"specs": [

[

"==",

"0.26.2"

]

]

},

{

"name": "idna",

"specs": [

[

"==",

"3.10"

]

]

},

{

"name": "imagesize",

"specs": [

[

"==",

"1.4.1"

]

]

},

{

"name": "iniconfig",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "jieba3k",

"specs": [

[

"==",

"0.35.1"

]

]

},

{

"name": "Jinja2",

"specs": [

[

"==",

"3.1.4"

]

]

},

{

"name": "jiter",

"specs": [

[

"==",

"0.6.1"

]

]

},

{

"name": "joblib",

"specs": [

[

"==",

"1.4.2"

]

]

},

{

"name": "jsonpatch",

"specs": [

[

"==",

"1.33"

]

]

},

{

"name": "jsonpointer",

"specs": [

[

"==",

"3.0.0"

]

]

},

{

"name": "langchain",

"specs": [

[

"==",

"0.3.4"

]

]

},

{

"name": "langchain-anthropic",

"specs": [

[

"==",

"0.2.4"

]

]

},

{

"name": "langchain-community",

"specs": [

[

"==",

"0.3.3"

]

]

},

{

"name": "langchain-core",

"specs": [

[

"==",

"0.3.15"

]

]

},

{

"name": "langchain-google-genai",

"specs": [

[

"==",

"2.0.4"

]

]

},

{

"name": "langchain-google-vertexai",

"specs": [

[

"==",

"2.0.7"

]

]

},

{

"name": "langchain-openai",

"specs": [

[

"==",

"0.2.3"

]

]

},

{

"name": "langchain-text-splitters",

"specs": [

[

"==",

"0.3.0"

]

]

},

{

"name": "langsmith",

"specs": [

[

"==",

"0.1.137"

]

]

},

{

"name": "lxml",

"specs": [

[

"==",

"5.3.0"

]

]

},

{

"name": "lxml_html_clean",

"specs": [

[

"==",

"0.3.1"

]

]

},

{

"name": "MarkupSafe",

"specs": [

[

"==",

"3.0.2"

]

]

},

{

"name": "marshmallow",

"specs": [

[

"==",

"3.23.0"

]

]

},

{

"name": "multidict",

"specs": [

[

"==",

"6.1.0"

]

]

},

{

"name": "mypy-extensions",

"specs": [

[

"==",

"1.0.0"

]

]

},

{

"name": "newspaper3k",

"specs": [

[

"==",

"0.2.8"

]

]

},

{

"name": "nltk",

"specs": [

[

"==",

"3.9.1"

]

]

},

{

"name": "numpy",

"specs": [

[

"==",

"1.26.4"

]

]

},

{

"name": "openai",

"specs": [

[

"==",

"1.52.2"

]

]

},

{

"name": "orjson",

"specs": [

[

"==",

"3.10.10"

]

]

},

{

"name": "packaging",

"specs": [

[

"==",

"24.1"

]

]

},

{

"name": "pillow",

"specs": [

[

"==",

"11.0.0"

]

]

},

{

"name": "pluggy",

"specs": [

[

"==",

"1.5.0"

]

]

},

{

"name": "pockets",

"specs": [

[

"==",

"0.9.1"

]

]

},

{

"name": "propcache",

"specs": [

[

"==",

"0.2.0"

]

]

},

{

"name": "proto-plus",

"specs": [

[

"==",

"1.25.0"

]

]

},

{

"name": "protobuf",

"specs": [

[

"==",

"5.28.3"

]

]

},

{

"name": "pyasn1",

"specs": [

[

"==",

"0.6.1"

]

]

},

{

"name": "pyasn1_modules",

"specs": [

[

"==",

"0.4.1"

]

]

},

{

"name": "pydantic",

"specs": [

[

"==",

"2.9.2"

]

]

},

{

"name": "pydantic-settings",

"specs": [

[

"==",

"2.6.0"

]

]

},

{

"name": "pydantic_core",

"specs": [

[

"==",

"2.23.4"

]

]

},

{

"name": "pydub",

"specs": [

[

"==",

"0.25.1"

]

]

},

{

"name": "Pygments",

"specs": [

[

"==",

"2.18.0"

]

]

},

{

"name": "pyparsing",

"specs": [

[

"==",

"3.2.0"

]

]

},

{

"name": "pypdf",

"specs": [

[

"==",

"5.1.0"

]

]

},

{

"name": "pytest",

"specs": [

[

"==",

"8.3.3"

]

]

},

{

"name": "pytest-cov",

"specs": [

[

"==",

"6.0.0"

]

]

},

{

"name": "pytest-mock",

"specs": [

[

"==",

"3.14.0"

]

]

},

{

"name": "python-dateutil",

"specs": [

[

"==",

"2.9.0.post0"

]

]

},

{

"name": "python-dotenv",

"specs": [

[

"==",

"1.0.1"

]

]

},

{

"name": "PyYAML",

"specs": [

[

"==",

"6.0.2"

]

]

},

{

"name": "regex",

"specs": [

[

"==",

"2024.9.11"

]

]

},

{

"name": "requests",

"specs": [

[

"==",

"2.32.3"

]

]

},

{

"name": "requests-file",

"specs": [

[

"==",

"2.1.0"

]

]

},

{

"name": "requests-toolbelt",

"specs": [

[

"==",

"1.0.0"

]

]

},

{

"name": "rsa",

"specs": [

[

"==",

"4.9"

]

]

},

{

"name": "sgmllib3k",

"specs": [

[

"==",

"1.0.0"

]

]

},

{

"name": "shapely",

"specs": [

[

"==",

"2.0.6"

]

]

},

{

"name": "six",

"specs": [

[

"==",

"1.16.0"

]

]

},

{

"name": "sniffio",

"specs": [

[

"==",

"1.3.1"

]

]

},

{

"name": "snowballstemmer",

"specs": [

[

"==",

"2.2.0"

]

]

},

{

"name": "soupsieve",

"specs": [

[

"==",

"2.6"

]

]

},

{

"name": "Sphinx",

"specs": [

[

"==",

"7.2.6"

]

]

},

{

"name": "sphinx-rtd-theme",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "sphinxcontrib-applehelp",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "sphinxcontrib-devhelp",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "sphinxcontrib-htmlhelp",

"specs": [

[

"==",

"2.1.0"

]

]

},

{

"name": "sphinxcontrib-jquery",

"specs": [

[

"==",

"4.1"

]

]

},

{

"name": "sphinxcontrib-jsmath",

"specs": [

[

"==",

"1.0.1"

]

]

},

{

"name": "sphinxcontrib-napoleon",

"specs": [

[

"==",

"0.7"

]

]

},

{

"name": "sphinxcontrib-qthelp",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "sphinxcontrib-serializinghtml",

"specs": [

[

"==",

"2.0.0"

]

]

},

{

"name": "SQLAlchemy",

"specs": [

[

"==",

"2.0.36"

]

]

},

{

"name": "tavily-python",

"specs": [

[

"==",

"0.5.0"

]

]

},

{

"name": "tenacity",

"specs": [

[

"==",

"9.0.0"

]

]

},

{

"name": "tiktoken",

"specs": [

[

"==",

"0.8.0"

]

]

},

{

"name": "tinysegmenter",

"specs": [

[

"==",

"0.3"

]

]

},

{

"name": "tldextract",

"specs": [

[

"==",

"5.1.2"

]

]

},

{

"name": "tokenizers",

"specs": [

[

"==",

"0.20.1"

]

]

},

{

"name": "tqdm",

"specs": [

[

"==",

"4.66.6"

]

]

},

{

"name": "typing-inspect",

"specs": [

[

"==",

"0.9.0"

]

]

},

{

"name": "typing_extensions",

"specs": [

[

"==",

"4.12.2"

]

]

},

{

"name": "uritemplate",

"specs": [

[

"==",

"4.1.1"

]

]

},

{

"name": "urllib3",

"specs": [

[

"==",

"2.2.3"

]

]

},

{

"name": "websockets",

"specs": [

[

"==",

"13.1"

]

]

},

{

"name": "wikipedia",

"specs": [

[

"==",

"1.4.0"

]

]

},

{

"name": "yarl",

"specs": [

[

"==",

"1.16.0"

]

]

},

{

"name": "youtube-transcript-api",

"specs": [

[

"==",

"0.6.2"

]

]

}

],

"lcname": "podcast-llm"

}